Publications

in reversed chronological order, generated by jekyll-scholar.

2026

- [C48]

STRinGS: Selective Text Refinement in Gaussian SplattingIn Winter Conference on Applications of Computer Vision (WACV), Mar 2026New!

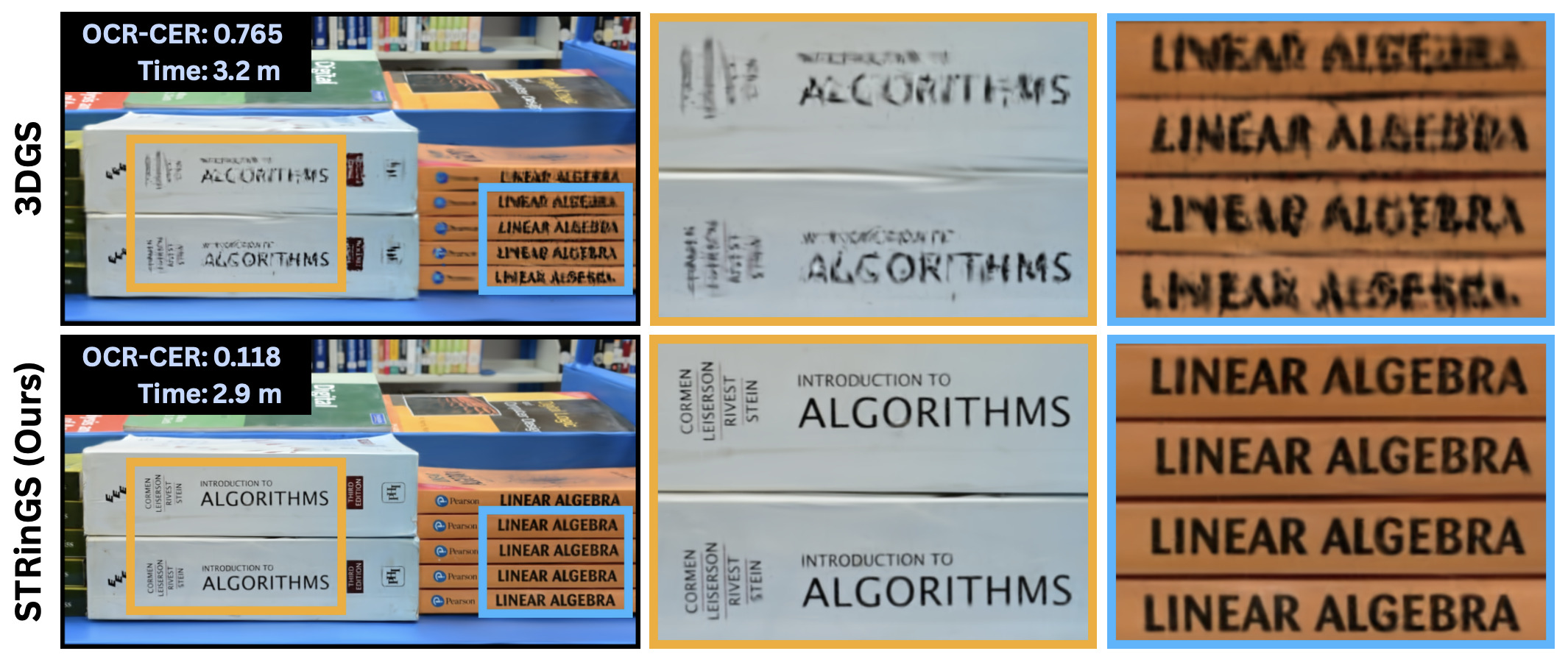

STRinGS: Selective Text Refinement in Gaussian SplattingIn Winter Conference on Applications of Computer Vision (WACV), Mar 2026New!Text as signs, labels, or instructions is a critical element of real-world scenes as they can convey important contextual information. 3D representations such as 3D Gaussian Splatting (3DGS) struggle to preserve fine-grained text details, while achieving high visual fidelity. Small errors in textual element reconstruction can lead to significant semantic loss. We propose STRinGS, a text-aware, selective refinement framework to address this issue for 3DGS reconstruction. Our method treats text and non-text regions separately, refining text regions first and merging them with non-text regions later for full-scene optimization. STRinGS produces sharp, readable text even in challenging configurations. We introduce a text readability measure OCR Character Error Rate (CER) to evaluate the efficacy on text regions. STRinGS results in a 63.6% relative improvement over 3DGS at just 7K iterations. We also introduce a curated dataset STRinGS-360 with diverse text scenarios to evaluate text readability in 3D reconstruction. Our method and dataset together push the boundaries of 3D scene understanding in text-rich environments, paving the way for more robust text-aware reconstruction methods.

@inproceedings{Raundhal2026_STRinGS, author = {Raundhal, Abhinav and Behera, Gaurav and Narayanan, P J and Sarvadevabhatla, Ravi Kiran and Tapaswi, Makarand}, title = {{STRinGS: Selective Text Refinement in Gaussian Splatting}}, year = {2026}, booktitle = {Winter Conference on Applications of Computer Vision (WACV)}, month = mar }

2025

- [J5]

MALeR: Improving Compositional Fidelity in Layout-Guided GenerationShivank Saxena, Dhruv Srivastava, and Makarand TapaswiACM Transactions on Graphics (ToG), Dec 2025Presented at Siggraph Asia (journal track)

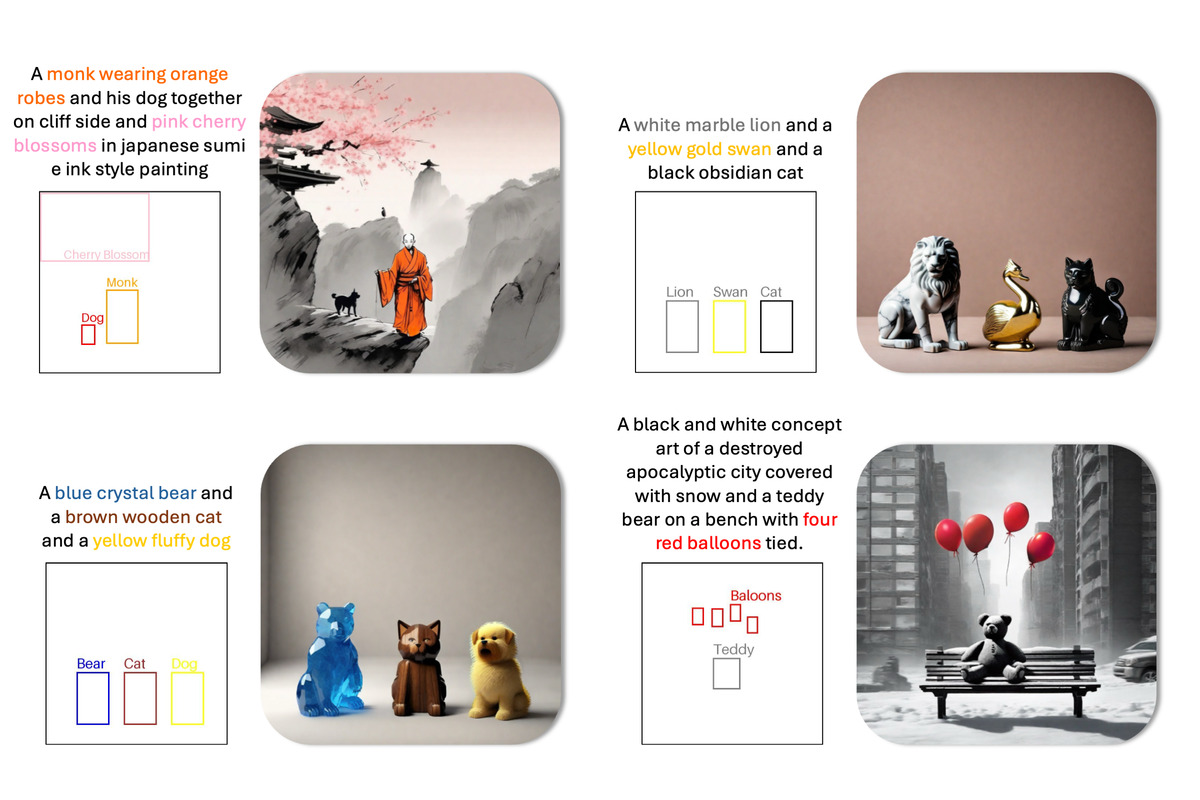

MALeR: Improving Compositional Fidelity in Layout-Guided GenerationShivank Saxena, Dhruv Srivastava, and Makarand TapaswiACM Transactions on Graphics (ToG), Dec 2025Presented at Siggraph Asia (journal track)Recent advances in text-to-image models have enabled a new era of creative and controllable image generation. However, generating compositional scenes with multiple subjects and attributes remains a significant challenge. To enhance user control over subject placement, several layout-guided methods have been proposed. However, these methods face numerous challenges, particularly in compositional scenes. Unintended subjects often appear outside the layouts, generated images can be out-of-distribution and contain unnatural artifacts, or attributes bleed across subjects, leading to incorrect visual outputs. In this work, we propose MALeR, a method that addresses each of these challenges. Given a text prompt and corresponding layouts, our method prevents subjects from appearing outside the given layouts while being in-distribution. Additionally, we propose a masked, attribute-aware binding mechanism that prevents attribute leakage, enabling accurate rendering of subjects with multiple attributes, even in complex compositional scenes. Qualitative and quantitative evaluation demonstrates that our method achieves superior performance in compositional accuracy, generation consistency, and attribute binding compared to previous work. MALeR is particularly adept at generating images of scenes with multiple subjects and multiple attributes per subject.

@article{Saxena2025_MALeR, author = {Saxena, Shivank and Srivastava, Dhruv and Tapaswi, Makarand}, title = {{MALeR: Improving Compositional Fidelity in Layout-Guided Generation}}, year = {2025}, journal = {ACM Transactions on Graphics (ToG)}, volume = {44}, number = {6}, month = dec, doi = {10.1145/3763341} } - [C47]

What You See is What You Ask: Evaluating Audio DescriptionsDivy Kala, Eshika Khandelwal, and Makarand TapaswiIn Empirical Methods in Natural Language Processing (EMNLP), Nov 2025Note: Main conference track, long paper

What You See is What You Ask: Evaluating Audio DescriptionsDivy Kala, Eshika Khandelwal, and Makarand TapaswiIn Empirical Methods in Natural Language Processing (EMNLP), Nov 2025Note: Main conference track, long paperAudio descriptions (ADs) narrate important visual details in movies, enabling Blind and Low Vision (BLV) users to understand narratives and appreciate visual details. Existing works in automatic AD generation mostly focus on few-second trimmed clips, and evaluate them by comparing against a single ground-truth reference AD. However, writing ADs is inherently subjective. Through alignment and analysis of two independent AD tracks for the same movies, we quantify the subjectivity in when and whether to describe, and what and how to highlight. Thus, we show that working with trimmed clips is inadequate. We propose ADQA, a QA benchmark that evaluates ADs at the level of few-minute long, coherent video segments, testing whether they would help BLV users understand the story and appreciate visual details. ADQA features visual appreciation (VA) questions about visual facts and narrative understanding (NU) questions based on the plot. Through ADQA, we show that current AD generation methods lag far behind human-authored ADs. We conclude with several recommendations for future work and introduce a public leaderboard for benchmarking.

@inproceedings{Kala2025_ADQA, author = {Kala, Divy and Khandelwal, Eshika and Tapaswi, Makarand}, title = {{What You See is What You Ask: Evaluating Audio Descriptions}}, year = {2025}, booktitle = {Empirical Methods in Natural Language Processing (EMNLP)}, month = nov, doi = {10.18653/v1/2025.emnlp-main.1199} } - [P3]

More than a Moment: Towards Coherent Sequences of Audio DescriptionsEshika Khandelwal, Junyu Xie, Tengda Han, Max Bain, Arsha Nagrani, Andrew Zisserman, Gül Varol, and Makarand TapaswiarXiv:2510.25440, Oct 2025

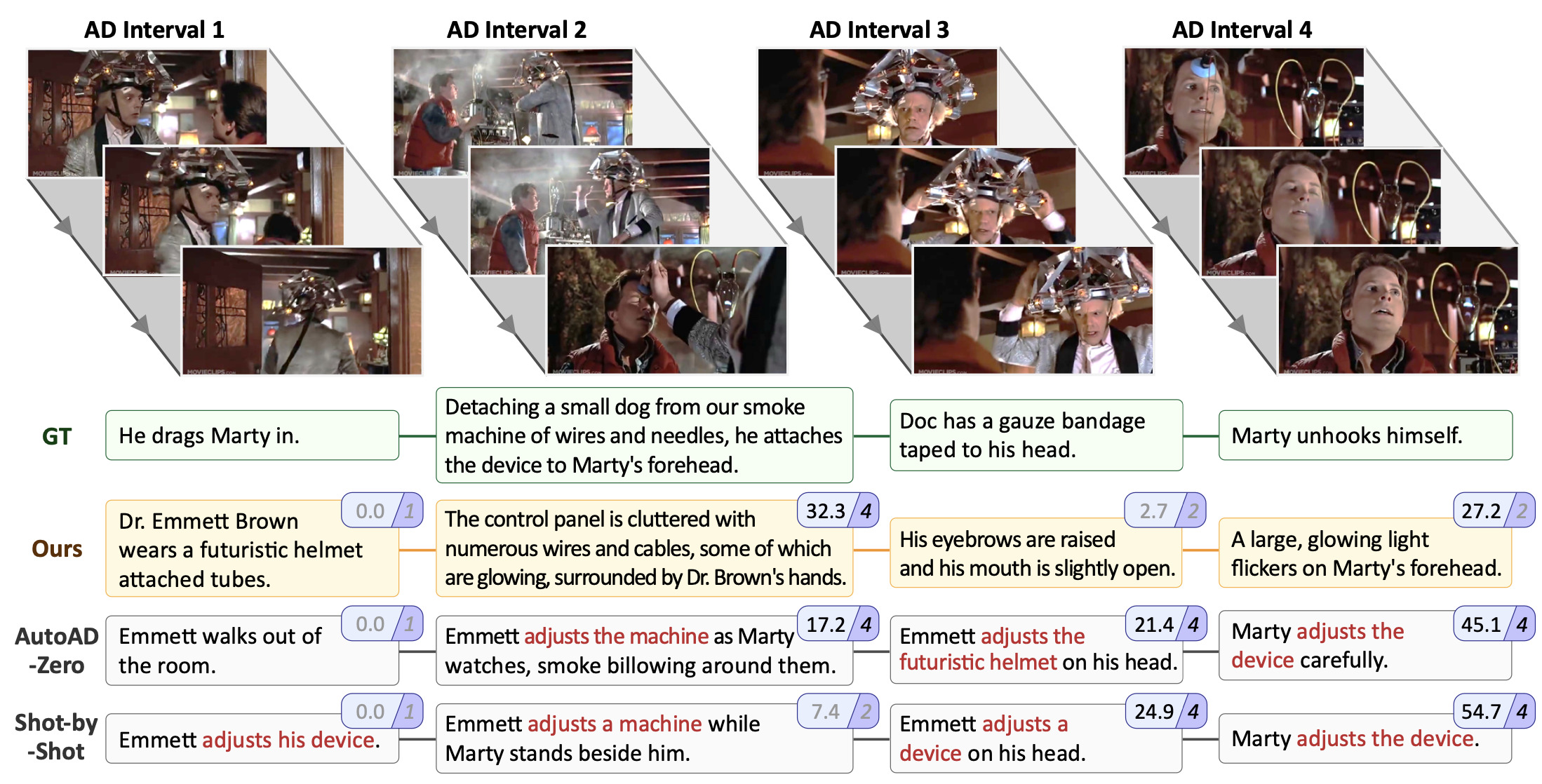

More than a Moment: Towards Coherent Sequences of Audio DescriptionsEshika Khandelwal, Junyu Xie, Tengda Han, Max Bain, Arsha Nagrani, Andrew Zisserman, Gül Varol, and Makarand TapaswiarXiv:2510.25440, Oct 2025Audio Descriptions (ADs) convey essential on-screen information, allowing visually impaired audiences to follow videos. To be effective, ADs must form a coherent sequence that helps listeners to visualise the unfolding scene, rather than describing isolated moments. However, most automatic methods generate each AD independently, often resulting in repetitive, incoherent descriptions. To address this, we propose a training-free method, CoherentAD, that first generates multiple candidate descriptions for each AD time interval, and then performs auto-regressive selection across the sequence to form a coherent and informative narrative. To evaluate AD sequences holistically, we introduce a sequence-level metric, StoryRecall, which measures how well the predicted ADs convey the ground truth narrative, alongside repetition metrics that capture the redundancy across consecutive AD outputs. Our method produces coherent AD sequences with enhanced narrative understanding, outperforming prior approaches that rely on independent generations.

@article{Khandelwal2025_CoherentAD, author = {Khandelwal, Eshika and Xie, Junyu and Han, Tengda and Bain, Max and Nagrani, Arsha and Zisserman, Andrew and Varol, Gül and Tapaswi, Makarand}, title = {{More than a Moment: Towards Coherent Sequences of Audio Descriptions}}, year = {2025}, month = oct, journal = {arXiv:2510.25440} } - [C46]

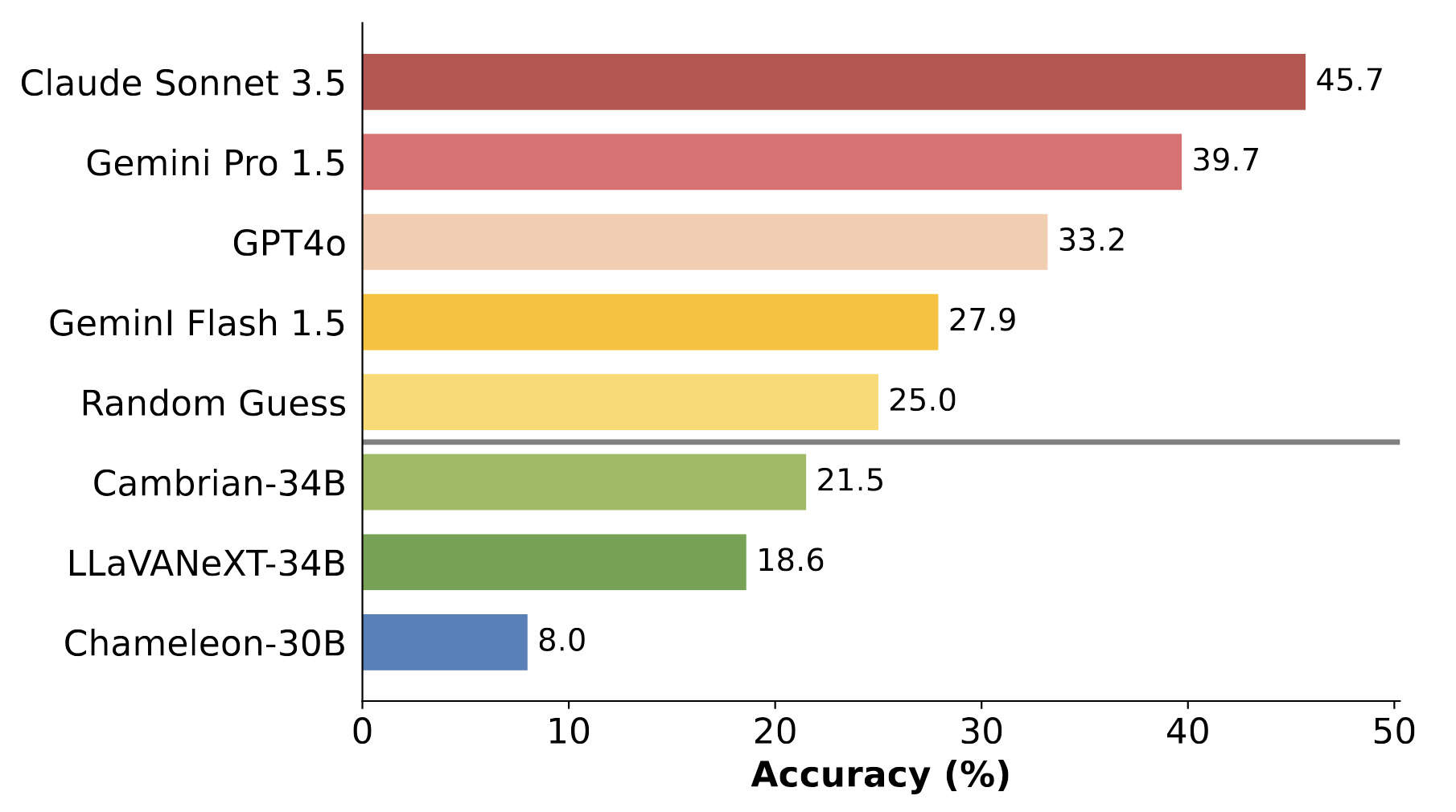

VELOCITI: Benchmarking Video-Language Compositional Reasoning with Strict EntailmentDarshana Saravanan* , Varun Gupta* , Darshan Singh*, Zeeshan Khan, Vineet Gandhi, and Makarand TapaswiIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2025Note: Also at CVPR Workshops: VidLLMs, EVAL-FoMo, WiCV, MMFM

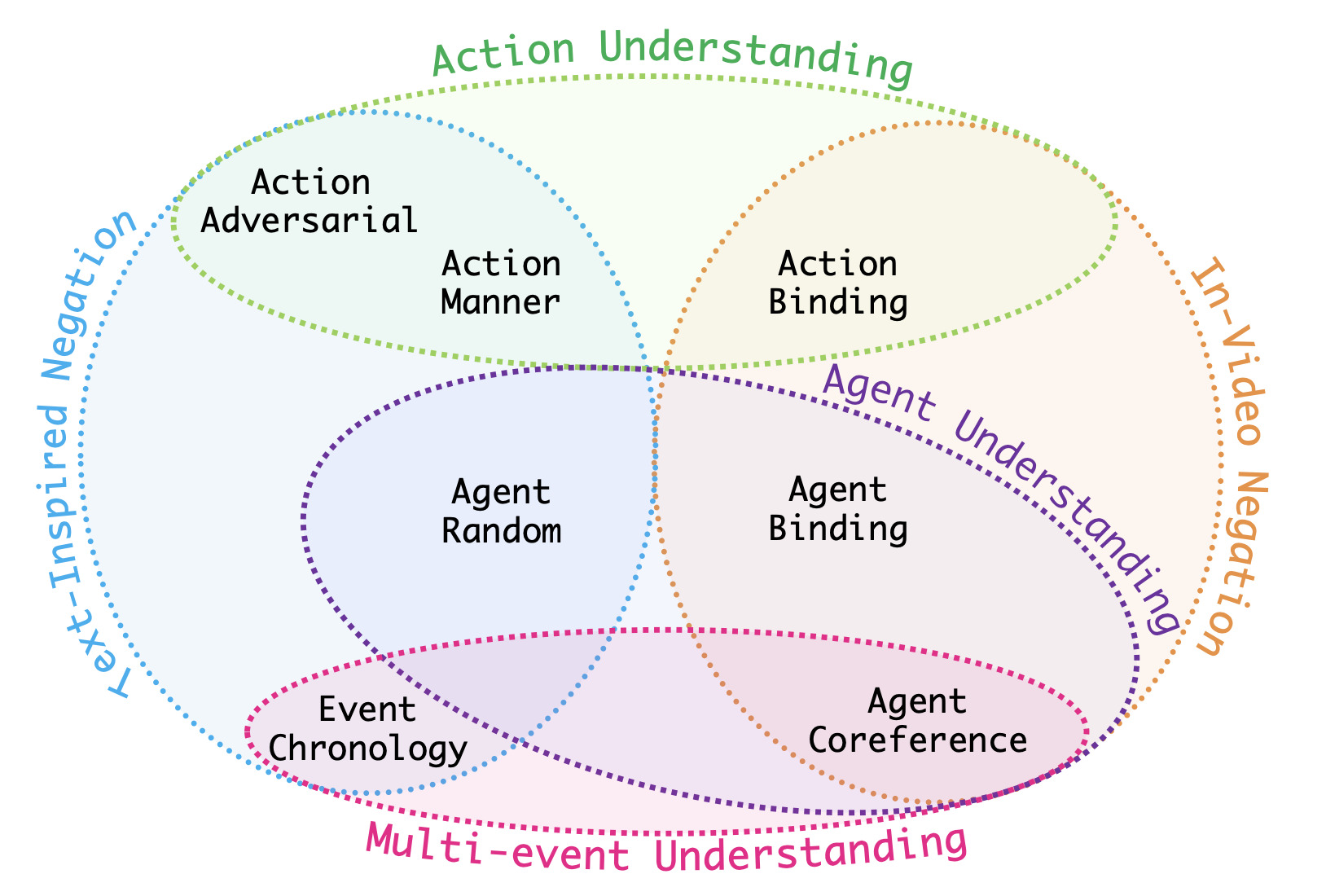

VELOCITI: Benchmarking Video-Language Compositional Reasoning with Strict EntailmentDarshana Saravanan* , Varun Gupta* , Darshan Singh*, Zeeshan Khan, Vineet Gandhi, and Makarand TapaswiIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2025Note: Also at CVPR Workshops: VidLLMs, EVAL-FoMo, WiCV, MMFMA fundamental aspect of compositional reasoning in a video is associating people and their actions across time. Recent years have seen great progress in general-purpose vision or video models and a move towards long-video understanding. While exciting, we take a step back and ask: are current models good at compositional reasoning on short videos? To this end, we introduce VELOCITI, a benchmark to study Video-LLMs by disentangling and assessing the comprehension of agents, actions, and their associations across multiple events. We adopt the Video-Language Entailment setup and propose StrictVLE that requires correct classification (rather than ranking) of the positive and negative caption. We evaluate several models and observe that even the best, LLaVA-OneVision (44.5%) and Gemini-1.5-Pro (49.3%), are far from human accuracy at 93.0%. Results show that action understanding lags behind agents, and negative captions created using entities appearing in the video perform worse than those obtained from pure text manipulation. We also present challenges with ClassicVLE and multiple-choice (MC) evaluation, strengthening our preference for StrictVLE. Finally, we validate that our benchmark requires visual inputs of multiple frames making it ideal to study video-language compositional reasoning.

@inproceedings{DDV2025_Velociti, author = {Saravanan, Darshana and Gupta, Varun and Singh, Darshan and Khan, Zeeshan and Gandhi, Vineet and Tapaswi, Makarand}, title = {{VELOCITI: Benchmarking Video-Language Compositional Reasoning with Strict Entailment}}, year = {2025}, booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)}, month = jun, doi = {10.1109/CVPR52734.2025.01762} } - [W13]

Investigating Mechanisms for In-Context Vision Language BindingDarshana Saravanan, Makarand Tapaswi, and Vineet GandhiIn CVPR Workshop on Mechanistic Interpretability in Vision (MIV), Jun 2025

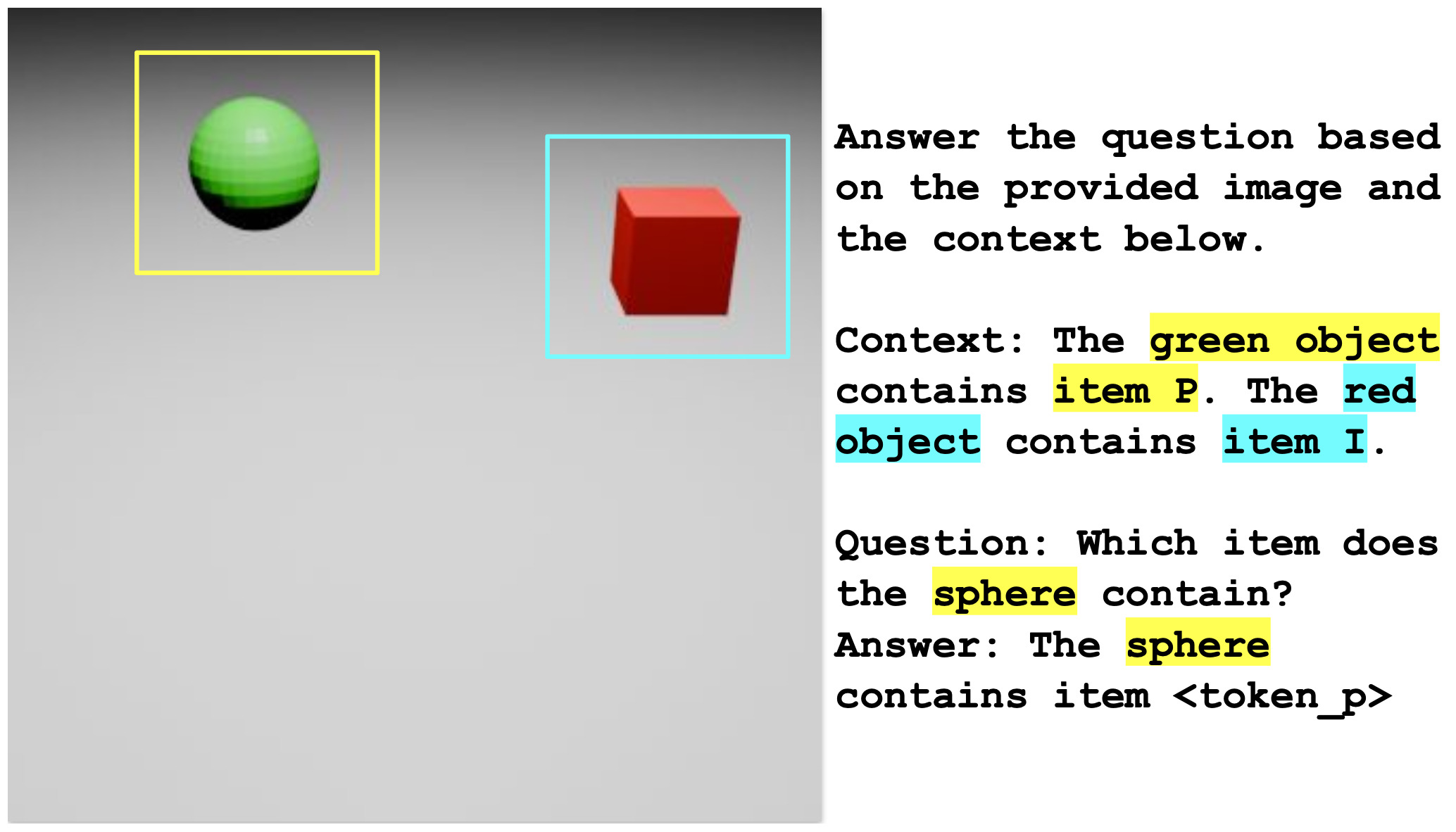

Investigating Mechanisms for In-Context Vision Language BindingDarshana Saravanan, Makarand Tapaswi, and Vineet GandhiIn CVPR Workshop on Mechanistic Interpretability in Vision (MIV), Jun 2025To understand a prompt, Vision-Language models (VLMs) must perceive the image, comprehend the text, and build associations within and across both modalities. For instance, given an ’image of a red toy car’, the model should associate this image to phrases like ’car’, ’red toy’, ’red object’, etc. Feng and Steinhardt propose the Binding ID mechanism in LLMs, suggesting that the entity and its corresponding attribute tokens share a Binding ID vector in the model activations. We investigate this for image-text binding in VLMs using a synthetic dataset and task that requires models to associate 3D objects in an image with their descriptions in the text. Our experiments demonstrate that VLMs assign a distinct Binding ID to an object’s image tokens and its textual references, enabling in-context association.

@inproceedings{Saravanan2025_BindingID, author = {Saravanan, Darshana and Tapaswi, Makarand and Gandhi, Vineet}, title = {{Investigating Mechanisms for In-Context Vision Language Binding}}, year = {2025}, booktitle = {CVPR Workshop on Mechanistic Interpretability in Vision (MIV)}, month = jun, doi = {10.1109/CVPRW67362.2025.00476} } - [C45]

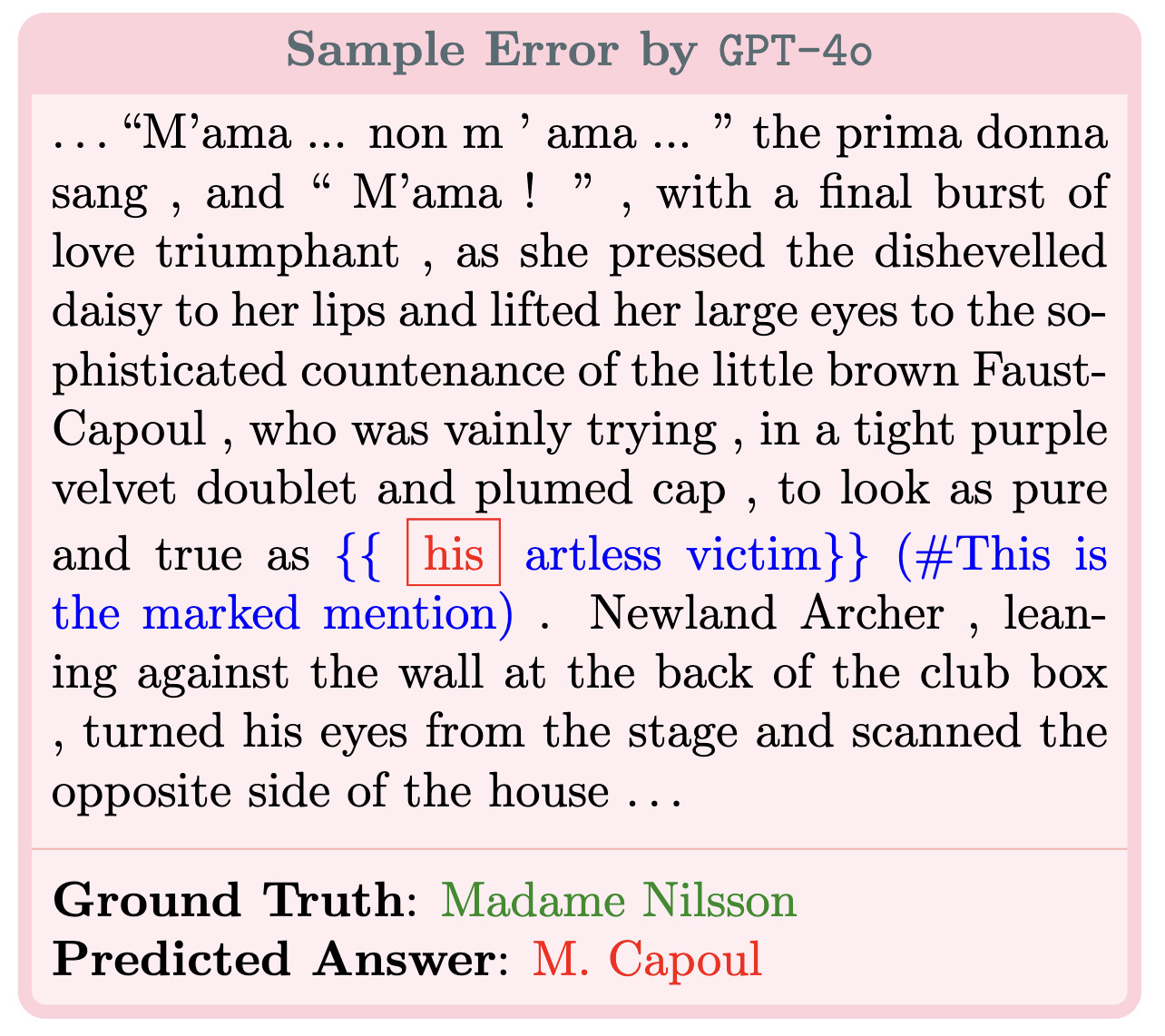

IdentifyMe: A Challenging Mention Resolution Benchmark for LLMsIn Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics (NAACL), May 2025Note: Main conference, Short paper

IdentifyMe: A Challenging Mention Resolution Benchmark for LLMsIn Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics (NAACL), May 2025Note: Main conference, Short paperRecent evaluations of LLMs on coreference resolution have revealed that traditional output formats and evaluation metrics do not fully capture the models’ referential understanding. To address this, we introduce IdentifyMe, a new benchmark for mention resolution presented in a multiple-choice question (MCQ) format, commonly used for evaluating LLMs. IdentifyMe features long narratives and employs heuristics to exclude easily identifiable mentions, creating a more challenging task. The benchmark also consists of a curated mixture of different mention types and corresponding entities, allowing for a fine-grained analysis of model performance. We evaluate both closed- and open source LLMs on IdentifyMe and observe a significant performance gap (20-30%) between the state-of-the-art sub-10B open models vs. closed ones. We observe that pronominal mentions, which have limited surface information, are typically much harder for models to resolve than nominal mentions. Additionally, we find that LLMs often confuse entities when their mentions overlap in nested structures. The highest-scoring model, GPT-4o, achieves 81.9% accuracy, highlighting the strong referential capabilities of state-of-the-art LLMs while also indicating room for further improvement.

@inproceedings{Manikantan2025_IdentifyMe, author = {Manikantan, Kawshik and Tapaswi, Makarand and Gandhi, Vineet and Toshniwal, Shubham}, title = {{IdentifyMe: A Challenging Mention Resolution Benchmark for LLMs}}, year = {2025}, booktitle = {Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics (NAACL)}, month = may, doi = {10.18653/v1/2025.naacl-short.64} } - [C44]

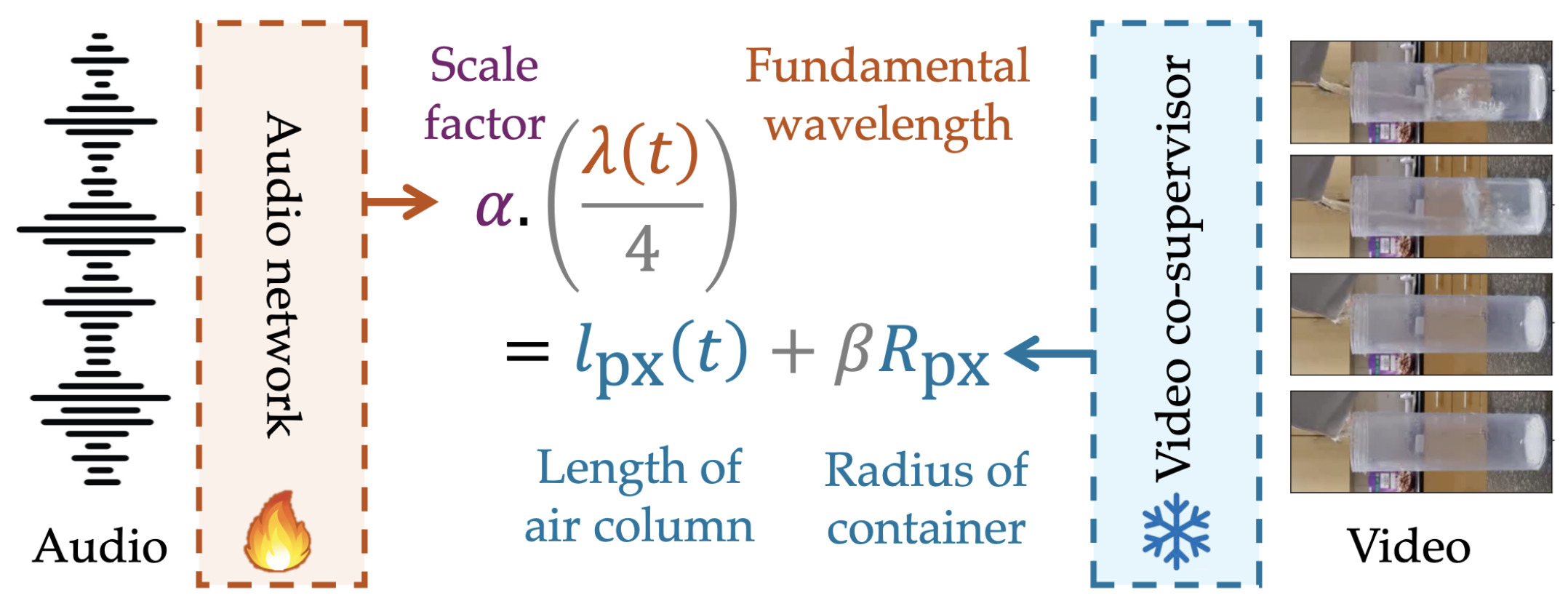

The Sound of Water: Inferring Physical Properties from Pouring LiquidsIn International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Apr 2025Note: Long version on arXiv

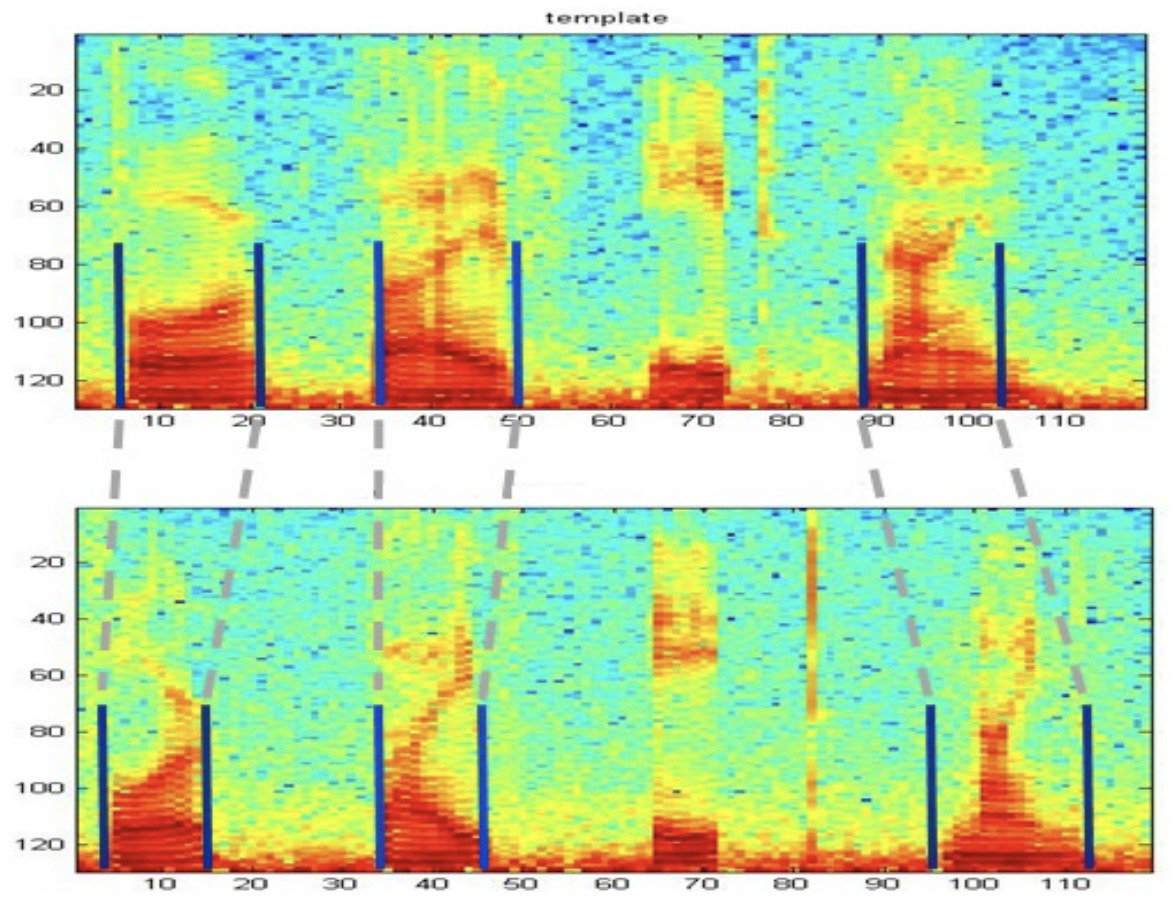

The Sound of Water: Inferring Physical Properties from Pouring LiquidsIn International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Apr 2025Note: Long version on arXivWe study the connection between audio-visual observations and the underlying physics of a mundane yet intriguing everyday activity: pouring liquids. Given only the sound of liquid pouring into a container, our objective is to automatically infer physical properties such as the liquid level, the shape and size of the container, the pouring rate and the time to fill. To this end, we: (i) show in theory that these properties can be determined from the fundamental frequency (pitch); (ii) train a pitch detection model with supervision from simulated data and visual data with a physics-inspired objective; (iii) introduce a new large dataset of real pouring videos for a systematic study; (iv) show that the trained model can indeed infer these physical properties for real data; and finally, (v) we demonstrate strong generalization to various container shapes, other datasets, and in-the-wild YouTube videos. Our work presents a keen understanding of a narrow yet rich problem at the intersection of acoustics, physics, and learning. It opens up applications to enhance multisensory perception in robotic pouring.

@inproceedings{Bagad2025_PouringSounds, author = {Bagad, Piyush and Tapaswi, Makarand and Snoek, Cees G M and Zisserman, Andrew}, title = {{The Sound of Water: Inferring Physical Properties from Pouring Liquids}}, year = {2025}, booktitle = {International Conference on Acoustics, Speech, and Signal Processing (ICASSP)}, month = apr, doi = {10.1109/ICASSP49660.2025.10889950} } - [C43]

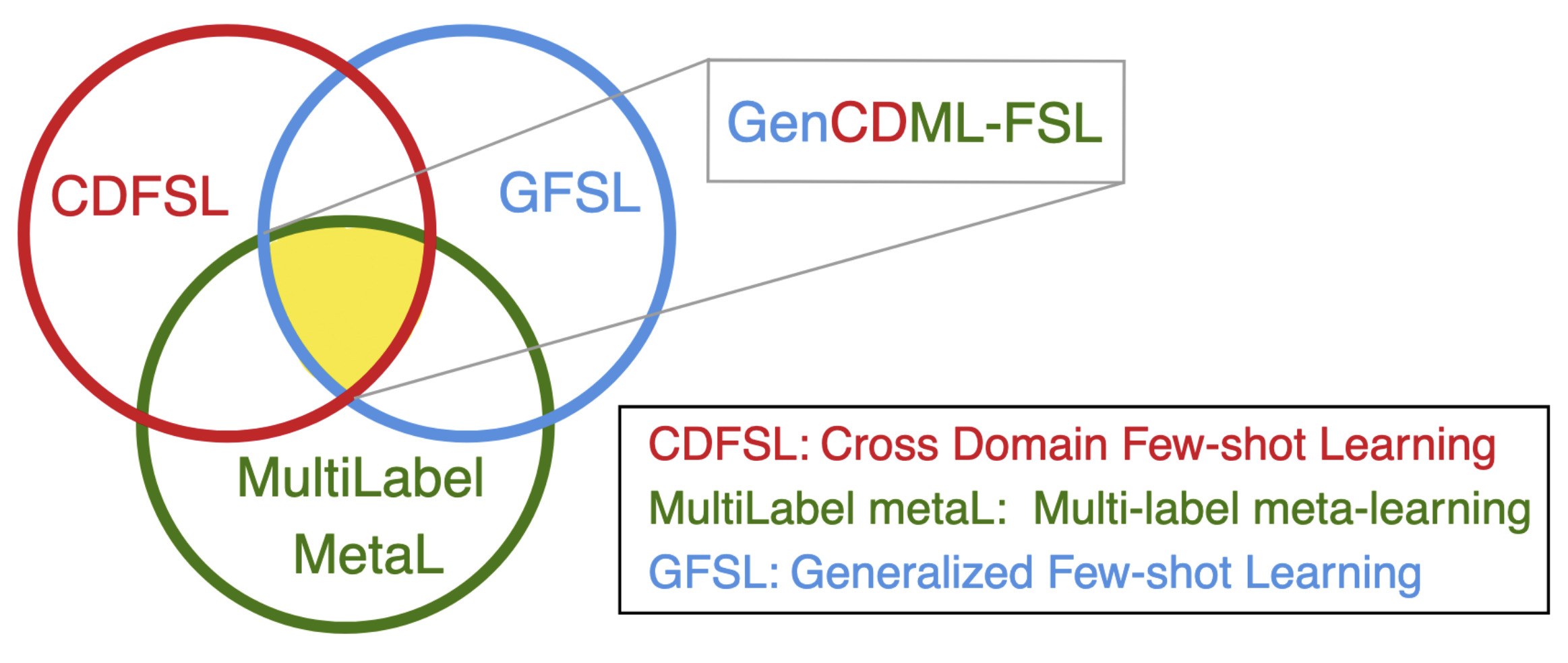

Generalized Cross-domain Multi-label Few-shot Learning for Chest X-raysIn International Symposium on Biomedical Imaging (ISBI), Apr 2025Note: Long version on arXiv

Generalized Cross-domain Multi-label Few-shot Learning for Chest X-raysIn International Symposium on Biomedical Imaging (ISBI), Apr 2025Note: Long version on arXivReal-world application of chest X-ray abnormality classification requires dealing with several challenges: (i) limited training data; (ii) training and evaluation sets that are derived from different domains; and (iii) classes that appear during training may have partial overlap with classes of interest during evaluation. To address these challenges, we present an integrated framework called Generalized Cross-Domain Multi-Label Few-Shot Learning (GenCDML-FSL). The framework supports overlap in classes during training and evaluation, cross-domain transfer, adopts meta-learning to learn using few training samples, and assumes each chest X-ray image is either normal or associated with one or more abnormalities. Furthermore, we propose Generalized Episodic Training (GenET), a training strategy that equips models to operate with multiple challenges observed in the GenCDML-FSL scenario. Comparisons with well-established methods such as transfer learning, hybrid transfer learning, and multi-label meta-learning on multiple datasets show the superiority of our approach.

@inproceedings{AimenWIAI2025_GenCDMLFSL, author = {Aimen, Aroof and Verma, Arsh and Tapaswi, Makarand and Krishnan, Narayanan C}, title = {{Generalized Cross-domain Multi-label Few-shot Learning for Chest X-rays}}, year = {2025}, booktitle = {International Symposium on Biomedical Imaging (ISBI)}, month = apr, doi = {10.1109/ISBI60581.2025.10980999} } - [C42]

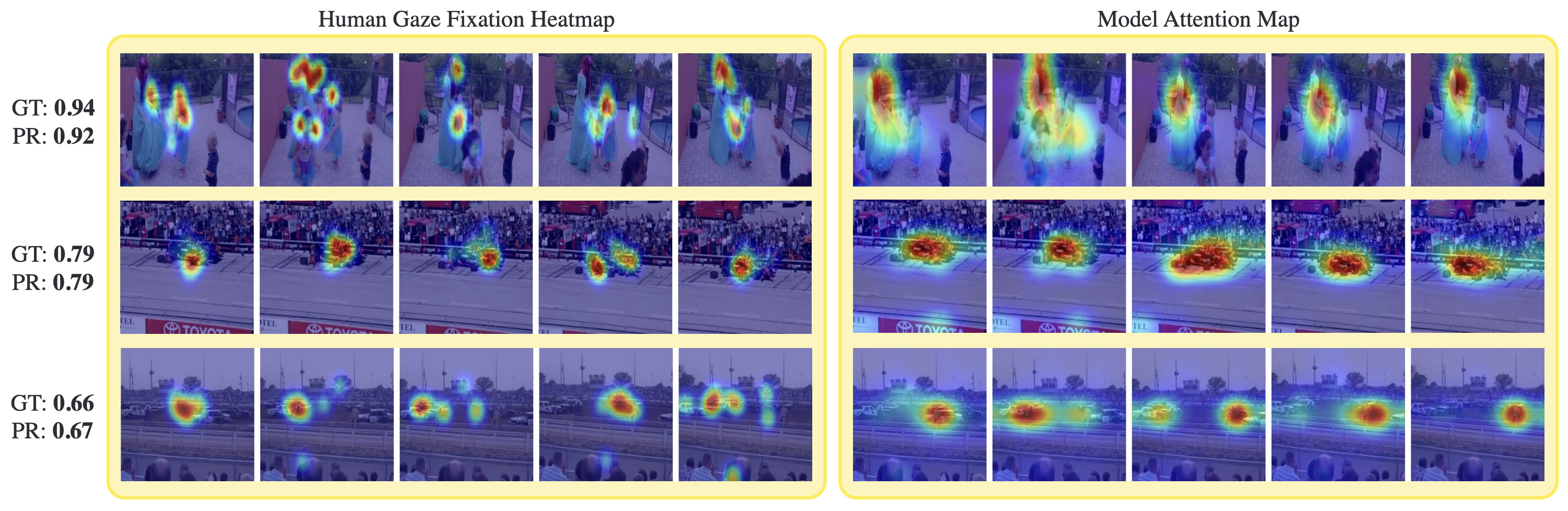

Seeing Eye to AI: Comparing Human Gaze and Model Attention in Video MemorabilityIn Winter Conference on Applications of Computer Vision (WACV), Feb 2025

Seeing Eye to AI: Comparing Human Gaze and Model Attention in Video MemorabilityIn Winter Conference on Applications of Computer Vision (WACV), Feb 2025Understanding what makes a video memorable has important applications in advertising or education technology. Towards this goal, we investigate spatio-temporal attention mechanisms underlying video memorability. Different from previous works that fuse multiple features, we adopt a simple CNN+Transformer architecture that enables analysis of spatio-temporal attention while matching state-of-the-art (SoTA) performance on video memorability prediction. We compare model attention against human gaze fixations collected through a small-scale eye-tracking study where humans perform the video memory task. We uncover the following insights: (i) Quantitative saliency metrics show that our model, trained only to predict a memorability score, exhibits similar spatial attention patterns to human gaze, especially for more memorable videos. (ii) Both, the model and humans, assign greater importance to initial frames in a video. (iii) Panoptic segmentation reveals that both (model and humans) assign a greater share of attention to things and less attention to stuff as compared to their occurrence probability. Thus, our approach captures key spatio-temporal and semantic attention signatures that are relevant for memorability.

@inproceedings{Prajneya2025_VideoMemorability, author = {Kumar, Prajneya and Khandelwal, Eshika and Tapaswi, Makarand and Sreekumar, Vishnu}, title = {{Seeing Eye to AI: Comparing Human Gaze and Model Attention in Video Memorability}}, year = {2025}, booktitle = {Winter Conference on Applications of Computer Vision (WACV)}, month = feb, doi = {10.1109/WACV61041.2025.00209} } - [J4]

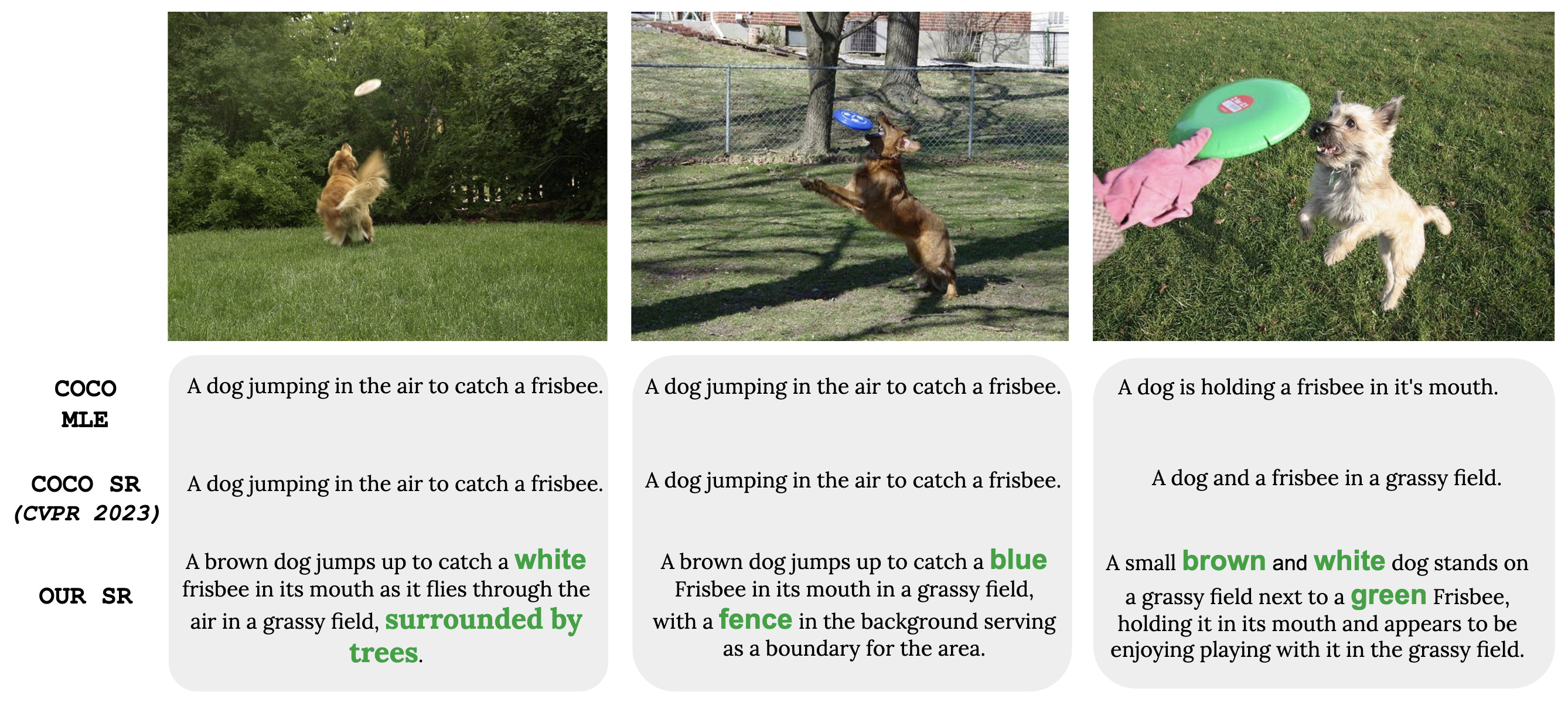

No Detail Left Behind: Revisiting Self-Retrieval for Fine-Grained Image CaptioningManu Gaur , Darshan Singh, and Makarand TapaswiTransactions on Machine Learning Research (TMLR), Jan 2025Presented at ICLR 2026 | Journal-to-Conference certification

No Detail Left Behind: Revisiting Self-Retrieval for Fine-Grained Image CaptioningManu Gaur , Darshan Singh, and Makarand TapaswiTransactions on Machine Learning Research (TMLR), Jan 2025Presented at ICLR 2026 | Journal-to-Conference certificationImage captioning systems are unable to generate fine-grained captions as they are trained on data that is either noisy (alt-text) or generic (human annotations). This is further exacerbated by maximum likelihood training that encourages generation of frequently occurring phrases. Previous works have tried to address this limitation by fine-tuning captioners with a self-retrieval (SR) reward. However, we find that SR fine-tuning has a tendency to reduce caption faithfulness and even hallucinate. In this work, we circumvent this bottleneck by improving the MLE initialization of the captioning system and designing a curriculum for the SR fine-tuning process. To this extent, we present (1) Visual Caption Boosting, a novel framework to instill fine-grainedness in generic image captioning datasets while remaining anchored in human annotations; and (2) BagCurri, a carefully designed training curriculum that more optimally leverages the contrastive nature of the self-retrieval reward. Jointly, they enable the captioner to describe fine-grained aspects in the image while preserving faithfulness to ground-truth captions. Our approach outperforms previous work by +8.9% on SR against 99 random distractors (RD100) (Dessi et al., 2023); and +7.6% on ImageCoDe. Additionally, existing metrics to evaluate captioning systems fail to reward diversity or evaluate a model’s fine-grained understanding ability. Our third contribution addresses this by proposing self-retrieval from the lens of evaluation. We introduce TrueMatch, a benchmark comprising bags of highly similar images that uses SR to assess the captioner’s ability to capture subtle visual distinctions. We evaluate and compare several state-of-the-art open-source MLLMs on TrueMatch, and find that our SR approach outperforms them all by a significant margin (e.g. +4.8% - 7.1% over Cambrian) while having 1-2 orders of magnitude fewer parameters.

@article{Manu2025_SelfRetrieval, author = {Gaur, Manu and Singh, Darshan and Tapaswi, Makarand}, title = {{No Detail Left Behind: Revisiting Self-Retrieval for Fine-Grained Image Captioning}}, year = {2025}, journal = {Transactions on Machine Learning Research (TMLR)}, month = jan }

2024

- [C41]

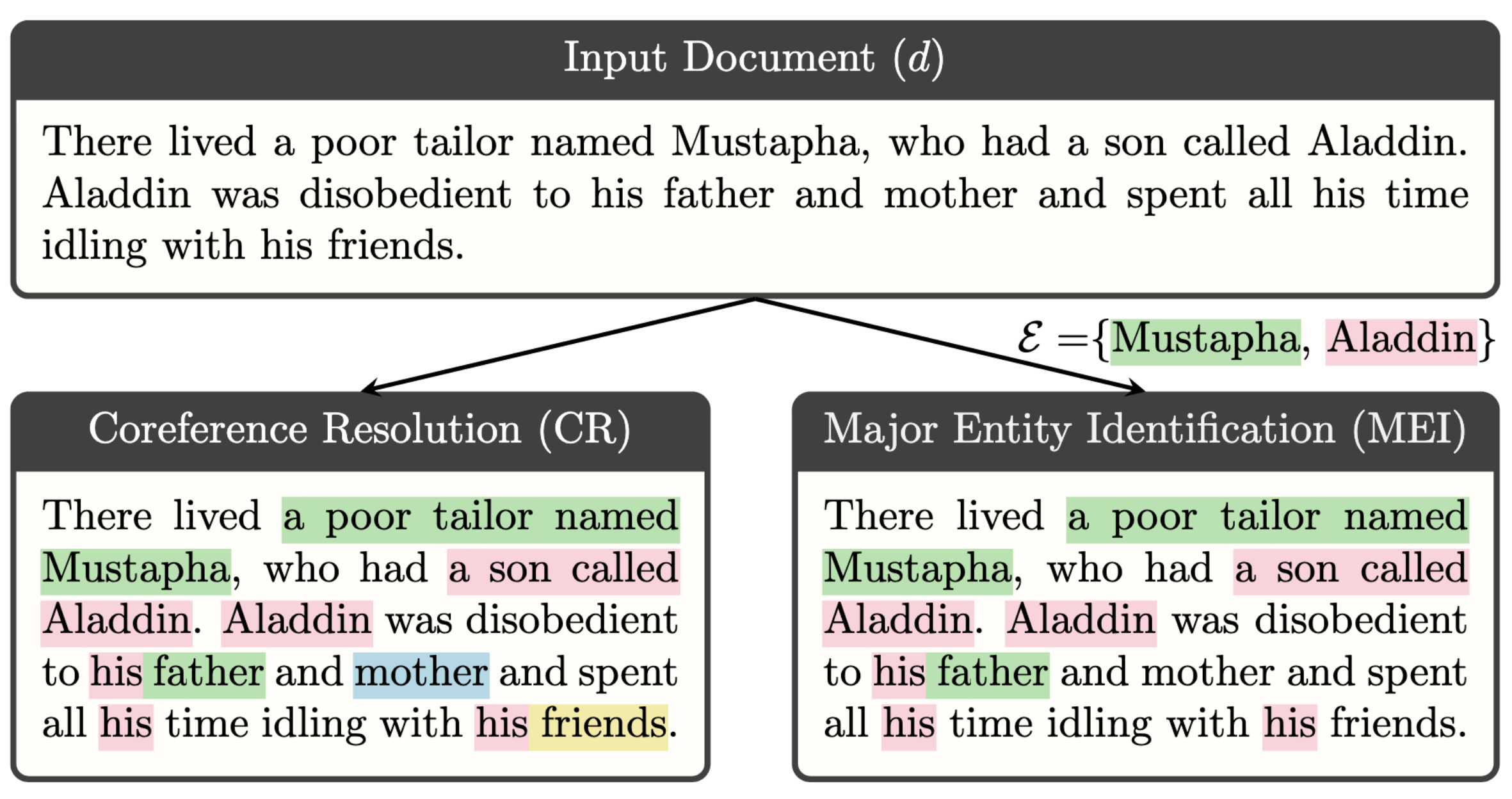

Major Entity Identification: A Generalizable Alternative to Coreference ResolutionIn Empirical Methods in Natural Language Processing (EMNLP), Nov 2024Note: Main conference

Major Entity Identification: A Generalizable Alternative to Coreference ResolutionIn Empirical Methods in Natural Language Processing (EMNLP), Nov 2024Note: Main conferenceThe limited generalization of coreference resolution (CR) models has been a major bottleneck in the task’s broad application. Prior work has identified annotation differences, especially for mention detection, as one of the main reasons for the generalization gap and proposed using additional annotated target domain data. Rather than relying on this additional annotation, we propose an alternative formulation of the CR task, Major Entity Identification (MEI), where we: (a) assume the target entities to be specified in the input, and (b) limit the task to only the frequent entities. Through extensive experiments, we demonstrate that MEI models generalize well across domains on multiple datasets with supervised models and LLM-based few-shot prompting. Additionally, the MEI task fits the classification framework, which enables the use of classification-based metrics that are more robust than the current CR metrics. Finally, MEI is also of practical use as it allows a user to search for all mentions of a particular entity or a group of entities of interest.

@inproceedings{Manikantan2024_MEI, author = {Manikantan, Kawshik and Toshniwal, Shubham and Tapaswi, Makarand and Gandhi, Vineet}, title = {{Major Entity Identification: A Generalizable Alternative to Coreference Resolution}}, year = {2024}, booktitle = {Empirical Methods in Natural Language Processing (EMNLP)}, month = nov, doi = {10.18653/v1/2024.emnlp-main.652} } - [W12]

Detect, Describe, Discriminate: Moving Beyond VQA for MLLM EvaluationManu Gaur , Darshan Singh, and Makarand TapaswiIn ECCV Workshop on Emergent Visual Abilities and Limits of Foundation Models (EVAL-FoMo), Sep 2024

Detect, Describe, Discriminate: Moving Beyond VQA for MLLM EvaluationManu Gaur , Darshan Singh, and Makarand TapaswiIn ECCV Workshop on Emergent Visual Abilities and Limits of Foundation Models (EVAL-FoMo), Sep 2024Visual Question Answering (VQA) with multiple choice questions enables a vision-centric evaluation of Multimodal Large Language Models (MLLMs). Although it reliably checks the existence of specific visual abilities, it is easier for the model to select an answer from multiple choices (VQA evaluation) than to generate the answer itself. In this work, we offer a novel perspective: we evaluate how well an MLLM understands a specific visual concept by its ability to uniquely describe two extremely similar images that differ only in the targeted visual concept. Specifically, we assess the ability of MLLMs to capture specific points of visual differences using self-retrieval, i.e. by retrieving the target image using its generated caption against the other image in the pair serving as the distractor. We curate 247 highly similar image pairs as part of the D3 benchmark. For each image pair, the model is prompted to: (i) Detect a specific visual difference, and (ii) Describe the target image uniquely such that it (iii) Discriminates the target image from the distractor. Self-retrieval within D3 enables white-box evaluation across six different visual patterns, revealing that current models struggle to independently discern fine-grained visual differences, with open-source models failing to outperform random guess.

@inproceedings{Manu2024_D3Benchmark, author = {Gaur, Manu and Singh, Darshan and Tapaswi, Makarand}, title = {{Detect, Describe, Discriminate: Moving Beyond VQA for MLLM Evaluation}}, year = {2024}, booktitle = {ECCV Workshop on Emergent Visual Abilities and Limits of Foundation Models (EVAL-FoMo)}, month = sep } - [W11]

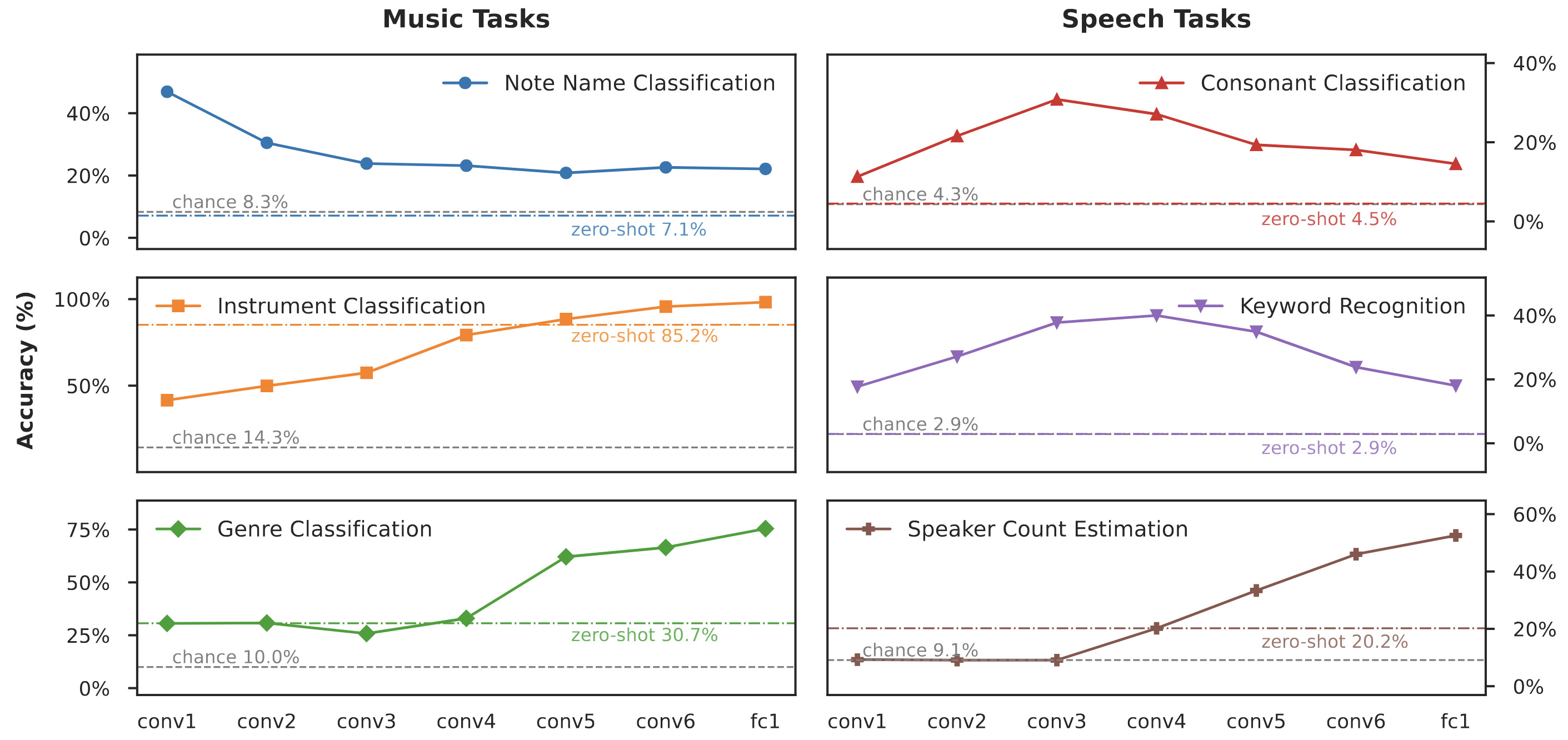

Localizing Auditory Concepts in CNNsPratyaksh Gautam, Makarand Tapaswi*, and Vinoo Alluri*In ICML Workshop on Mechanistic Interpretability (ICMLW-MI), Jul 2024

Localizing Auditory Concepts in CNNsPratyaksh Gautam, Makarand Tapaswi*, and Vinoo Alluri*In ICML Workshop on Mechanistic Interpretability (ICMLW-MI), Jul 2024Deep learning models are capable of complex auditory processing tasks such as keyword spotting, genre classification, and audio captioning, yet remain opaque. While several works have explored interpretability of neural networks for computer vision and natural language processing, the audio modality has been largely ignored. In this paper, we study the behavior of the audio CNN encoder used in the contrastively trained language-audio model, CLAP. In the domain of music and human speech sounds, we localize and identify the layers of the network that perform well on tasks of varying complexity, sometimes even outperforming the model’s final outputs. Digging deeper, we also localize specific dataset classes to neuron clusters within a layer and analyze a cluster’s contribution to the model’s discriminability for that class. To perform these analyses, we propose an automated framework that can leverage a small dataset of a few thousand samples to evaluate and score neuron clusters for their role in classification. Our findings provide insights into the hierarchical nature of representations in audio CNNs, paving the way for improved interpretability of audio model.

@inproceedings{Gautam2024_ICMLwMI-CLAP, author = {Gautam, Pratyaksh and Tapaswi, Makarand and Alluri, Vinoo}, title = {{Localizing Auditory Concepts in CNNs}}, year = {2024}, booktitle = {ICML Workshop on Mechanistic Interpretability (ICMLW-MI)}, month = jul } - [C40]

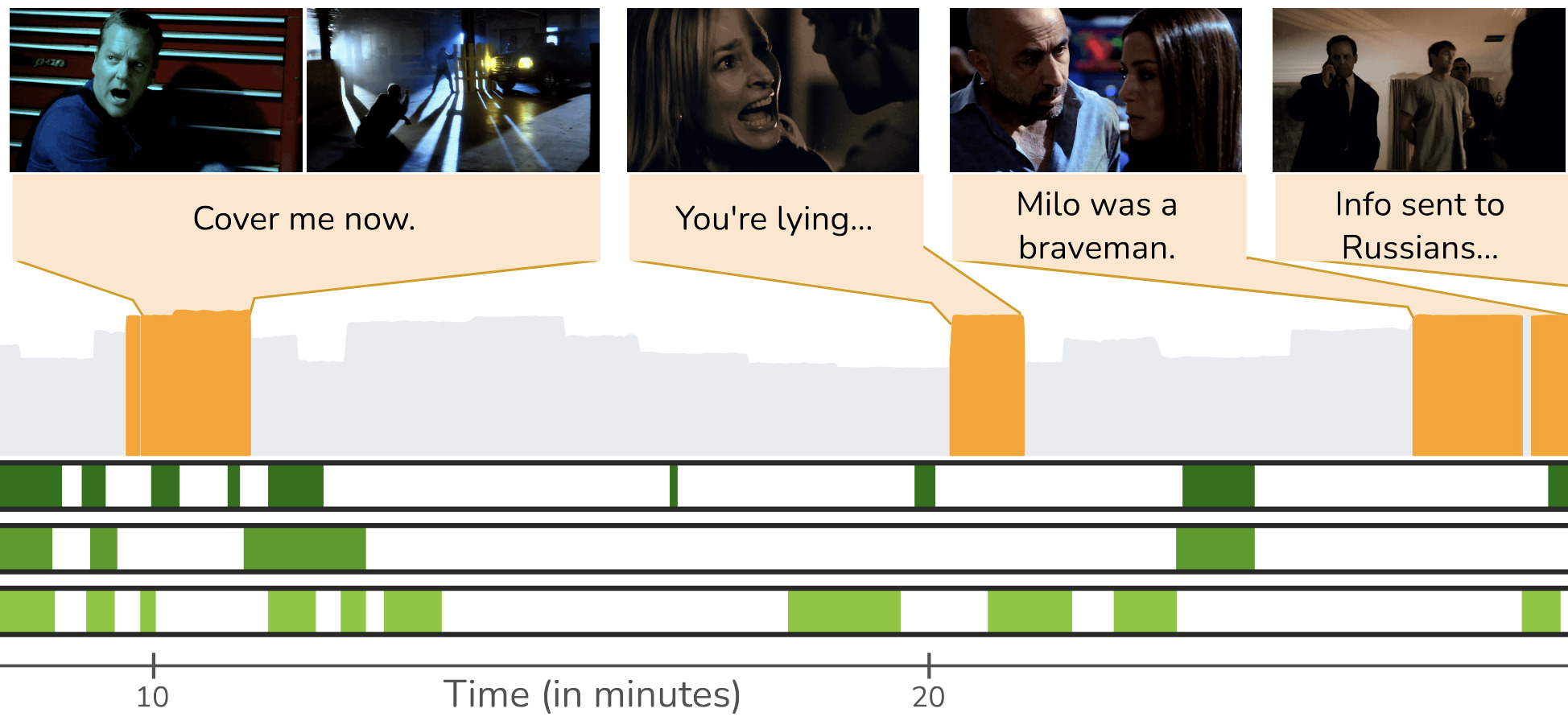

"Previously on ..." From Recaps to Story SummarizationAditya Kumar Singh, Dhruv Srivastava, and Makarand TapaswiIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2024

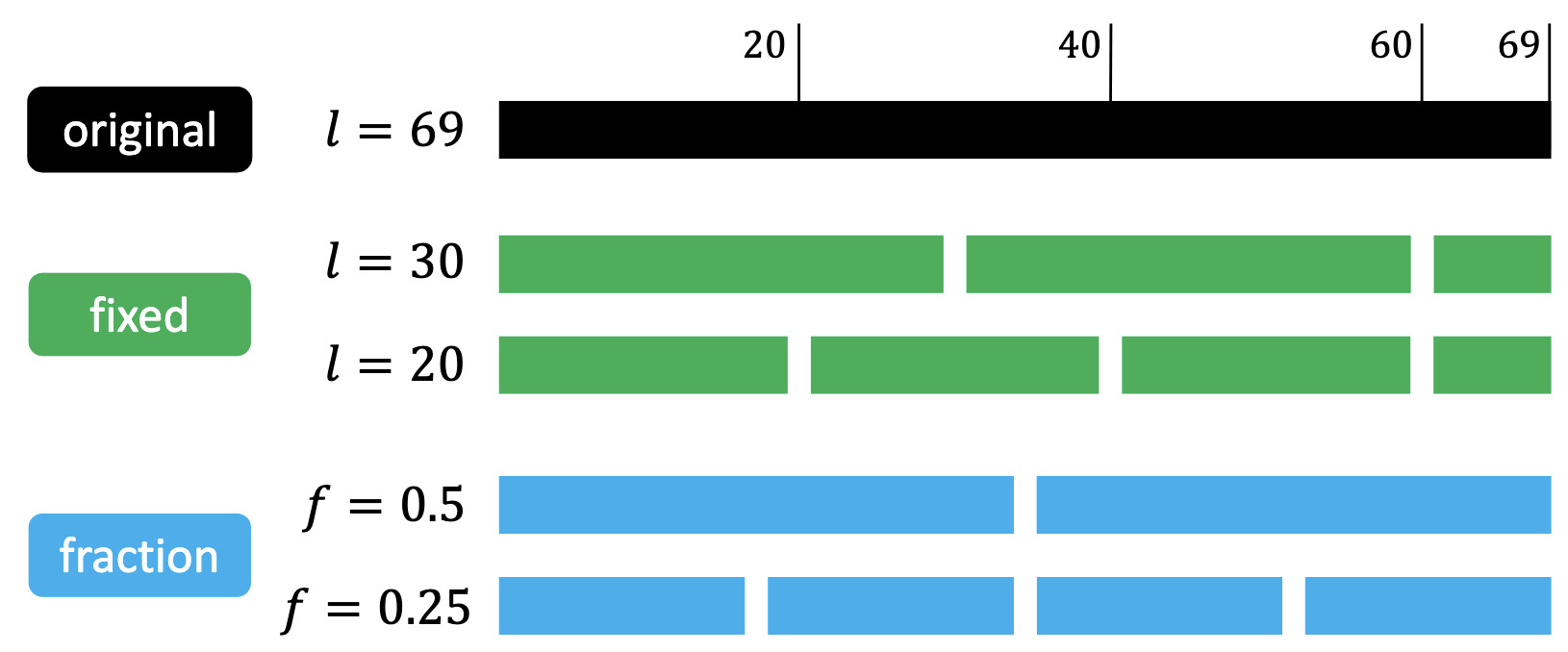

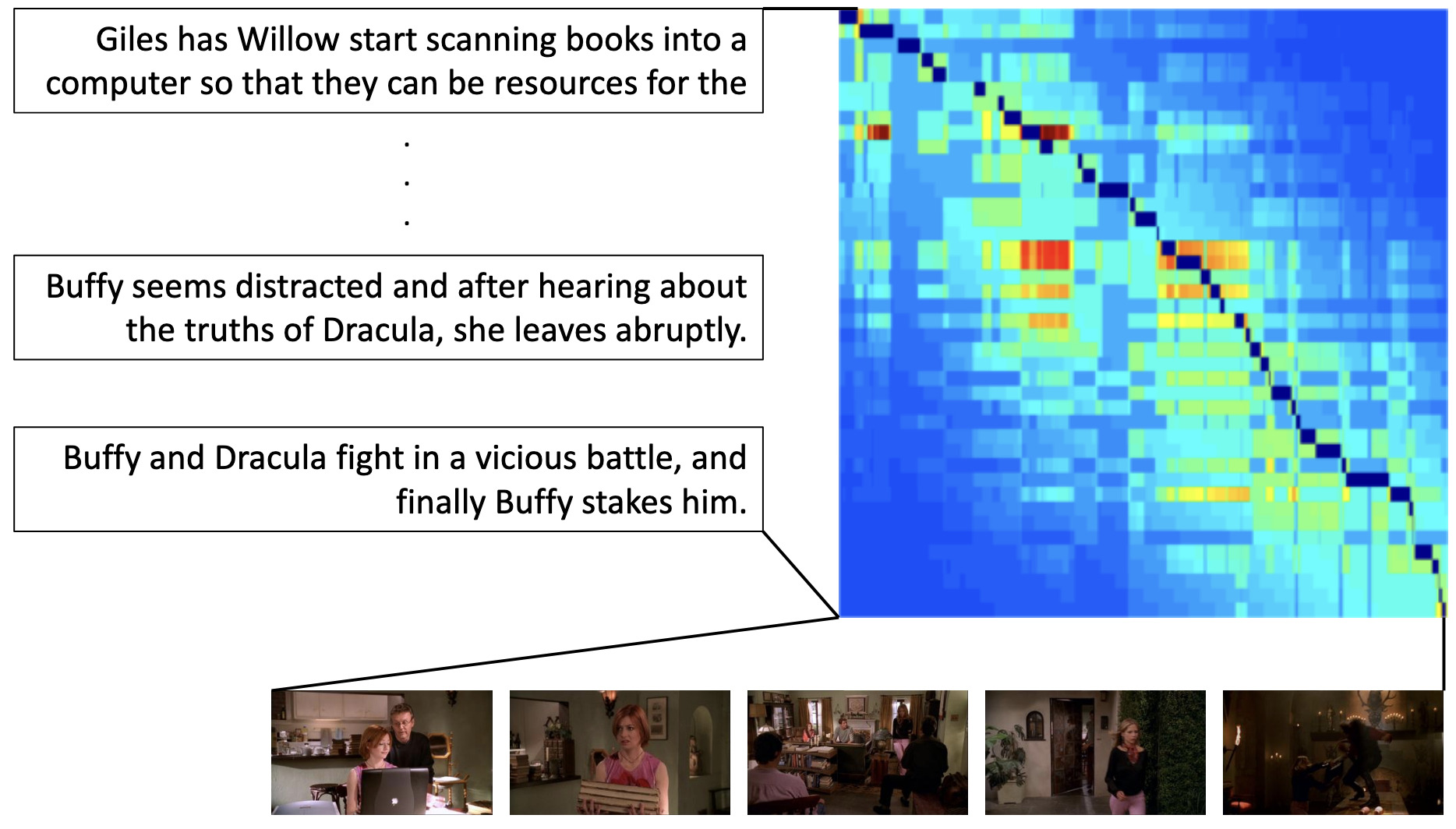

"Previously on ..." From Recaps to Story SummarizationAditya Kumar Singh, Dhruv Srivastava, and Makarand TapaswiIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2024We introduce multimodal story summarization by leveraging TV episode recaps - short video sequences interweaving key story moments from previous episodes to bring viewers up to speed. We propose PlotSnap, a dataset featuring two crime thriller TV shows with rich recaps and long episodes of 40 minutes. Story summarization labels are unlocked by matching recap shots to corresponding sub-stories in the episode. We propose a hierarchical model TaleSumm that processes entire episodes by creating compact shot and dialog representations, and predicts importance scores for each video shot and dialog utterance by enabling interactions between local story groups. Unlike traditional summarization, our method extracts multiple plot points from long videos. We present a thorough evaluation on story summarization, including promising cross-series generalization. TaleSumm also shows good results on classic video summarization benchmarks.

@inproceedings{Singh2024_Recaps, author = {Singh, Aditya Kumar and Srivastava, Dhruv and Tapaswi, Makarand}, title = {{"Previously on ..." From Recaps to Story Summarization}}, year = {2024}, booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)}, month = jun, doi = {10.1109/CVPR52733.2024.01294} } - [C39]

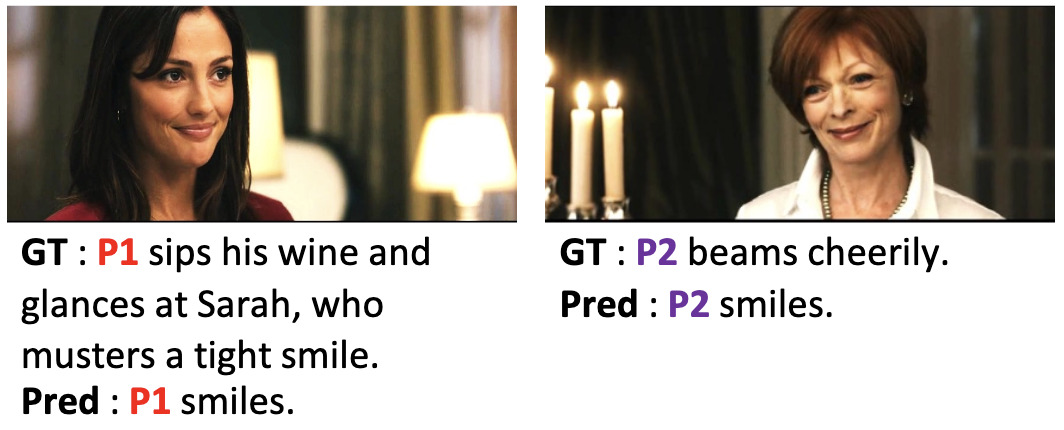

MICap: A Unified Model for Identity-aware Movie DescriptionsIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2024

MICap: A Unified Model for Identity-aware Movie DescriptionsIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2024Characters are an important aspect of any storyline and identifying and including them in descriptions is necessary for story understanding. While previous work has largely ignored identity and generated captions with someone (anonymized names), recent work formulates id-aware captioning as a fill-in-the-blanks (FITB) task, where, given a caption with blanks, the goal is to predict person id labels. However, to predict captions with ids, a two-stage approach is required: first predict captions with someone, then fill in identities. In this work, we present a new single stage approach that can seamlessly switch between id-aware caption generation or FITB when given a caption with blanks. Our model, Movie-Identity Captioner (MICap), uses a shared auto-regressive decoder that benefits from training with FITB and full-caption generation objectives, while the encoder can benefit from or disregard captions with blanks as input. Another challenge with id-aware captioning is the lack of a metric to capture subtle differences between person ids. To this end, we introduce iSPICE, a caption evaluation metric that focuses on identity tuples created through intermediate scene graphs. We evaluate MICap on Large-Scale Movie Description Challenge (LSMDC), where we show a 4.2% improvement in FITB accuracy, and a 1-2% bump in classic captioning metrics.

@inproceedings{Haran2024_MICap, author = {Raajesh, Haran and Desanur, Naveen Reddy and Khan, Zeeshan and Tapaswi, Makarand}, title = {{MICap: A Unified Model for Identity-aware Movie Descriptions}}, year = {2024}, booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)}, month = jun, doi = {10.1109/CVPR52733.2024.01329} } - [W10]

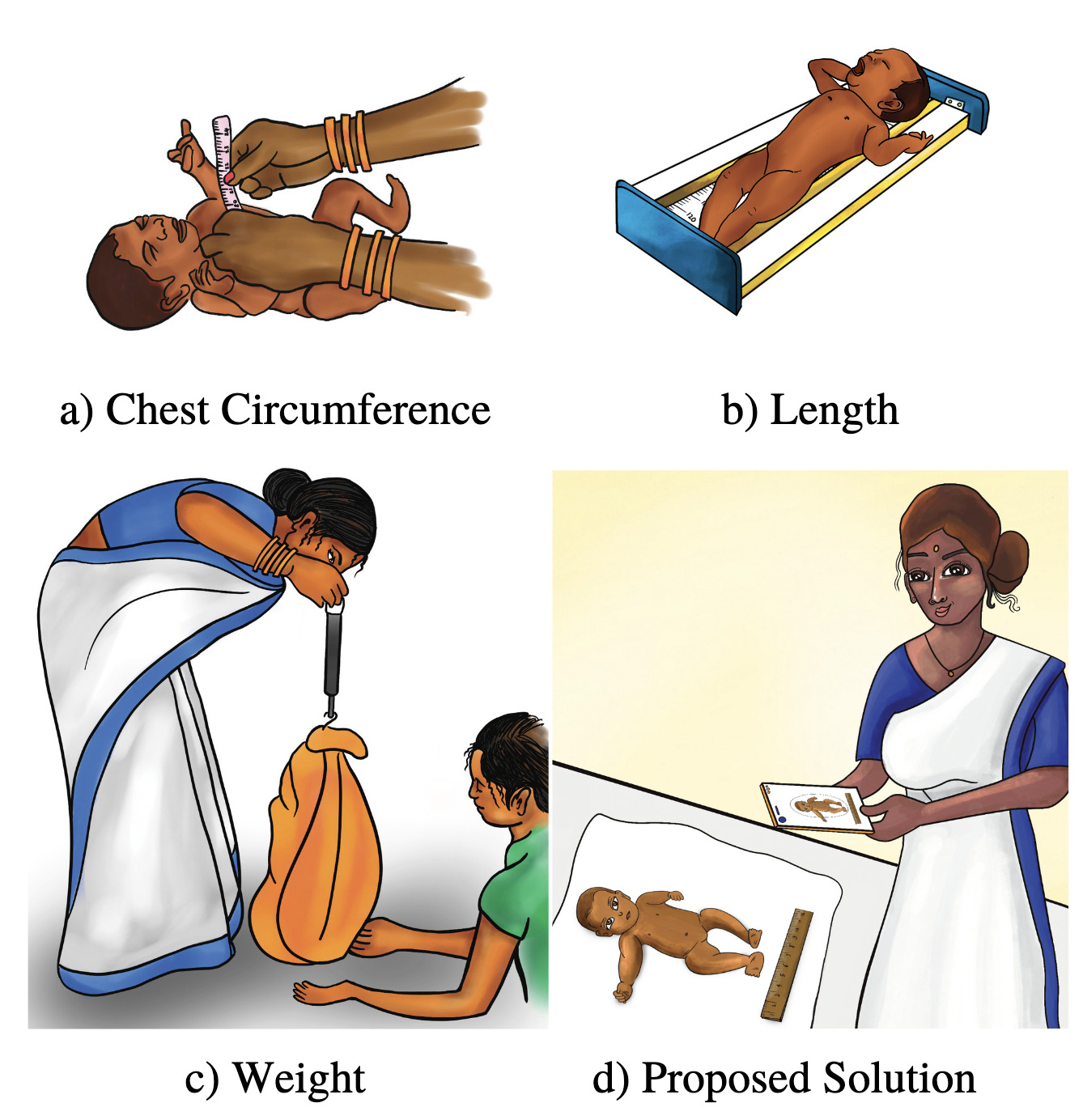

NurtureNet: A Multi-task Video-based Approach for Newborn AnthropometryYash Khandelwal, Mayur Arvind , Sriram Kumar , Ashish Gupta, Sachin Kumar Danisetty, Piyush Bagad, Anish Madan, Mayank Lunayach, Aditya Annavajjala, Abhishek Maiti, Sansiddh Jain, Aman Dalmia, Namrata Deka, Jerome White, Jigar Doshi, Angjoo Kanazawa, Rahul Panicker, Alpan Raval, Srinivas Rana, and Makarand TapaswiIn CVPR Worskhop on Computer Vision for Physiological Measurements (CVPM), Jun 2024🏆 Best Paper Award

NurtureNet: A Multi-task Video-based Approach for Newborn AnthropometryYash Khandelwal, Mayur Arvind , Sriram Kumar , Ashish Gupta, Sachin Kumar Danisetty, Piyush Bagad, Anish Madan, Mayank Lunayach, Aditya Annavajjala, Abhishek Maiti, Sansiddh Jain, Aman Dalmia, Namrata Deka, Jerome White, Jigar Doshi, Angjoo Kanazawa, Rahul Panicker, Alpan Raval, Srinivas Rana, and Makarand TapaswiIn CVPR Worskhop on Computer Vision for Physiological Measurements (CVPM), Jun 2024🏆 Best Paper AwardMalnutrition among newborns is a top public health concern in developing countries. Identification and subsequent growth monitoring are key to successful interventions. However, this is challenging in rural communities where health systems tend to be inaccessible and under-equipped, with poor adherence to protocol. Our goal is to equip health workers and public health systems with a solution for contactless newborn anthropometry in the community. We propose NurtureNet, a multi-task model that fuses visual information (a video taken with a low-cost smartphone) with tabular inputs to regress multiple anthropometry estimates including weight, length, head circumference, and chest circumference. We show that visual proxy tasks of segmentation and keypoint prediction further improve performance. We establish the efficacy of the model through several experiments and achieve a relative error of 3.9% and mean absolute error of 114.3 g for weight estimation. Model compression to 15 MB also allows offline deployment to low-cost smartphones.

@inproceedings{WIAI2024_CVPMNurtureNet, author = {Khandelwal, Yash and Arvind, Mayur and Kumar, Sriram and Gupta, Ashish and Danisetty, Sachin Kumar and Bagad, Piyush and Madan, Anish and Lunayach, Mayank and Annavajjala, Aditya and Maiti, Abhishek and Jain, Sansiddh and Dalmia, Aman and Deka, Namrata and White, Jerome and Doshi, Jigar and Kanazawa, Angjoo and Panicker, Rahul and Raval, Alpan and Rana, Srinivas and Tapaswi, Makarand}, title = {{NurtureNet: A Multi-task Video-based Approach for Newborn Anthropometry}}, year = {2024}, booktitle = {CVPR Worskhop on Computer Vision for Physiological Measurements (CVPM)}, month = jun, doi = {10.1109/CVPRW63382.2024.00038} } - [P2]

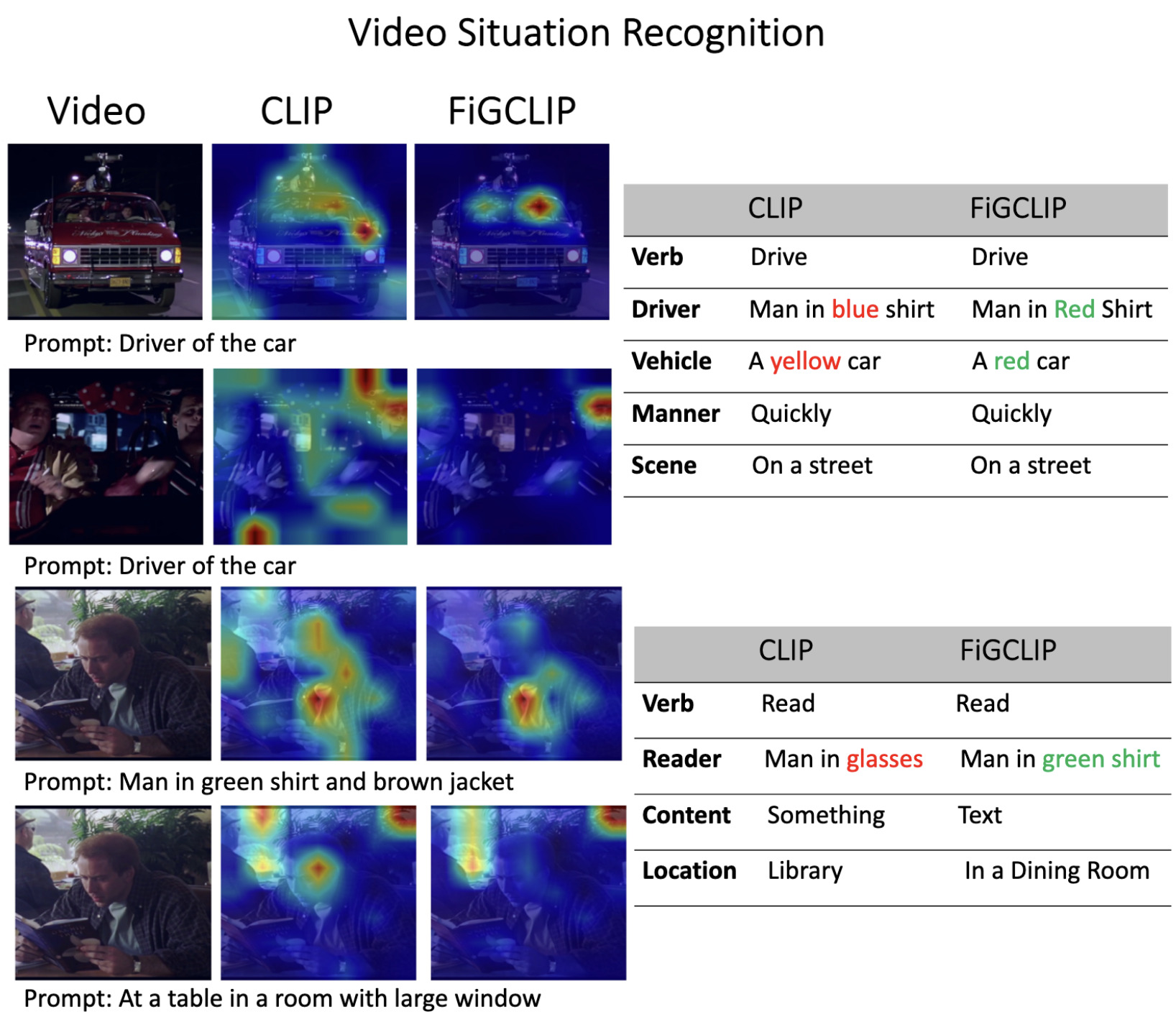

FiGCLIP: Fine-Grained CLIP Adaptation via Densely Annotated VideosDarshan Singh, Zeeshan Khan, and Makarand TapaswiarXiv:2401.07669, Jan 2024

FiGCLIP: Fine-Grained CLIP Adaptation via Densely Annotated VideosDarshan Singh, Zeeshan Khan, and Makarand TapaswiarXiv:2401.07669, Jan 2024[coming soon]

@article{Darshan2024_FiGCLIP, author = {Singh, Darshan and Khan, Zeeshan and Tapaswi, Makarand}, title = {{FiGCLIP: Fine-Grained CLIP Adaptation via Densely Annotated Videos}}, year = {2024}, month = jan, journal = {arXiv:2401.07669} }

2023

- [C38]

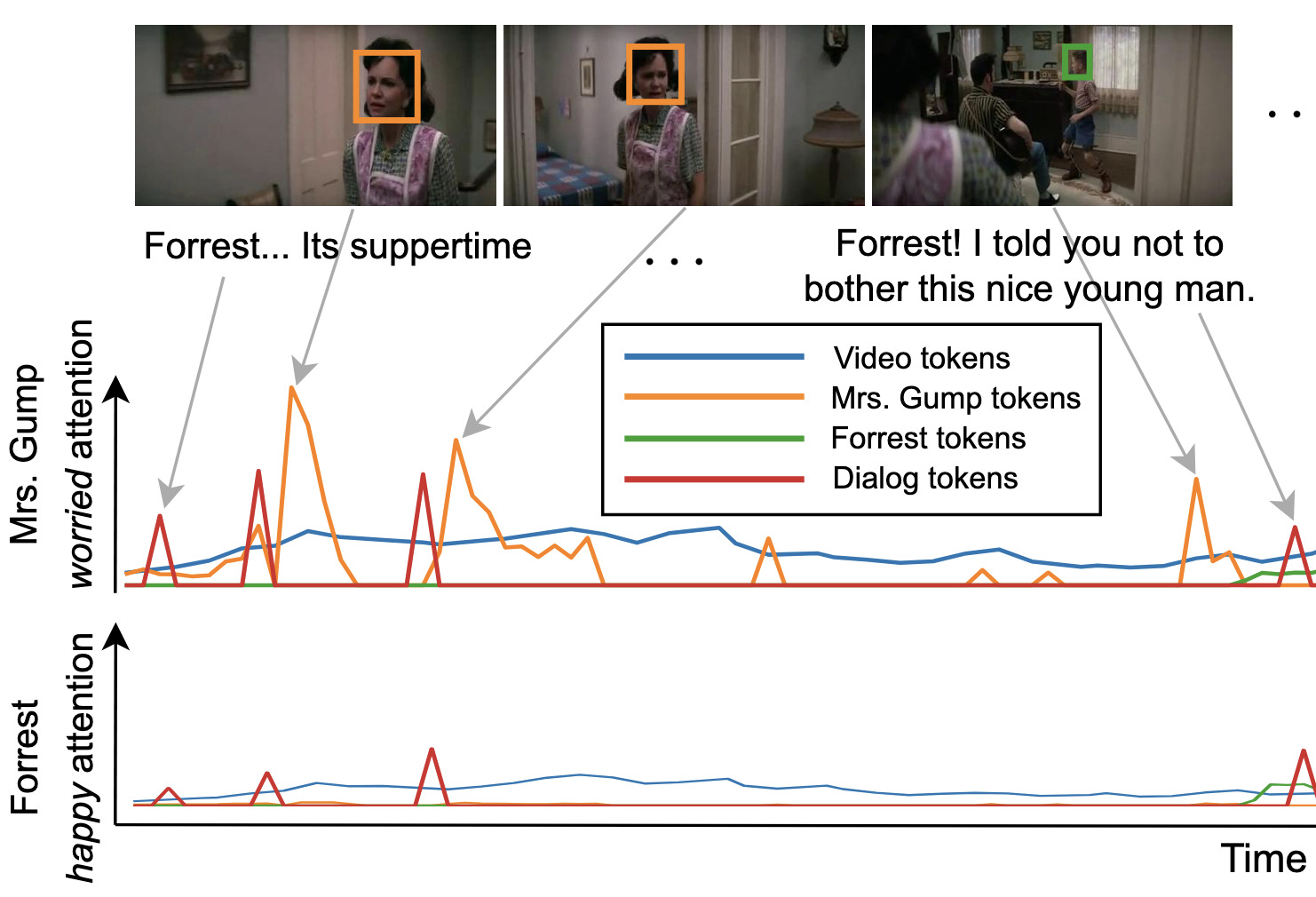

How you feelin’? Learning Emotions and Mental States in Movie ScenesDhruv Srivastava, Aditya Kumar Singh, and Makarand TapaswiIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2023

How you feelin’? Learning Emotions and Mental States in Movie ScenesDhruv Srivastava, Aditya Kumar Singh, and Makarand TapaswiIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2023Movie story analysis requires understanding characters’ emotions and mental states. Towards this goal, we formulate emotion understanding as predicting a diverse and multi-label set of emotions at the level of a movie scene and for each character. We propose EmoTx, a multimodal Transformer-based architecture that ingests videos, multiple characters, and dialog utterances to make joint predictions. By leveraging annotations from the MovieGraphs dataset, we aim to predict classic emotions (e.g. happy, angry) and other mental states (e.g. honest, helpful). We conduct experiments on the most frequently occurring 10 and 25 labels, and a mapping that clusters 181 labels to 26. Ablation studies and comparison against adapted state-of-the-art emotion recognition approaches shows the effectiveness of EmoTx. Analyzing EmoTx’s self-attention scores reveals that expressive emotions often look at character tokens while other mental states rely on video and dialog cues.

@inproceedings{Srivastava2023_EmoTx, author = {Srivastava, Dhruv and Singh, Aditya Kumar and Tapaswi, Makarand}, title = {{How you feelin'? Learning Emotions and Mental States in Movie Scenes}}, year = {2023}, booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)}, month = jun, doi = {10.1109/CVPR52729.2023.00248} } - [C37]

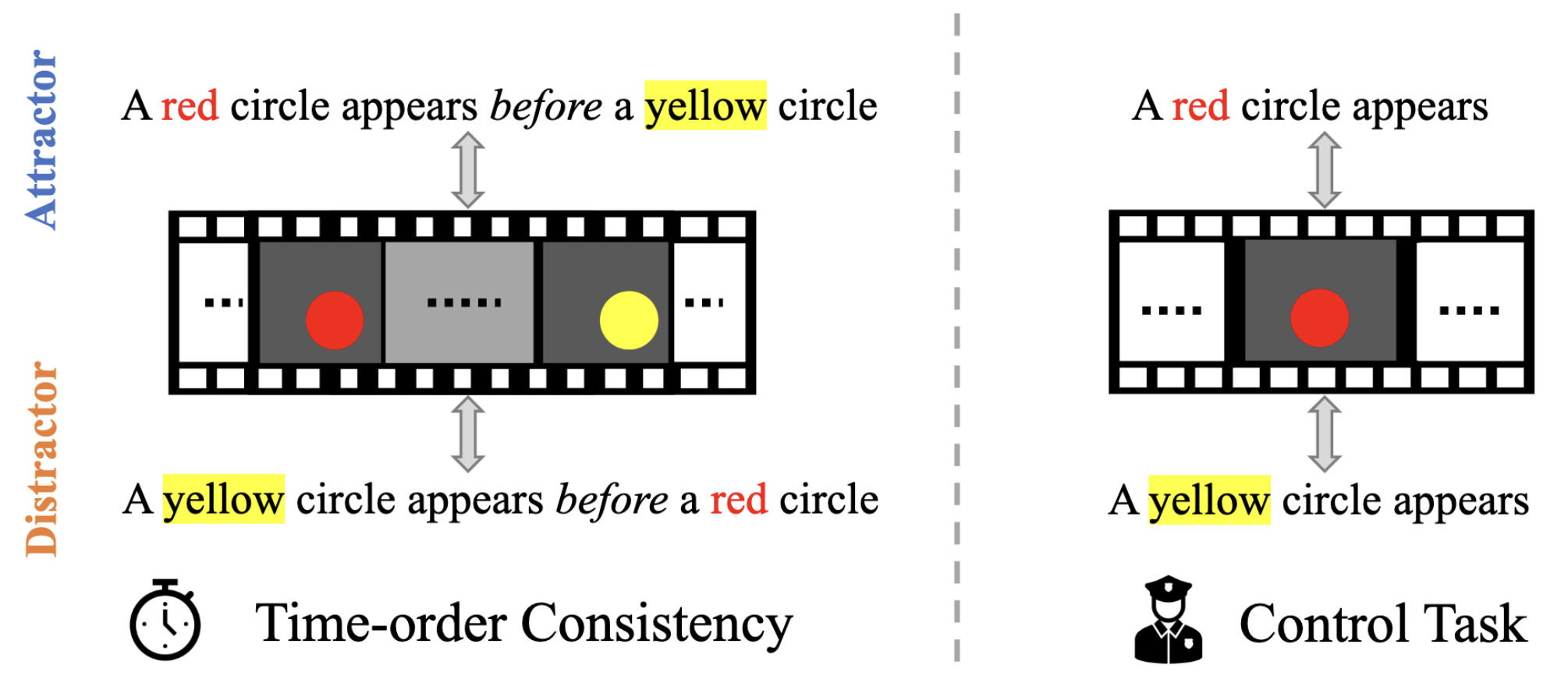

Test of Time: Instilling Video-Language Models with a Sense of TimePiyush Bagad, Makarand Tapaswi, and Cees G M SnoekIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2023

Test of Time: Instilling Video-Language Models with a Sense of TimePiyush Bagad, Makarand Tapaswi, and Cees G M SnoekIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2023Modeling and understanding time remains a challenge in contemporary video understanding models. With language emerging as a key driver towards powerful generalization, it is imperative for foundational video-language models to have a sense of time. In this paper, we consider a specific aspect of temporal understanding: consistency of time order as elicited by before/after relations. We establish that six existing video-language models struggle to understand even such simple temporal relations. We then question whether it is feasible to equip these foundational models with temporal awareness without re-training them from scratch. Towards this, we propose a temporal adaptation recipe on top of one such model, VideoCLIP, based on post-pretraining on a small amount of video-text data. We conduct a zero-shot evaluation of the adapted models on six datasets for three downstream tasks which require a varying degree of time awareness. We observe encouraging performance gains especially when the task needs higher time awareness. Our work serves as a first step towards probing and instilling a sense of time in existing video-language models without the need for data and compute-intense training from scratch.

@inproceedings{Bagad2023_TestOfTime, author = {Bagad, Piyush and Tapaswi, Makarand and Snoek, Cees G M}, title = {{Test of Time: Instilling Video-Language Models with a Sense of Time}}, year = {2023}, booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)}, month = jun, doi = {10.1109/CVPR52729.2023.00247} } - [W9]

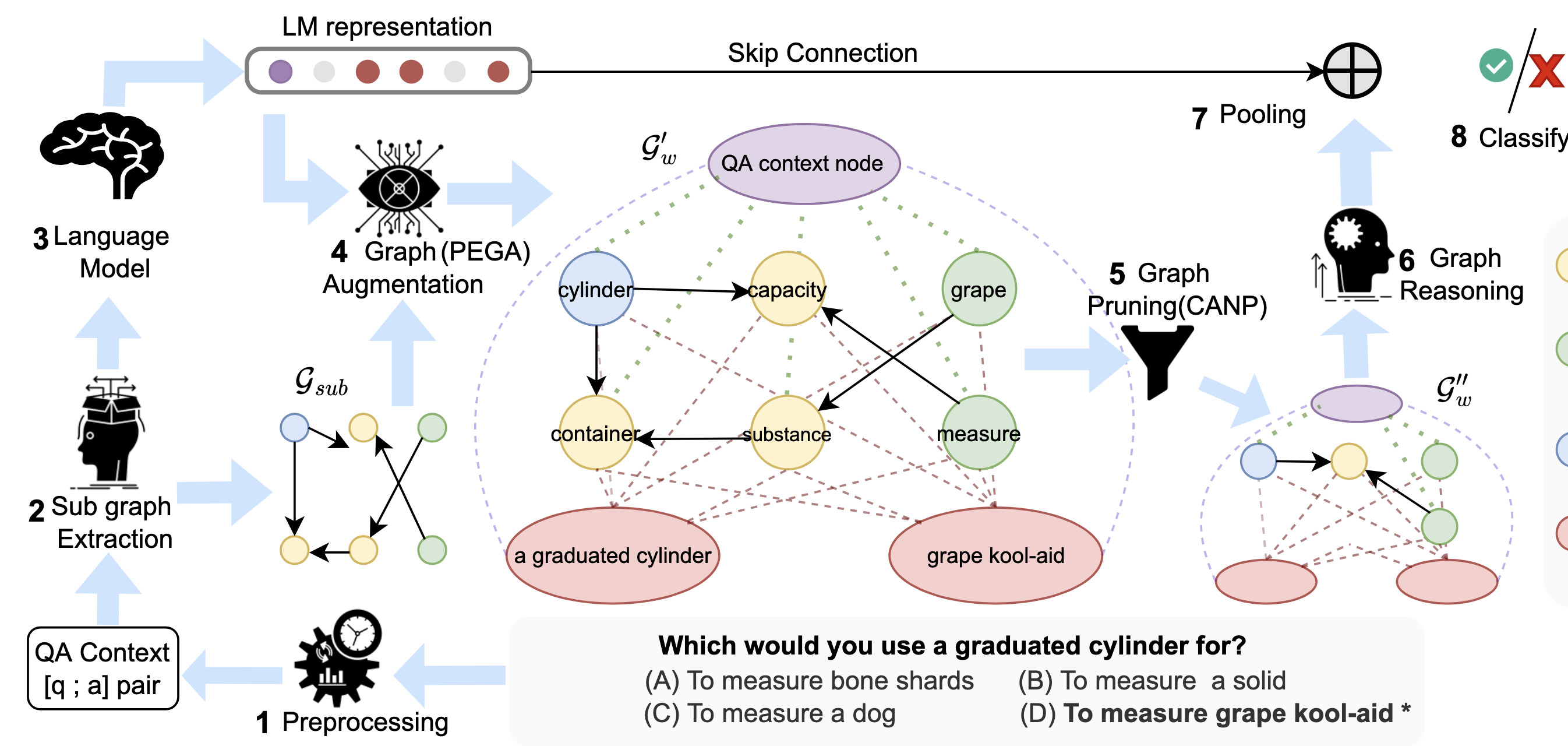

GrapeQA: GRaph Augmentation and Pruning to Enhance Question-AnsweringIn WWW Workshop on Natural Language Processing for Knowledge Graph Construction (NLP4KGc), May 2023

GrapeQA: GRaph Augmentation and Pruning to Enhance Question-AnsweringIn WWW Workshop on Natural Language Processing for Knowledge Graph Construction (NLP4KGc), May 2023Commonsense question-answering (QA) methods combine the power of pre-trained Language Models (LM) with the reasoning provided by Knowledge Graphs (KG). A typical approach collects nodes relevant to the QA pair from a KG to form a Working Graph (WG) followed by reasoning using Graph Neural Networks(GNNs). This faces two major challenges: (i) it is difficult to capture all the information from the QA in the WG, and (ii) the WG contains some irrelevant nodes from the KG. To address these, we propose GrapeQA with two simple improvements on the WG: (i) Prominent Entities for Graph Augmentation identifies relevant text chunks from the QA pair and augments the WG with corresponding latent representations from the LM, and (ii) Context-Aware Node Pruning removes nodes that are less relevant to the QA pair. We evaluate our results on OpenBookQA, CommonsenseQA and MedQA-USMLE and see that GrapeQA shows consistent improvements over its LM + KG predecessor (QA-GNN in particular) and large improvements on OpenBookQA.

@inproceedings{Taunk2023_GrapeQA, author = {Taunk, Dhaval and Khanna, Lakshya and Kandru, Pavan and Varma, Vasudeva and Sharma, Charu and Tapaswi, Makarand}, title = {{GrapeQA: GRaph Augmentation and Pruning to Enhance Question-Answering}}, year = {2023}, booktitle = {WWW Workshop on Natural Language Processing for Knowledge Graph Construction (NLP4KGc)}, month = may, doi = {10.1145/3543873.3587651} } - [C36]

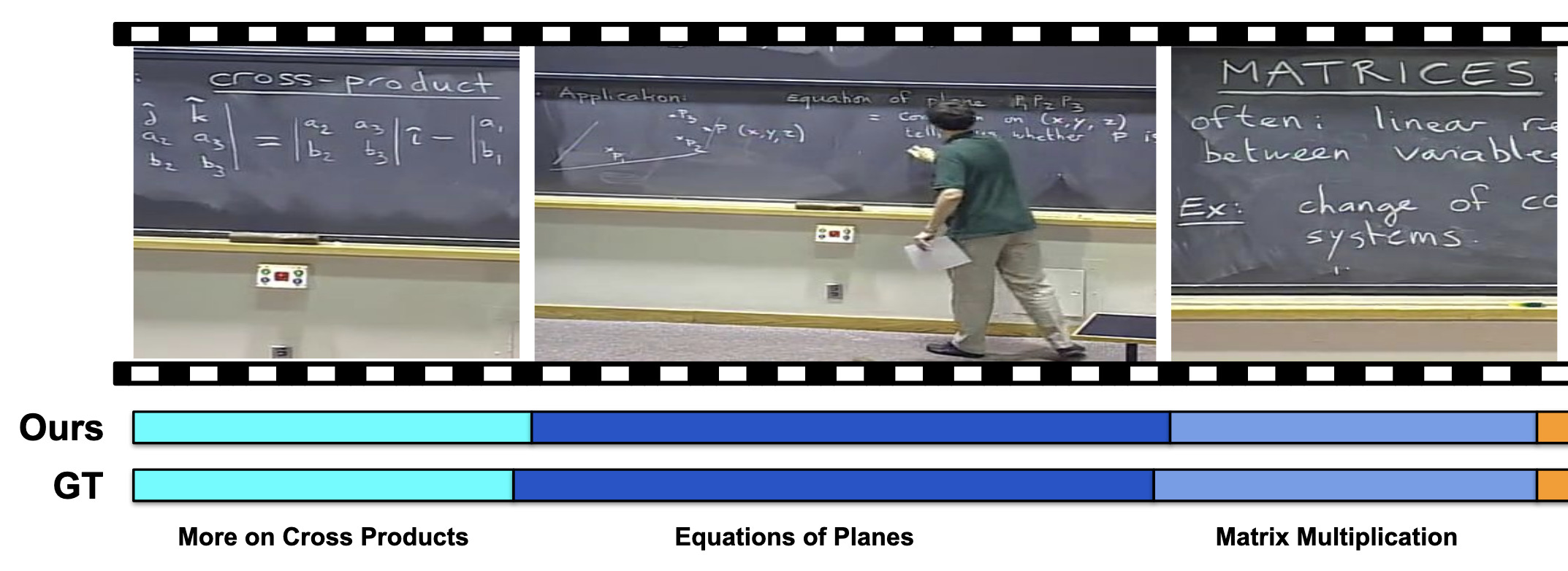

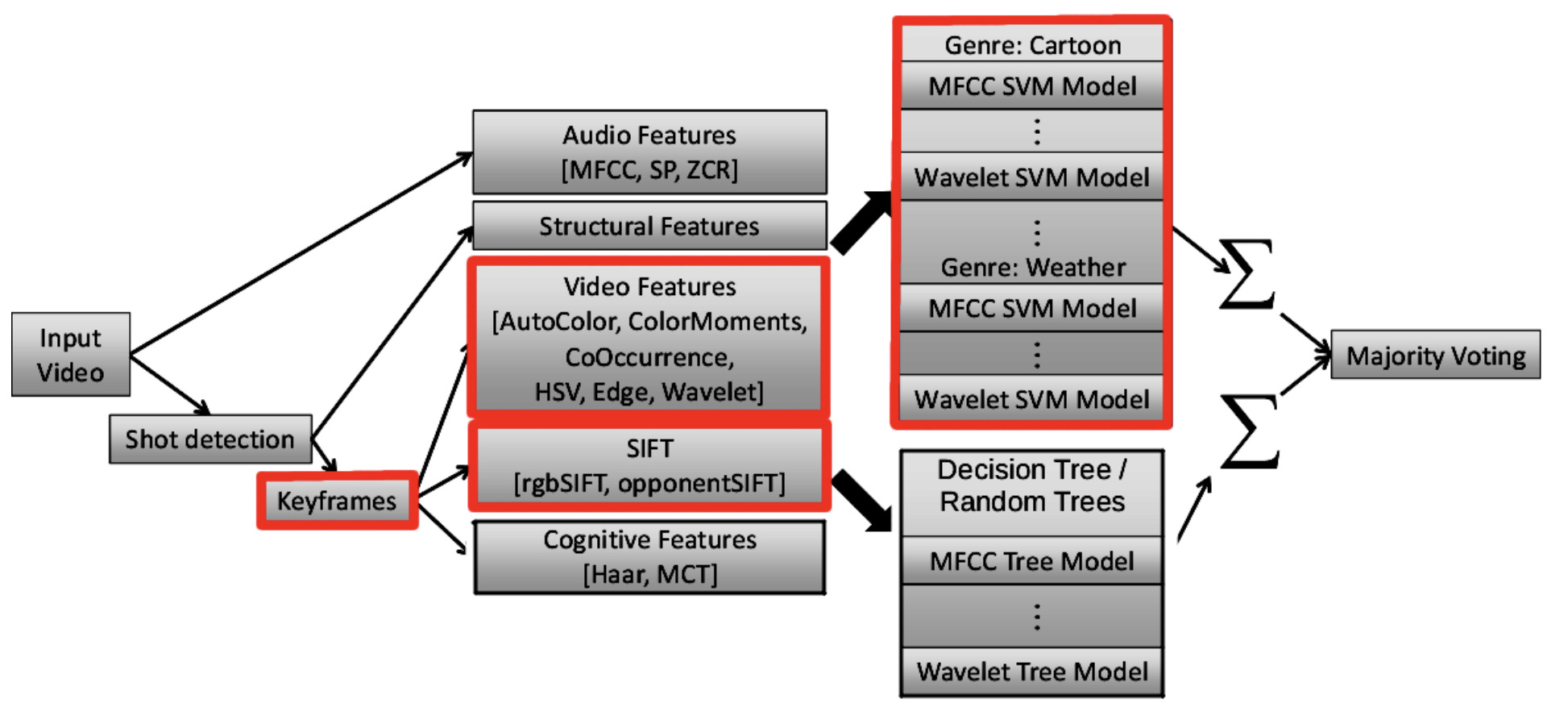

Unsupervised Audio-Visual Lecture SegmentationIn Winter Conference on Applications of Computer Vision (WACV), Jan 2023

Unsupervised Audio-Visual Lecture SegmentationIn Winter Conference on Applications of Computer Vision (WACV), Jan 2023Over the last decade, online lecture videos have become increasingly popular and have experienced a meteoric rise during the pandemic. However, video-language research has primarily focused on instructional videos or movies, and tools to help students navigate the growing online lectures are lacking. Our first contribution is to facilitate research in the educational domain by introducing AVLectures, a large-scale dataset consisting of 86 courses with over 2,350 lectures covering various STEM subjects. Each course contains video lectures, transcripts, OCR outputs for lecture frames, and optionally lecture notes, slides, assignments, and related educational content that can inspire a variety of tasks. Our second contribution is introducing video lecture segmentation that splits lectures into bite-sized topics. Lecture clip representations leverage visual, textual, and OCR cues and are trained on a pretext self-supervised task of matching the narration with the temporally aligned visual content. We formulate lecture segmentation as an unsupervised task and use these representations to generate segments using a temporally consistent 1-nearest neighbor algorithm, TW-FINCH. We evaluate our method on 15 courses and compare it against various visual and textual baselines, outperforming all of them. Our comprehensive ablation studies also identify the key factors driving the success of our approach.

@inproceedings{Singh2023_AVLectures, author = {Singh, Darshan and Gupta, Anchit and Jawahar, C V and Tapaswi, Makarand}, title = {{Unsupervised Audio-Visual Lecture Segmentation}}, year = {2023}, booktitle = {Winter Conference on Applications of Computer Vision (WACV)}, month = jan, doi = {10.1109/WACV56688.2023.00520} }

2022

- [C35]

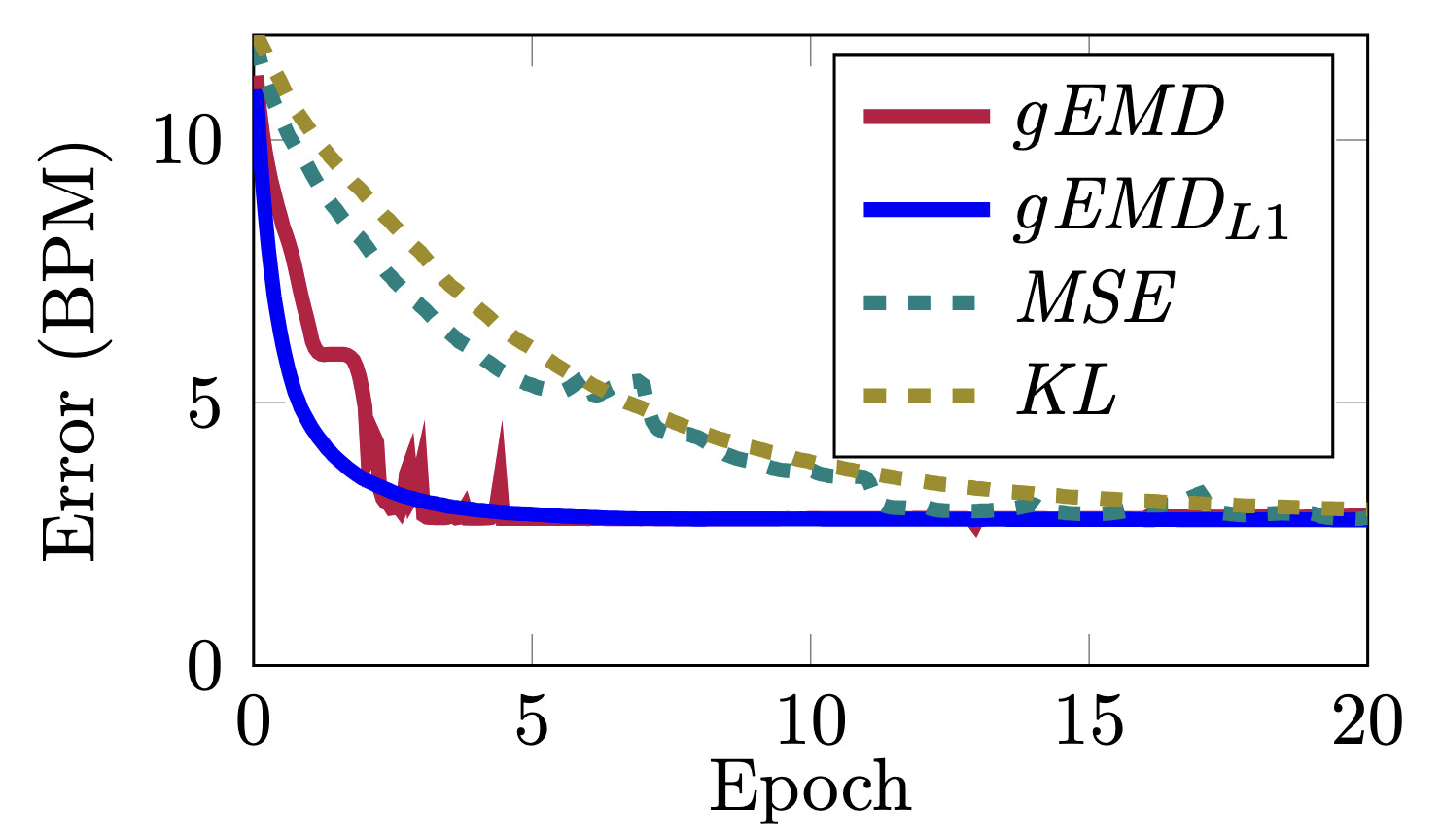

Sonus Texere! Automated Dense Soundtrack Construction for Books using Movie AdaptationsJaidev Shriram, Makarand Tapaswi, and Vinoo AlluriIn International Society for Music Information Retrieval Conference (ISMIR), Dec 2022🏆 Brave New Idea Award

Sonus Texere! Automated Dense Soundtrack Construction for Books using Movie AdaptationsJaidev Shriram, Makarand Tapaswi, and Vinoo AlluriIn International Society for Music Information Retrieval Conference (ISMIR), Dec 2022🏆 Brave New Idea AwardReading, much like music listening, is an immersive experience that transports readers while taking them on an emotional journey. Listening to complementary music has the potential to amplify the reading experience, especially when the music is stylistically cohesive and emotionally relevant. In this paper, we propose the first fully automatic method to build a dense soundtrack for books, which can play high-quality instrumental music for the entirety of the reading duration. Our work employs a unique text processing and music weaving pipeline that determines the context and emotional composition of scenes in a chapter. This allows our method to identify and play relevant excerpts from the soundtrack of the book’s movie adaptation. By relying on the movie composer’s craftsmanship, our book soundtracks include expert-made motifs and other scene-specific musical characteristics. We validate the design decisions of our approach through a perceptual study. Our readers note that the book soundtrack greatly enhanced their reading experience, due to high immersiveness granted via uninterrupted and style-consistent music, and a heightened emotional state attained via high precision emotion and scene context recognition.

@inproceedings{Shriram2022_BookMusic, author = {Shriram, Jaidev and Tapaswi, Makarand and Alluri, Vinoo}, title = {{Sonus Texere! Automated Dense Soundtrack Construction for Books using Movie Adaptations}}, year = {2022}, booktitle = {International Society for Music Information Retrieval Conference (ISMIR)}, month = dec } - [C34]

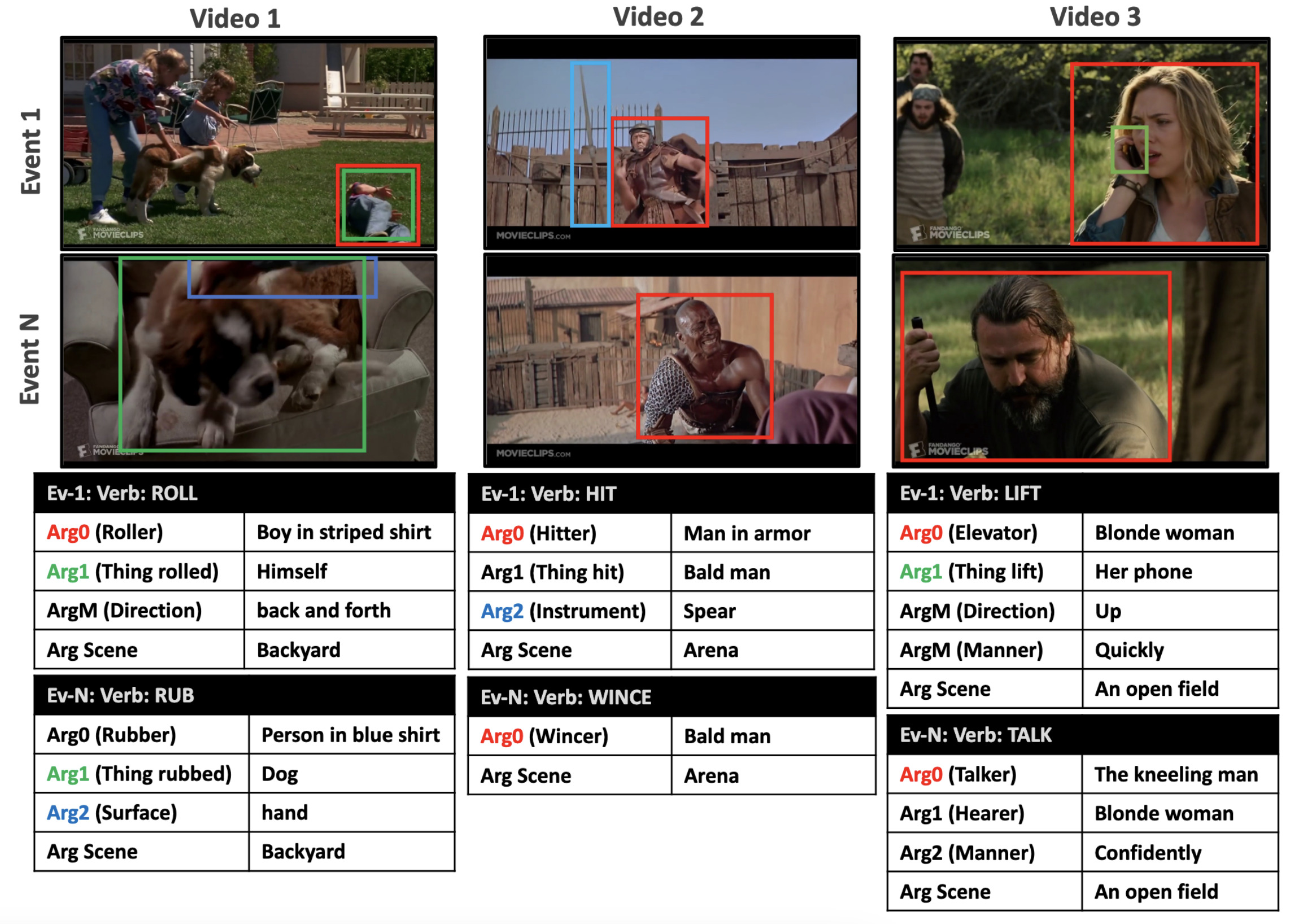

Grounded Video Situation RecognitionZeeshan Khan, C V Jawahar, and Makarand TapaswiIn Neural Information Processing Systems (NeurIPS), Dec 2022

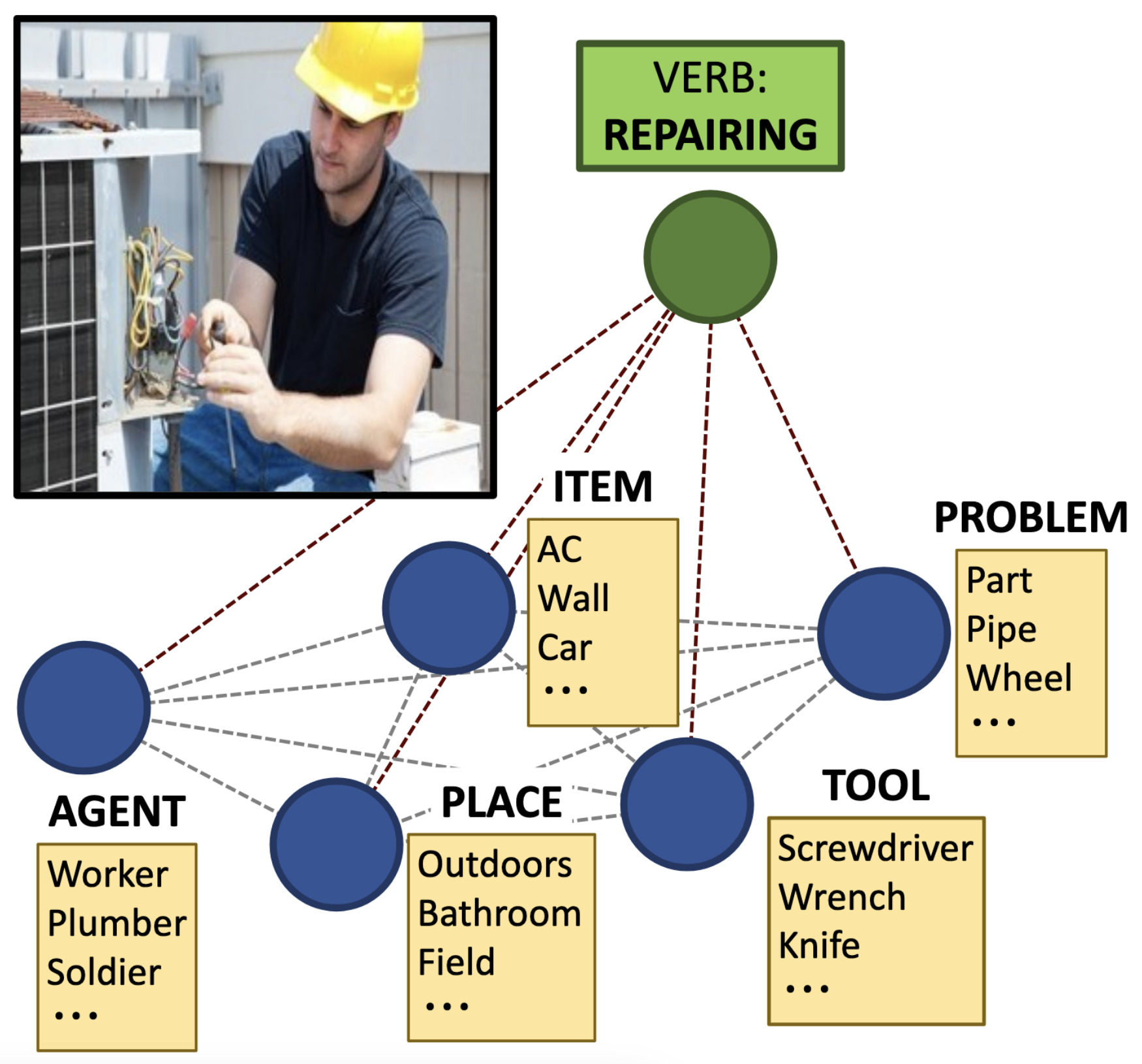

Grounded Video Situation RecognitionZeeshan Khan, C V Jawahar, and Makarand TapaswiIn Neural Information Processing Systems (NeurIPS), Dec 2022Dense video understanding requires answering several questions such as who is doing what to whom, with what, how, why, and where. Recently, Video Situation Recognition (VidSitu) is framed as a task for structured prediction of multiple events, their relationships, and actions and various verb-role pairs attached to descriptive entities. This task poses several challenges in identifying, disambiguating, and co-referencing entities across multiple verb-role pairs, but also faces some challenges of evaluation. In this work, we propose the addition of spatio-temporal grounding as an essential component of the structured prediction task in a weakly supervised setting, and present a novel three stage Transformer model, VideoWhisperer, that is empowered to make joint predictions. In stage one, we learn contextualised embeddings for video features in parallel with key objects that appear in the video clips to enable fine-grained spatio-temporal reasoning. The second stage sees verb-role queries attend and pool information from object embeddings, localising answers to questions posed about the action. The final stage generates these answers as captions to describe each verb-role pair present in the video. Our model operates on a group of events (clips) simultaneously and predicts verbs, verb-role pairs, their nouns, and their grounding on-the-fly. When evaluated on a grounding-augmented version of the VidSitu dataset, we observe a large improvement in entity captioning accuracy, as well as the ability to localize verb-roles without grounding annotations at training time.

@inproceedings{Khan2022_GroundedVidSitu, author = {Khan, Zeeshan and Jawahar, C V and Tapaswi, Makarand}, title = {{Grounded Video Situation Recognition}}, year = {2022}, booktitle = {Neural Information Processing Systems (NeurIPS)}, month = dec } - [C33]

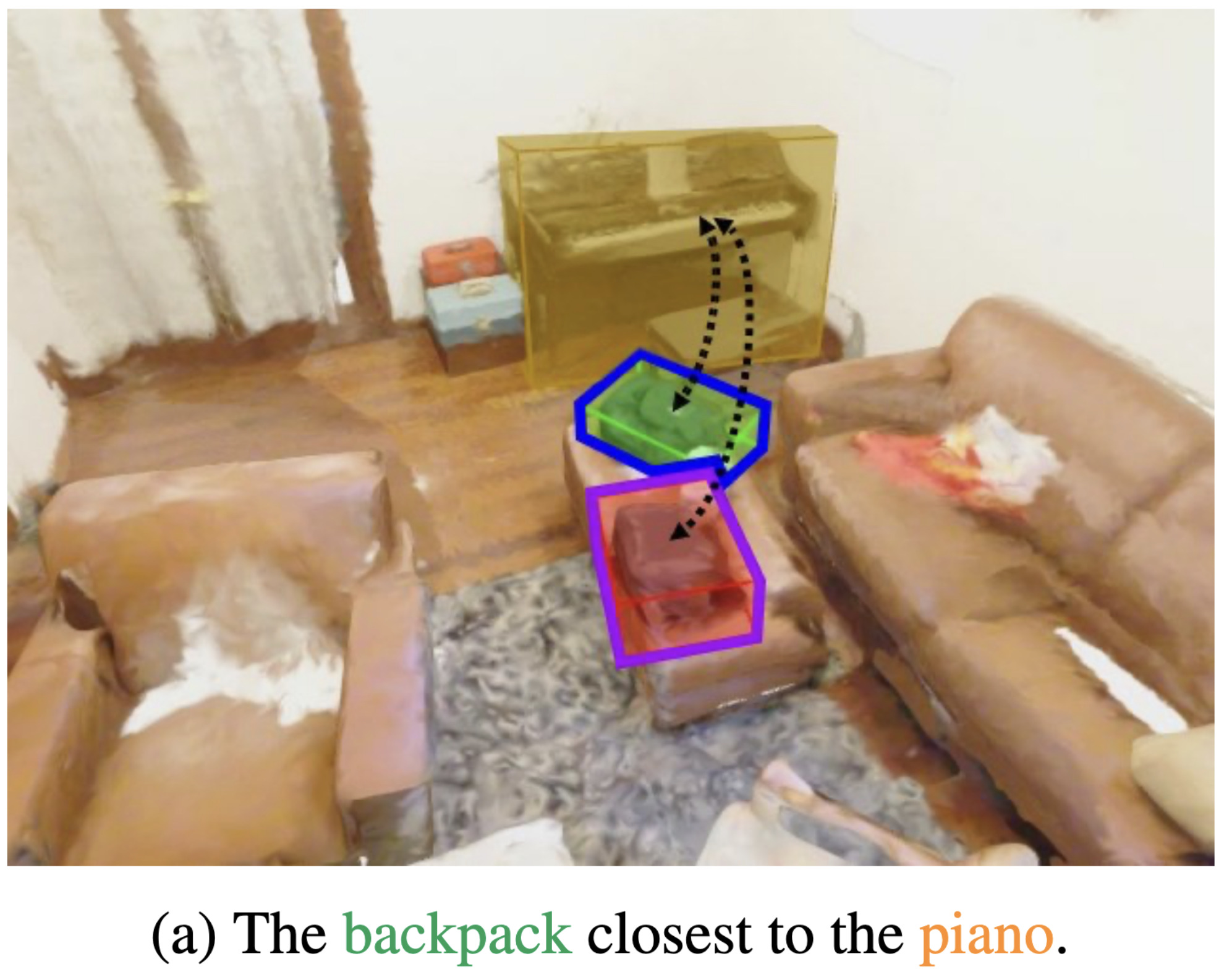

Language Conditioned Spatial Relation Reasoning for 3D Object GroundingIn Neural Information Processing Systems (NeurIPS), Dec 2022

Language Conditioned Spatial Relation Reasoning for 3D Object GroundingIn Neural Information Processing Systems (NeurIPS), Dec 2022Localizing objects in 3D scenes based on natural language requires understanding and reasoning about spatial relations. In particular, it is often crucial to distinguish similar objects referred by the text, such as "the left most chair" and "a chair next to the window". In this work we propose a language-conditioned transformer model for grounding 3D objects and their spatial relations. To this end, we design a spatial self-attention layer that accounts for relative distances and orientations between objects in input 3D point clouds. Training such a layer with visual and language inputs enables to disambiguate spatial relations and to localize objects referred by the text. To facilitate the cross-modal learning of relations, we further propose a teacher-student approach where the teacher model is first trained using ground-truth object labels, and then helps to train a student model using point cloud inputs. We perform ablation studies showing advantages of our approach. We also demonstrate our model to significantly outperform the state of the art on the challenging Nr3D, Sr3D and ScanRefer 3D object grounding datasets. Our code and pretrained models will become publicly available.

@inproceedings{Chen2022_3DVG, author = {Chen, Shizhe and Guhur, Pierre-Louis and Tapaswi, Makarand and Schmid, Cordelia and Laptev, Ivan}, title = {{Language Conditioned Spatial Relation Reasoning for 3D Object Grounding}}, year = {2022}, booktitle = {Neural Information Processing Systems (NeurIPS)}, month = dec } - [W8]

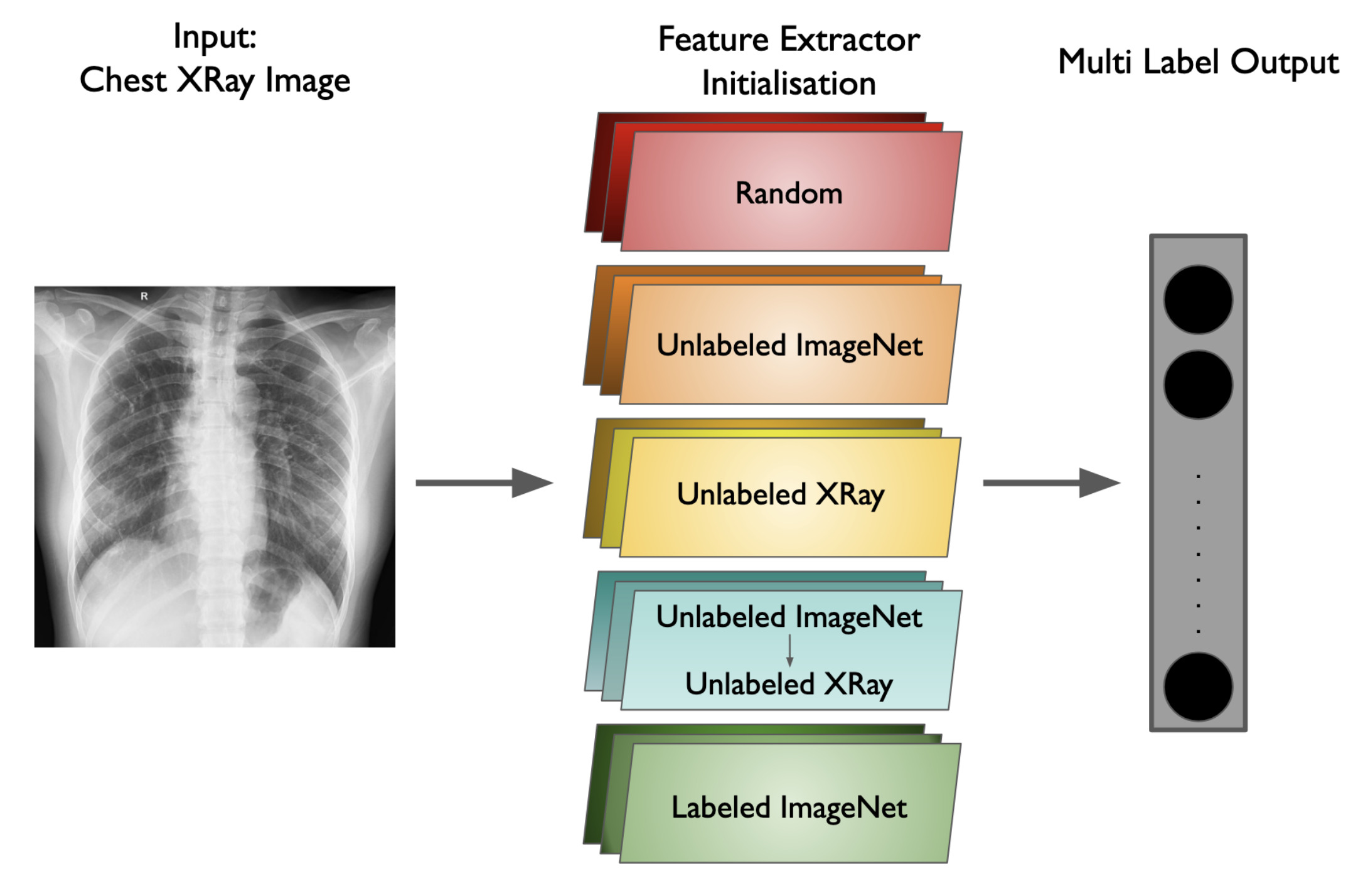

Can we Adopt Self-supervised Pretraining for Chest X-Rays?Arsh Verma, and Makarand TapaswiIn Machine Learning for Healthcare (ML4H) (Extended Abstract), Nov 2022

Can we Adopt Self-supervised Pretraining for Chest X-Rays?Arsh Verma, and Makarand TapaswiIn Machine Learning for Healthcare (ML4H) (Extended Abstract), Nov 2022Chest radiograph (or Chest X-Ray, CXR) is a popular medical imaging modality that is used by radiologists across the world to diagnose heart or lung conditions. Over the last decade, Convolutional Neural Networks (CNN), have seen success in identifying pathologies in CXR images. Typically, these CNNs are pretrained on the standard ImageNet classification task, but this assumes availability of large-scale annotated datasets. In this work, we analyze the utility of pretraining on unlabeled ImageNet or Chest X-Ray (CXR) datasets using various algorithms and in multiple settings. Some findings of our work include: (i) supervised training with labeled ImageNet learns strong representations that are hard to beat; (ii) self-supervised pretraining on ImageNet ( 1M images) shows performance similar to self-supervised pretraining on a CXR dataset ( 100K images); and (iii) the CNN trained on supervised ImageNet can be trained further with self-supervised CXR images leading to improvements, especially when the downstream dataset is on the order of a few thousand images.

@inproceedings{Verma2022_SSLCXR, author = {Verma, Arsh and Tapaswi, Makarand}, title = {{Can we Adopt Self-supervised Pretraining for Chest X-Rays?}}, year = {2022}, booktitle = {Machine Learning for Healthcare (ML4H) (Extended Abstract)}, month = nov } - [C32]

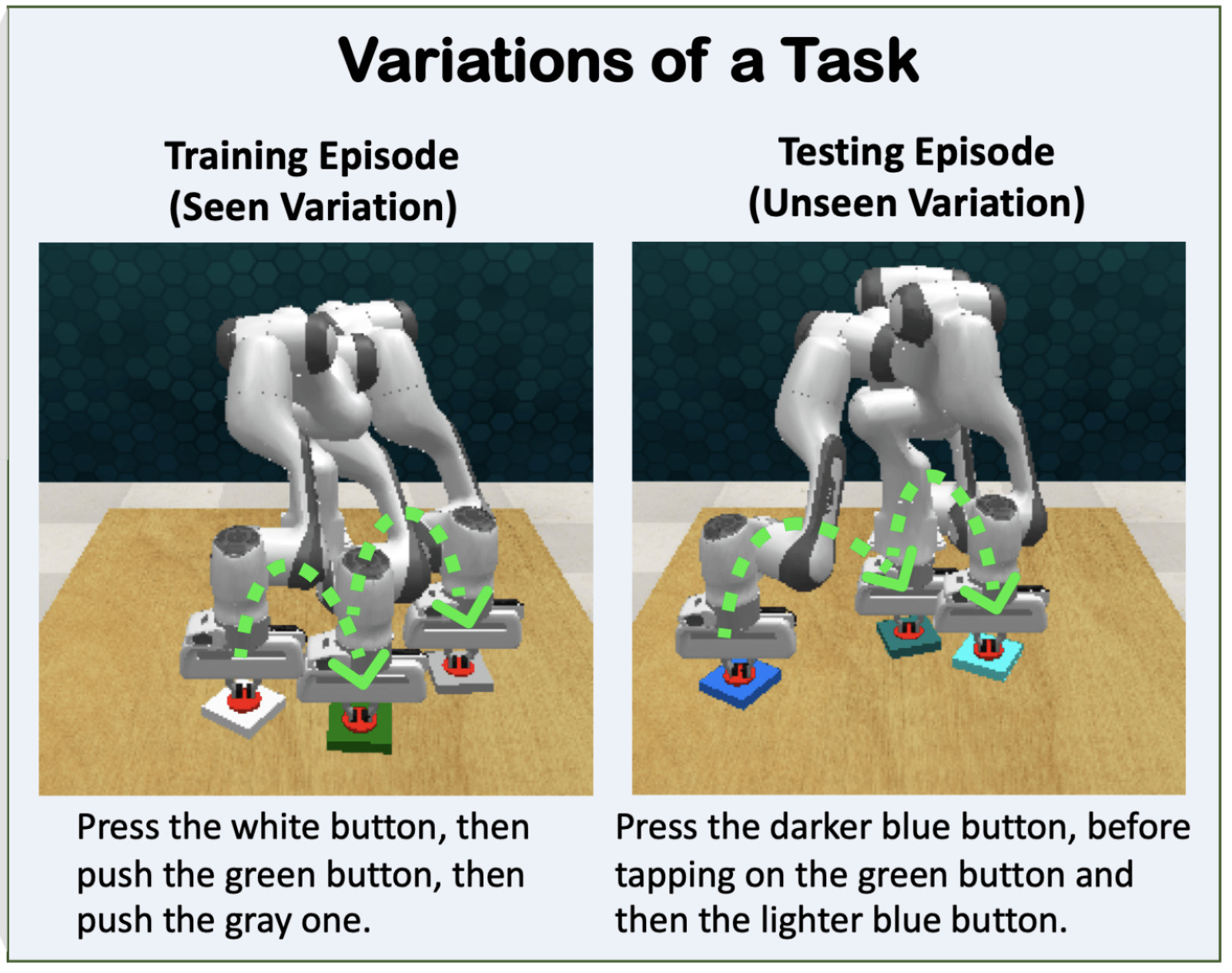

Instruction-driven History-aware Policies for Robotic ManipulationsPierre-Louis Guhur, Shizhe Chen, Ricardo Garcia Pinel, Makarand Tapaswi, Ivan Laptev, and Cordelia SchmidIn Conference on Robot Learning (CoRL), Dec 2022

Instruction-driven History-aware Policies for Robotic ManipulationsPierre-Louis Guhur, Shizhe Chen, Ricardo Garcia Pinel, Makarand Tapaswi, Ivan Laptev, and Cordelia SchmidIn Conference on Robot Learning (CoRL), Dec 2022In human environments, robots are expected to accomplish a variety of manipulation tasks given simple natural language instructions. Yet, robotic manipulation is extremely challenging as it requires fine-grained motor control, long-term memory as well as generalization to previously unseen tasks and environments. To address these challenges, we propose a unified transformer-based approach that takes into account multiple inputs. In particular, our transformer architecture integrates (i) natural language instructions and (ii) multi-view scene observations while (iii) keeping track of the full history of observations and actions. Such an approach enables learning dependencies between history and instructions and improves manipulation precision using multiple views. We evaluate our method on the challenging RLBench benchmark and on a real-world robot. Notably, our approach scales to 74 diverse RLBench tasks and outperforms the state of the art. We also address instruction-conditioned tasks and demonstrate excellent generalization to previously unseen variations.

@inproceedings{Guhur2022_Hiveformer, author = {Guhur, Pierre-Louis and Chen, Shizhe and Pinel, Ricardo Garcia and Tapaswi, Makarand and Laptev, Ivan and Schmid, Cordelia}, title = {{Instruction-driven History-aware Policies for Robotic Manipulations}}, year = {2022}, booktitle = {Conference on Robot Learning (CoRL)}, month = dec } - [C31]

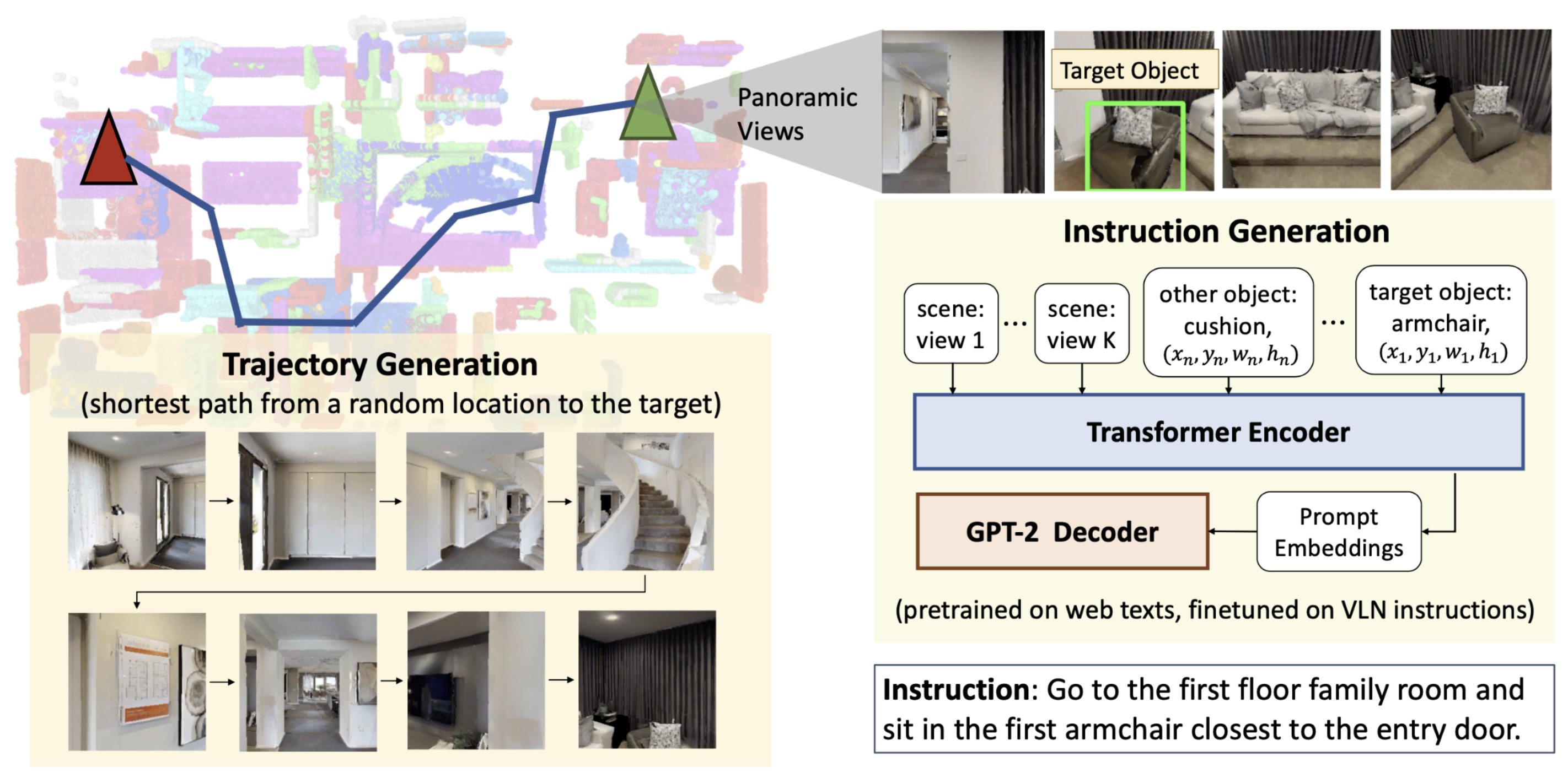

Learning from Unlabeled 3D Environments for Vision-and-Language NavigationIn European Conference on Computer Vision (ECCV), Oct 2022

Learning from Unlabeled 3D Environments for Vision-and-Language NavigationIn European Conference on Computer Vision (ECCV), Oct 2022In vision-and-language navigation (VLN), an embodied agent is required to navigate in realistic 3D environments following natural language instructions. One major bottleneck for existing VLN approaches is the lack of sufficient training data, resulting in unsatisfactory generalization to unseen environments. While VLN data is typically collected manually, such an approach is expensive and prevents scalability. In this work, we address the data scarcity issue by proposing to automatically create a large-scale VLN dataset from 900 unlabeled 3D buildings from HM3D. We generate a navigation graph for each building and transfer object predictions from 2D to generate pseudo 3D object labels by cross-view consistency. We then fine-tune a pretrained language model using pseudo object labels as prompts to alleviate the cross-modal gap in instruction generation. Our resulting HM3D-AutoVLN dataset is an order of magnitude larger than existing VLN datasets in terms of navigation environments and instructions. We experimentally demonstrate that HM3D-AutoVLN significantly increases the generalization ability of resulting VLN models. On the SPL metric, our approach improves over state of the art by 7.1% and 8.1% on the unseen validation splits of REVERIE and SOON datasets respectively.

@inproceedings{Chen2022_3DVLN, author = {Chen, Shizhe and Guhur, Pierre-Louis and Tapaswi, Makarand and Schmid, Cordelia and Laptev, Ivan}, title = {{Learning from Unlabeled 3D Environments for Vision-and-Language Navigation}}, year = {2022}, booktitle = {European Conference on Computer Vision (ECCV)}, month = oct } - [C30]

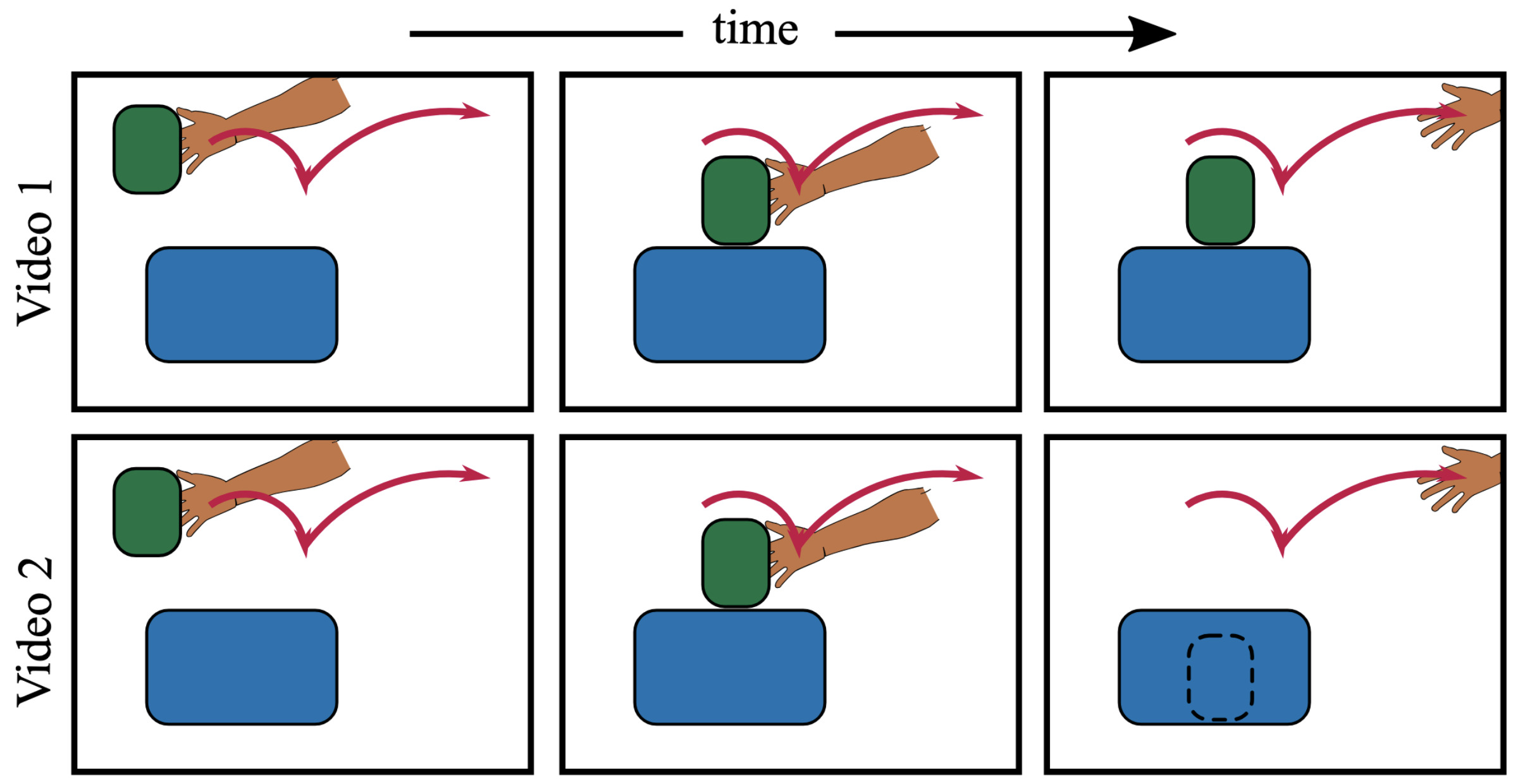

Learning Object Manipulation Skills from Video via Approximate Differentiable PhysicsIn International Conference on Intelligent Robots and Systems (IROS), Oct 2022

Learning Object Manipulation Skills from Video via Approximate Differentiable PhysicsIn International Conference on Intelligent Robots and Systems (IROS), Oct 2022We aim to teach robots to perform simple object manipulation tasks by watching a single video demonstration. Towards this goal, we propose an optimization approach that outputs a coarse and temporally evolving 3D scene to mimic the action demonstrated in the input video. Similar to previous work, a differentiable renderer ensures perceptual fidelity between the 3D scene and the 2D video. Our key novelty lies in the inclusion of a differentiable approach to solve a set of Ordinary Differential Equations (ODEs) that allows us to approximately model laws of physics such as gravity, friction, and hand-object or object-object interactions. This not only enables us to dramatically improve the quality of estimated hand and object states, but also produces physically admissible trajectories that can be directly translated to a robot without the need for costly reinforcement learning. We evaluate our approach on a 3D reconstruction task that consists of 54 video demonstrations sourced from 9 actions such as pull something from right to left or put something in front of something. Our approach improves over previous state-of-the-art by almost 30%, demonstrating superior quality on especially challenging actions involving physical interactions of two objects such as put something onto something. Finally, we showcase the learned skills on a Franka Emika Panda robot.

@inproceedings{Petrik2022_PhysReal2Sim, author = {Petrik, Vladimir and Qureshi, Mohammad Nomaan and Sivic, Josef and Tapaswi, Makarand}, title = {{Learning Object Manipulation Skills from Video via Approximate Differentiable Physics}}, year = {2022}, booktitle = {International Conference on Intelligent Robots and Systems (IROS)}, month = oct } - [C29]

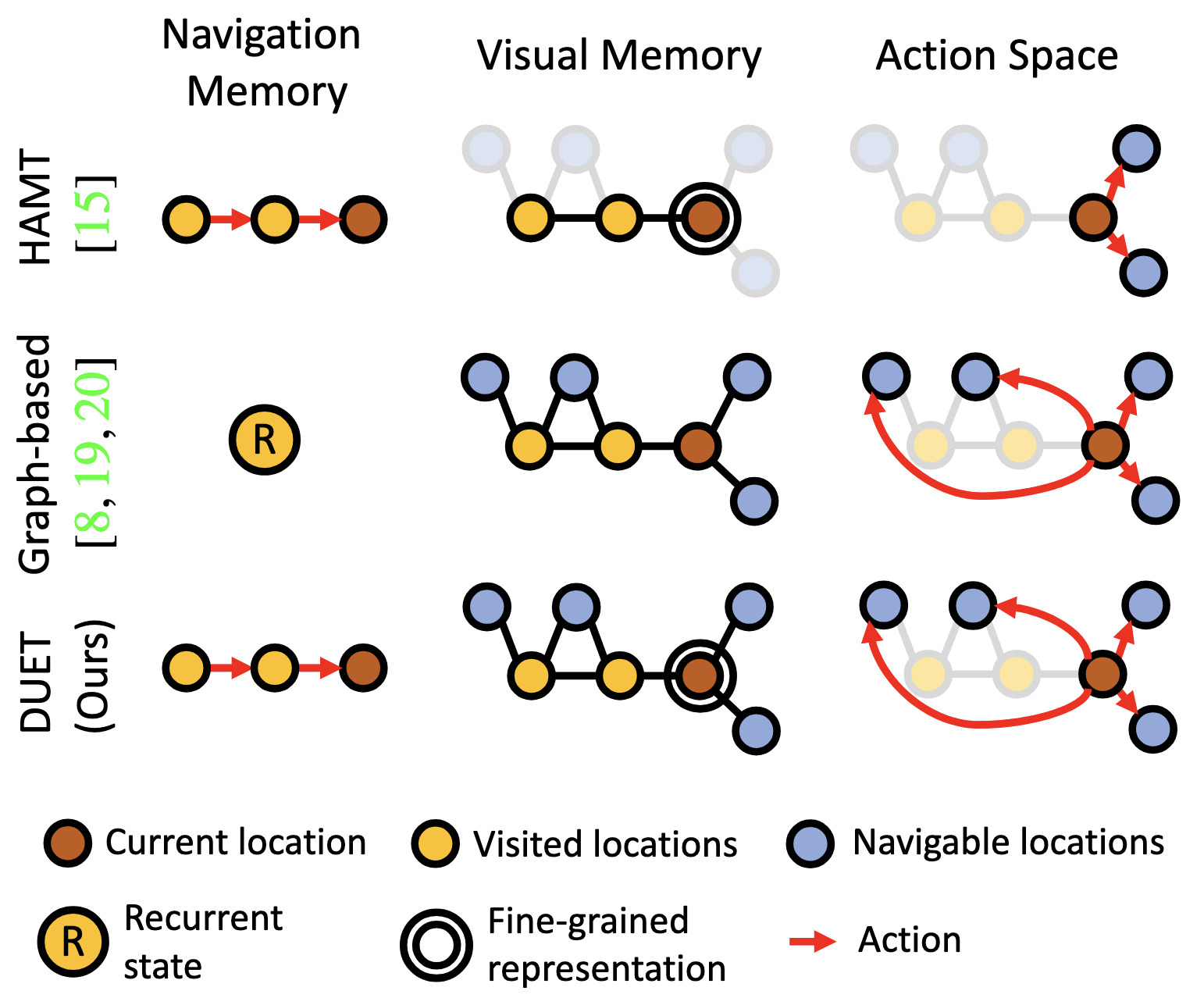

Think Global, Act Local: Dual-scale Graph Transformer for Vision-and-Language NavigationIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2022

Think Global, Act Local: Dual-scale Graph Transformer for Vision-and-Language NavigationIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2022Following language instructions to navigate in unseen environments is a challenging problem for autonomous embodied agents. The agent not only needs to ground languages in visual scenes, but also should explore the environment to reach its target. In this work, we propose a dual-scale graph transformer (DUET) for joint long-term action planning and fine-grained cross-modal understanding. We build a topological map on-the-fly to enable efficient exploration in global action space. To balance the complexity of large action space reasoning and fine-grained language grounding, we dynamically combine a fine-scale encoding over local observations and a coarse-scale encoding on a global map via graph transformers. The proposed approach, DUET, significantly outperforms state-of-the-art methods on goal-oriented vision-and-language navigation (VLN) benchmarks REVERIE and SOON. It also improves the success rate on the fine-grained VLN benchmark R2R.

@inproceedings{Chen2022_Duet, author = {Chen, Shizhe and Guhur, Pierre-Louis and Tapaswi, Makarand and Schmid, Cordelia and Laptev, Ivan}, title = {{Think Global, Act Local: Dual-scale Graph Transformer for Vision-and-Language Navigation}}, year = {2022}, booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)}, month = jun, doi = {10.1109/CVPR52688.2022.01604} }

2021

- [J3]

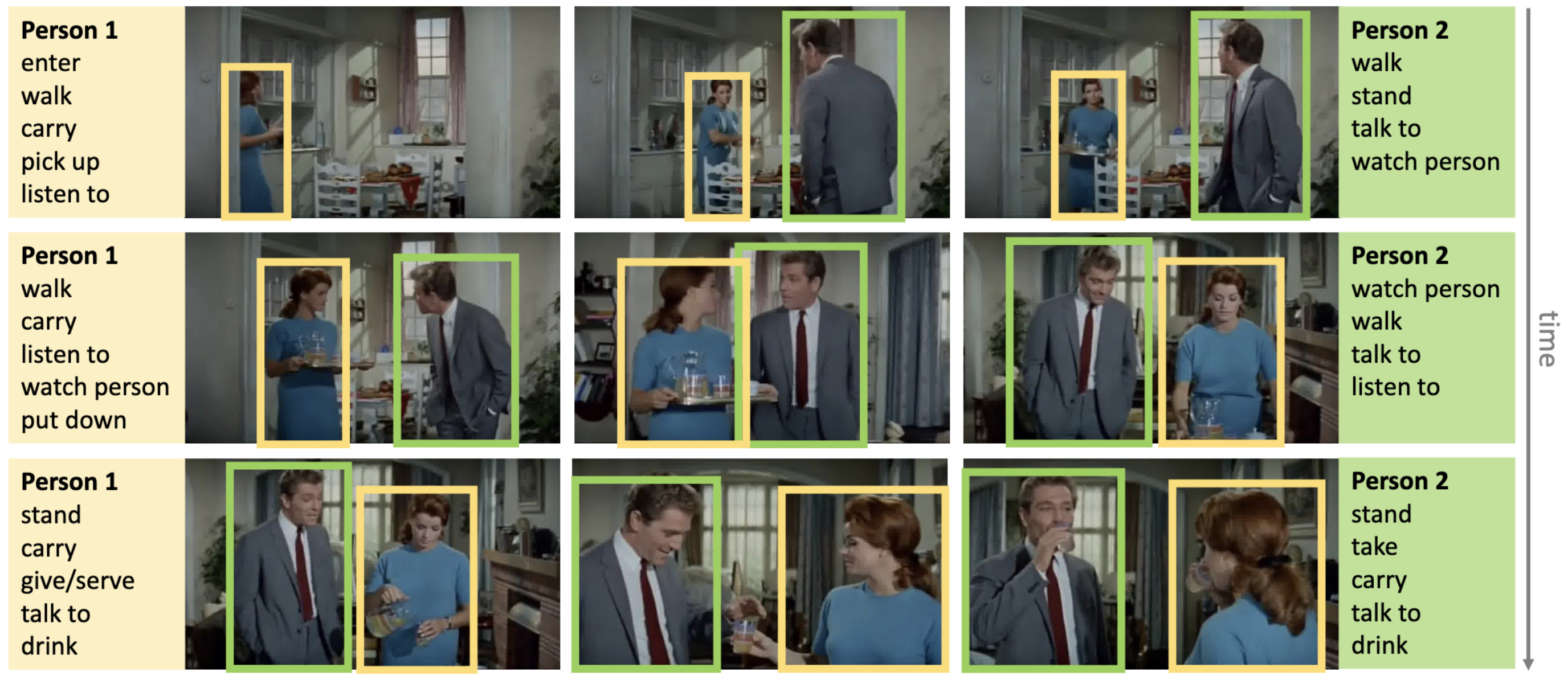

Long term Spatio-Temporal Modeling for Action DetectionMakarand Tapaswi* , Vijay Kumar*, and Ivan LaptevComputer Vision and Image Understanding (CVIU), Jun 2021

Long term Spatio-Temporal Modeling for Action DetectionMakarand Tapaswi* , Vijay Kumar*, and Ivan LaptevComputer Vision and Image Understanding (CVIU), Jun 2021Modeling person interactions with their surroundings has proven to be effective for recognizing and localizing human actions in videos. While most recent works focus on learning short term interactions, in this work, we consider long-term person interactions and jointly localize actions of multiple actors over an entire video shot. We construct a graph with nodes that correspond to keyframe actor instances and connect them with two edge types. Spatial edges connect actors within a keyframe, and temporal edges connect multiple instances of the same actor over a video shot. We propose a Graph Neural Network that explicitly models spatial and temporal states for each person instance and learns to effectively combine information from both modalities to make predictions at the same time. We conduct experiments on the AVA dataset and show that our graph-based model provides consistent improvements over several video descriptors, achieving state-of-the-art performance without any fine-tuning.

@article{Tapaswi2021_ActionDetection, author = {Tapaswi, Makarand and Kumar, Vijay and Laptev, Ivan}, title = {{Long term Spatio-Temporal Modeling for Action Detection}}, year = {2021}, journal = {Computer Vision and Image Understanding (CVIU)}, volume = {210}, doi = {10.1016/j.cviu.2021.103242} } - [C28]

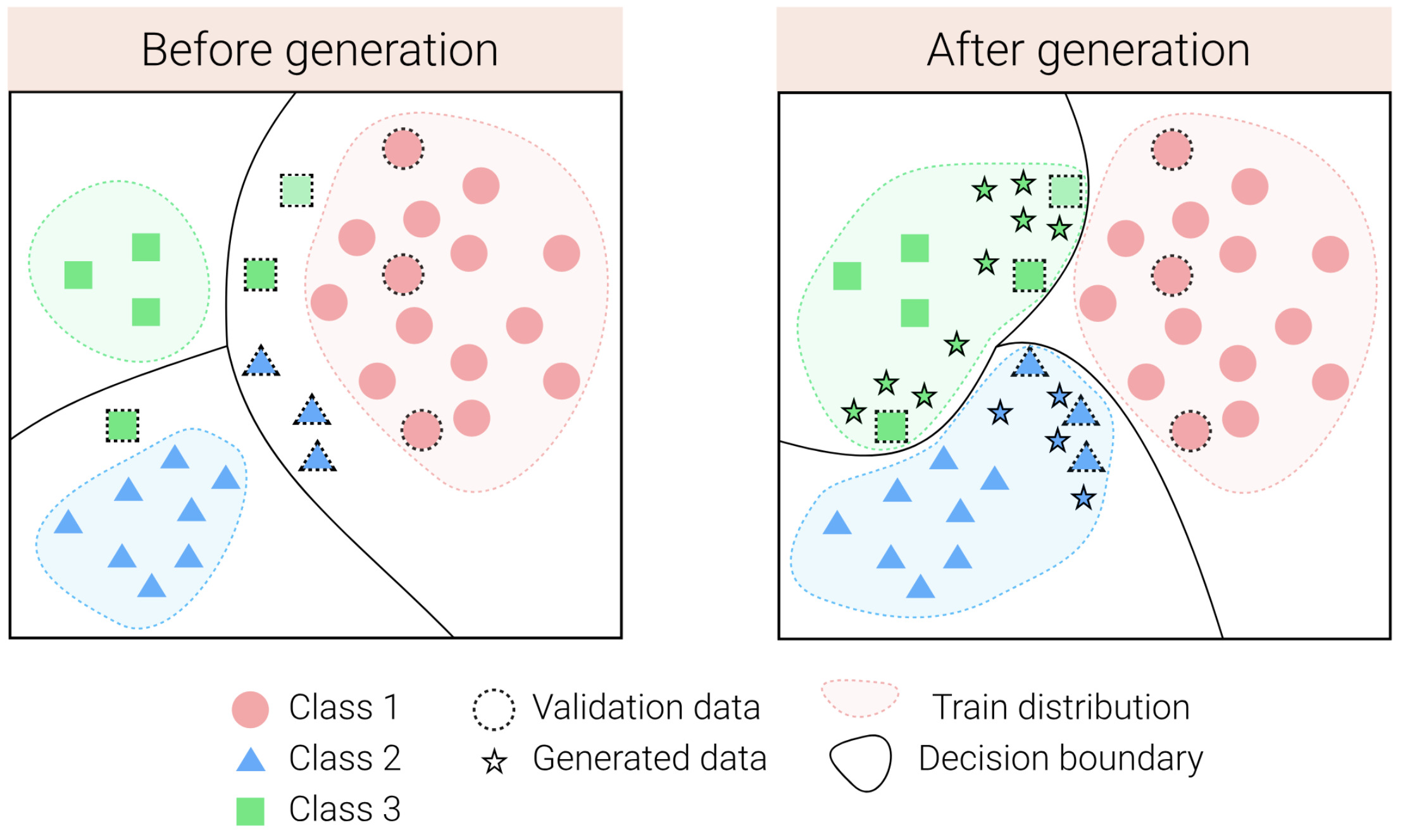

Feature Generation for Long-tail ClassificationIn Indian Conference on Computer Vision, Graphics, and Image Processing (ICVGIP), Dec 2021

Feature Generation for Long-tail ClassificationIn Indian Conference on Computer Vision, Graphics, and Image Processing (ICVGIP), Dec 2021The visual world naturally exhibits an imbalance in the number of object or scene instances resulting in a long-tailed distribution. This imbalance poses significant challenges for classification models based on deep learning. Oversampling instances of the tail classes attempts to solve this imbalance. However, the limited visual diversity results in a network with poor representation ability. A simple counter to this is decoupling the representation and classifier networks and using oversampling only to train the classifier. In this paper, instead of repeatedly re-sampling the same image (and thereby features), we explore a direction that attempts to generate meaningful features by estimating the tail category’s distribution. Inspired by ideas from recent work on few-shot learning, we create calibrated distributions to sample additional features that are subsequently used to train the classifier. Through several experiments on the CIFAR-100-LT (long-tail) dataset with varying imbalance factors and on mini-ImageNet-LT (long-tail), we show the efficacy of our approach and establish a new state-of-the-art. We also present a qualitative analysis of generated features using t-SNE visualizations and analyze the nearest neighbors used to calibrate the tail class distributions. Our code is available at \urlhttps://github.com/rahulvigneswaran/TailCalibX.

@inproceedings{Vigneswaran2021_TailCalibX, author = {Vigneswaran, Rahul and Law, Marc T. and Balasubramanian, Vineeth N and Tapaswi, Makarand}, title = {{Feature Generation for Long-tail Classification}}, year = {2021}, booktitle = {Indian Conference on Computer Vision, Graphics, and Image Processing (ICVGIP)}, month = dec, doi = {10.1145/3490035.3490300} } - [C27]

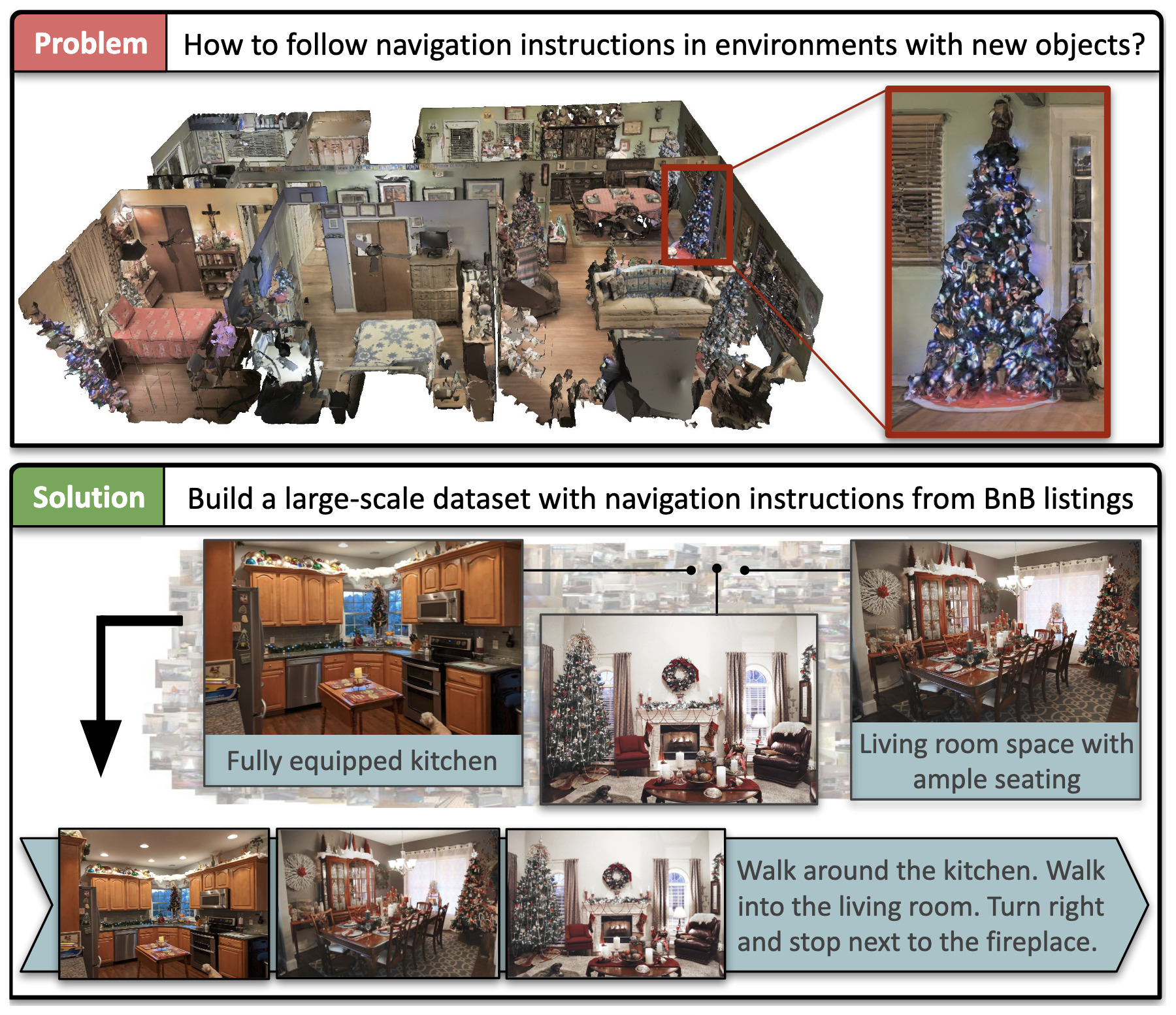

Airbert: In-domain Pretraining for Vision-and-Language NavigationIn International Conference on Computer Vision (ICCV), Oct 2021

Airbert: In-domain Pretraining for Vision-and-Language NavigationIn International Conference on Computer Vision (ICCV), Oct 2021Vision-and-language navigation (VLN) aims to enable embodied agents to navigate in realistic environments using natural language instructions. Given the scarcity of domain-specific training data and the high diversity of image and language inputs, the generalization of VLN agents to unseen environments remains challenging. Recent methods explore pretraining to improve generalization, however, the use of generic image-caption datasets or existing small-scale VLN environments is suboptimal and results in limited improvements. In this work, we introduce BnB, a large-scale and diverse in-domain VLN dataset. We first collect image-caption (IC) pairs from hundreds of thousands of listings from online rental marketplaces. Using IC pairs we next propose automatic strategies to generate millions of VLN path-instruction (PI) pairs. We further propose a shuffling loss that improves the learning of temporal order inside PI pairs. We use BnB to pretrain our Airbert model that can be adapted to discriminative and generative settings and show that it outperforms state of the art for Room-to-Room (R2R) navigation and Remote Referring Expression (REVERIE) benchmarks. Moreover, our in-domain pretraining significantly increases performance on a challenging few-shot VLN evaluation, where we train the model only on VLN instructions from a few houses.

@inproceedings{Guhur2021_Airbert, author = {Guhur, Pierre-Louis and Tapaswi, Makarand and Chen, Shizhe and Schmid, Cordelia and Laptev, Ivan}, title = {{Airbert: In-domain Pretraining for Vision-and-Language Navigation}}, year = {2021}, booktitle = {International Conference on Computer Vision (ICCV)}, month = oct, doi = {10.1109/ICCV48922.2021.00166} }

2020

- [C26]

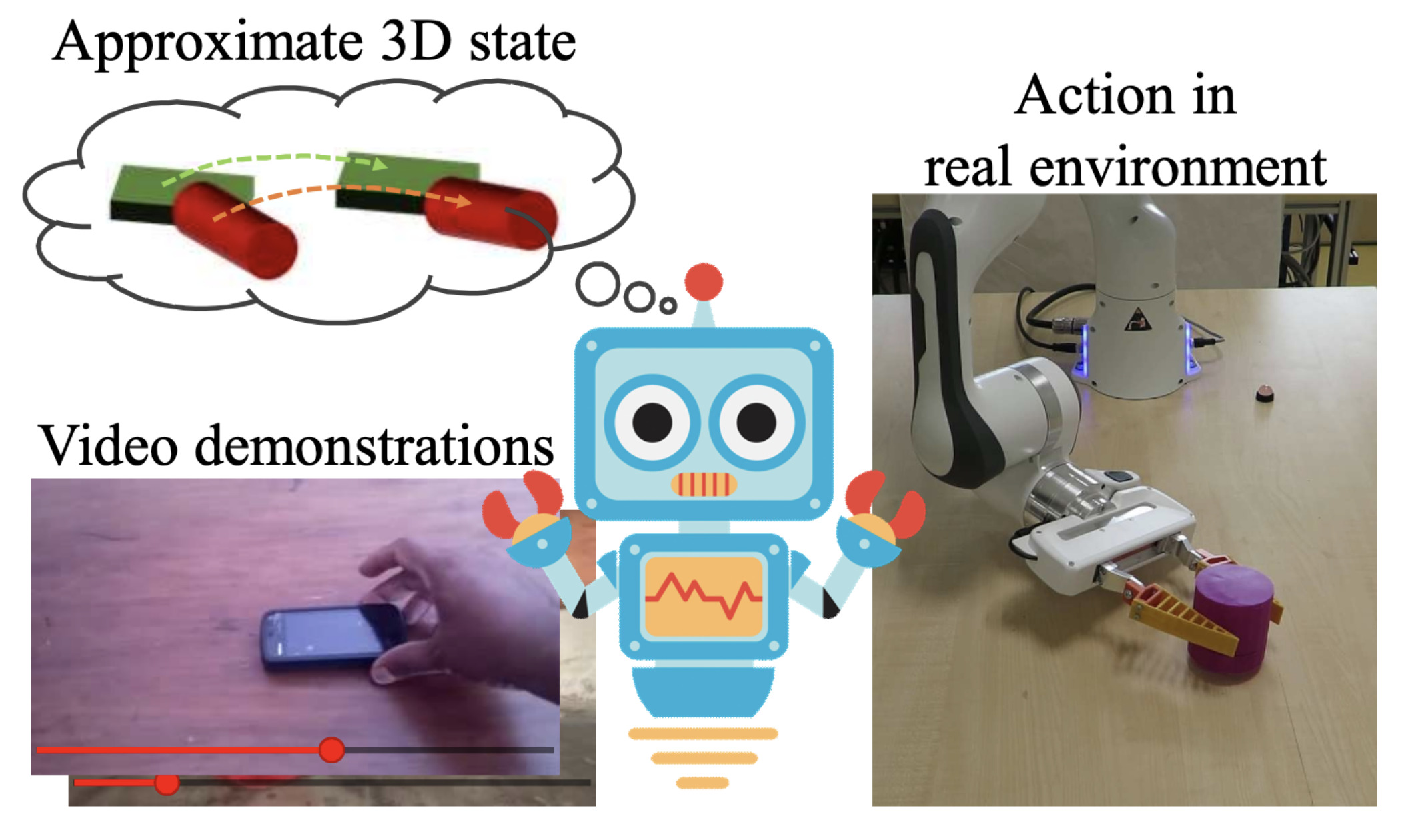

Learning Object Manipulation Skills via Approximate State Estimation from Real VideosIn Conference on Robot Learning (CoRL), Nov 2020

Learning Object Manipulation Skills via Approximate State Estimation from Real VideosIn Conference on Robot Learning (CoRL), Nov 2020Humans are adept at learning new tasks by watching a few instructional videos. On the other hand, robots that learn new actions either require a lot of effort through trial and error, or use expert demonstrations that are challenging to obtain. In this paper, we explore a method that facilitates learning object manipulation skills directly from videos. Leveraging recent advances in 2D visual recognition and differentiable rendering, we develop an optimization based method to estimate a coarse 3D state representation for the hand and the manipulated object(s) without requiring any supervision. We use these trajectories as dense rewards for an agent that learns to mimic them through reinforcement learning. We evaluate our method on simple single- and two-object actions from the Something-Something dataset. Our approach allows an agent to learn actions from single videos, while watching multiple demonstrations makes the policy more robust. We show that policies learned in a simulated environment can be easily transferred to a real robot.

@inproceedings{Petrik2020_Real2Sim, author = {Petrik, Vladimir and Tapaswi, Makarand and Laptev, Ivan and Sivic, Josef}, title = {{Learning Object Manipulation Skills via Approximate State Estimation from Real Videos}}, year = {2020}, booktitle = {Conference on Robot Learning (CoRL)}, month = nov } - [C25]

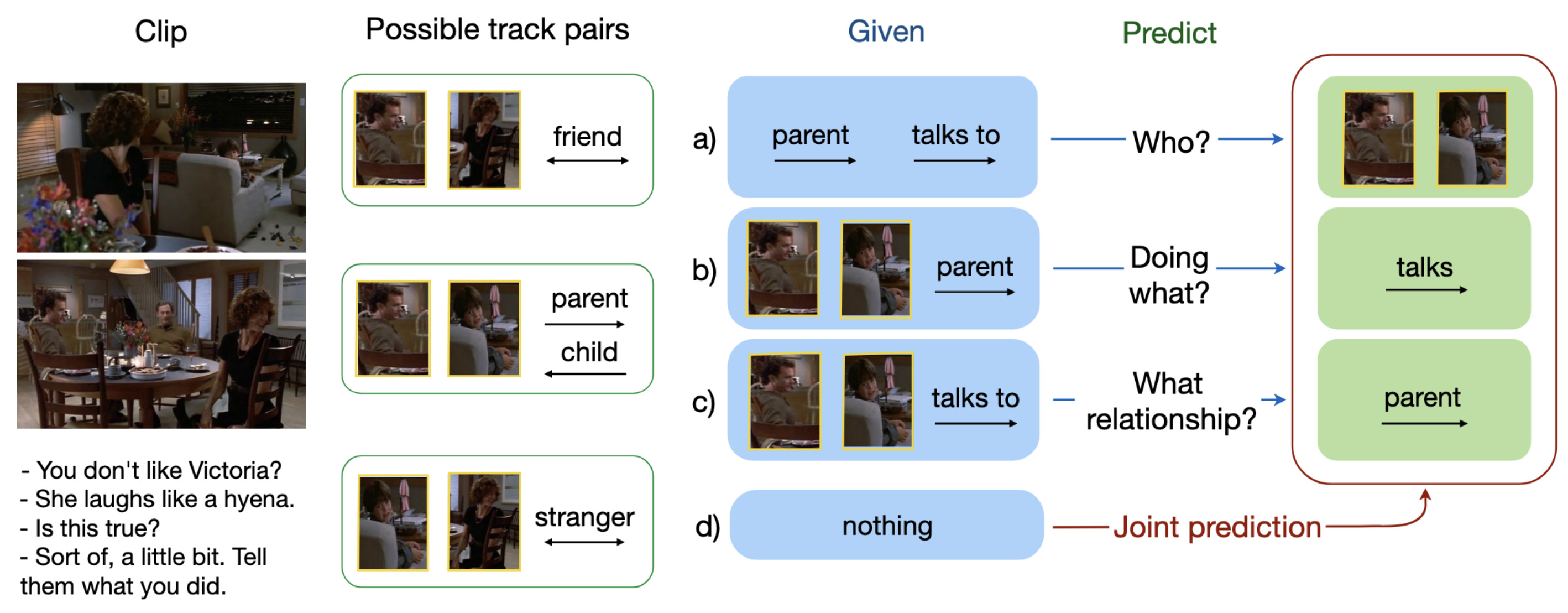

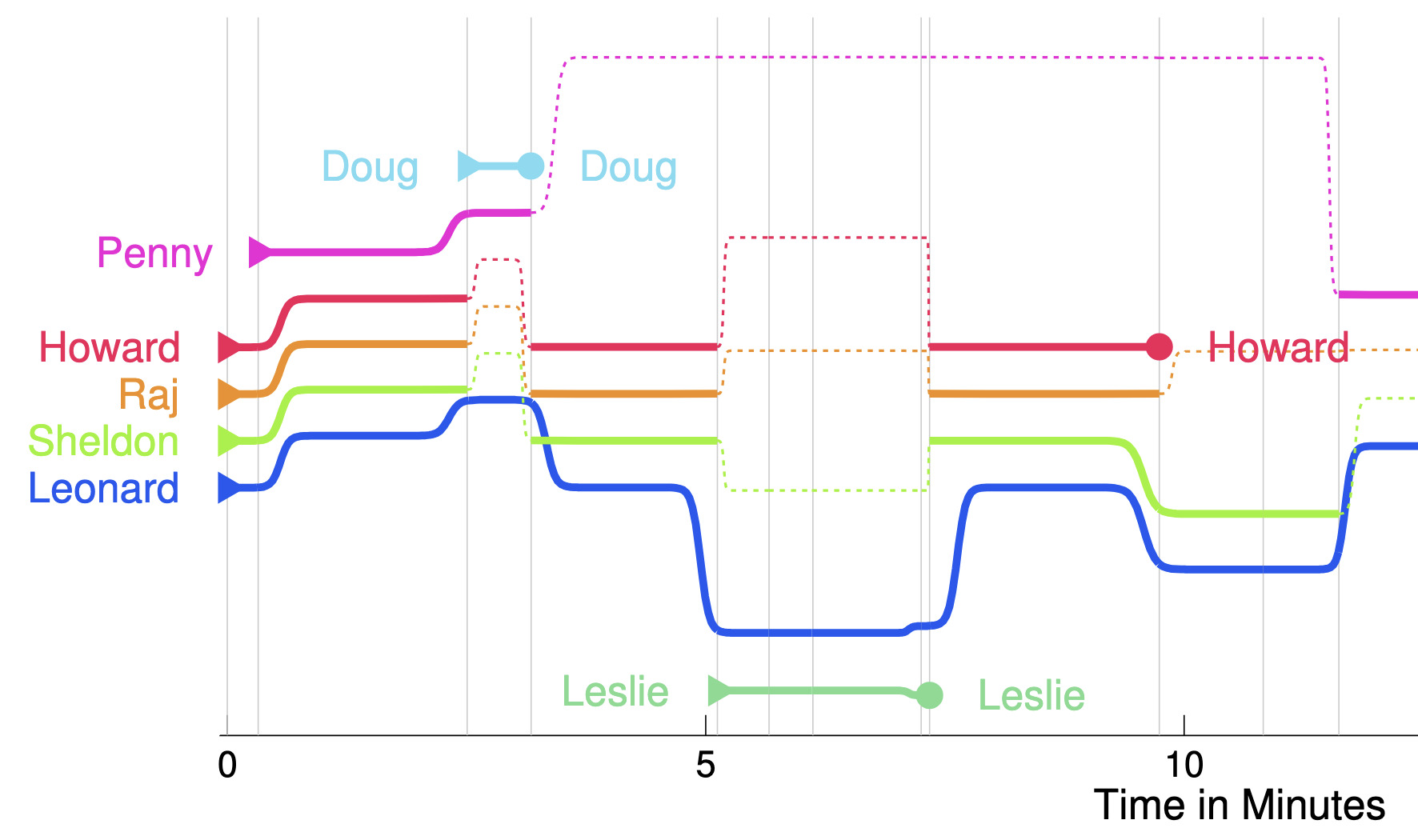

Learning Interactions and Relationships between Movie CharactersAnna Kukleva, Makarand Tapaswi, and Ivan LaptevIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2020

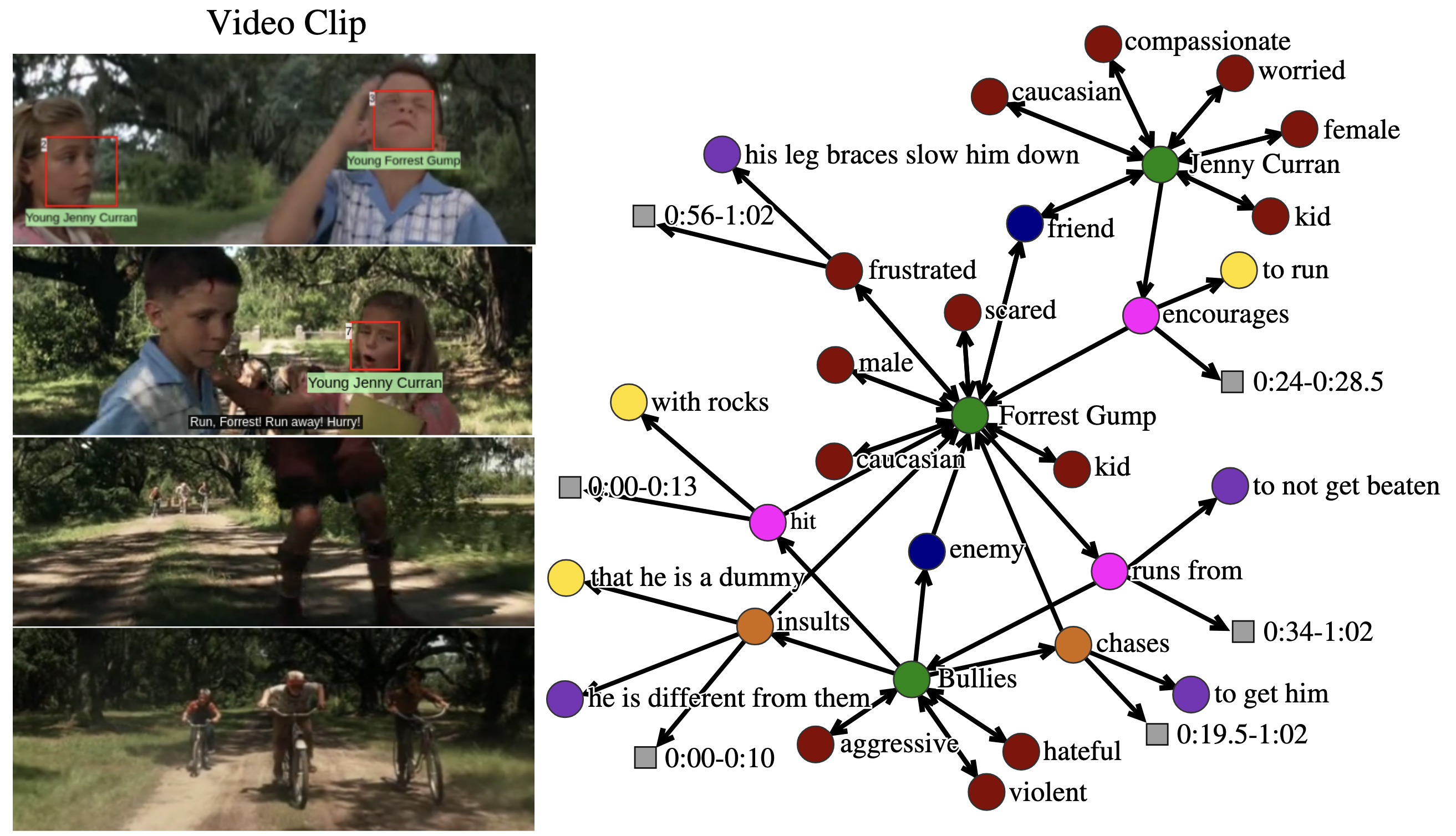

Learning Interactions and Relationships between Movie CharactersAnna Kukleva, Makarand Tapaswi, and Ivan LaptevIn Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2020Interactions between people are often governed by their relationships. On the flip side, social relationships are built upon several interactions. Two strangers are more likely to greet and introduce themselves while becoming friends over time. We are fascinated by this interplay between interactions and relationships, and believe that it is an important aspect of understanding social situations. In this work, we propose neural models to learn and jointly predict interactions, relationships, and the pair of characters that are involved. We note that interactions are informed by a mixture of visual and dialog cues, and present a multimodal architecture to extract meaningful information from them. Localizing the pair of interacting characters in video is a time-consuming process, instead, we train our model to learn from clip-level weak labels. We evaluate our models on the MovieGraphs dataset and show the impact of modalities, use of longer temporal context for predicting relationships, and achieve encouraging performance using weak labels as compared with ground-truth labels.

@inproceedings{Kukleva2020_MGIntRel, author = {Kukleva, Anna and Tapaswi, Makarand and Laptev, Ivan}, title = {{Learning Interactions and Relationships between Movie Characters}}, year = {2020}, booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)}, month = jun, doi = {10.1109/CVPR42600.2020.00987} } - [C24]

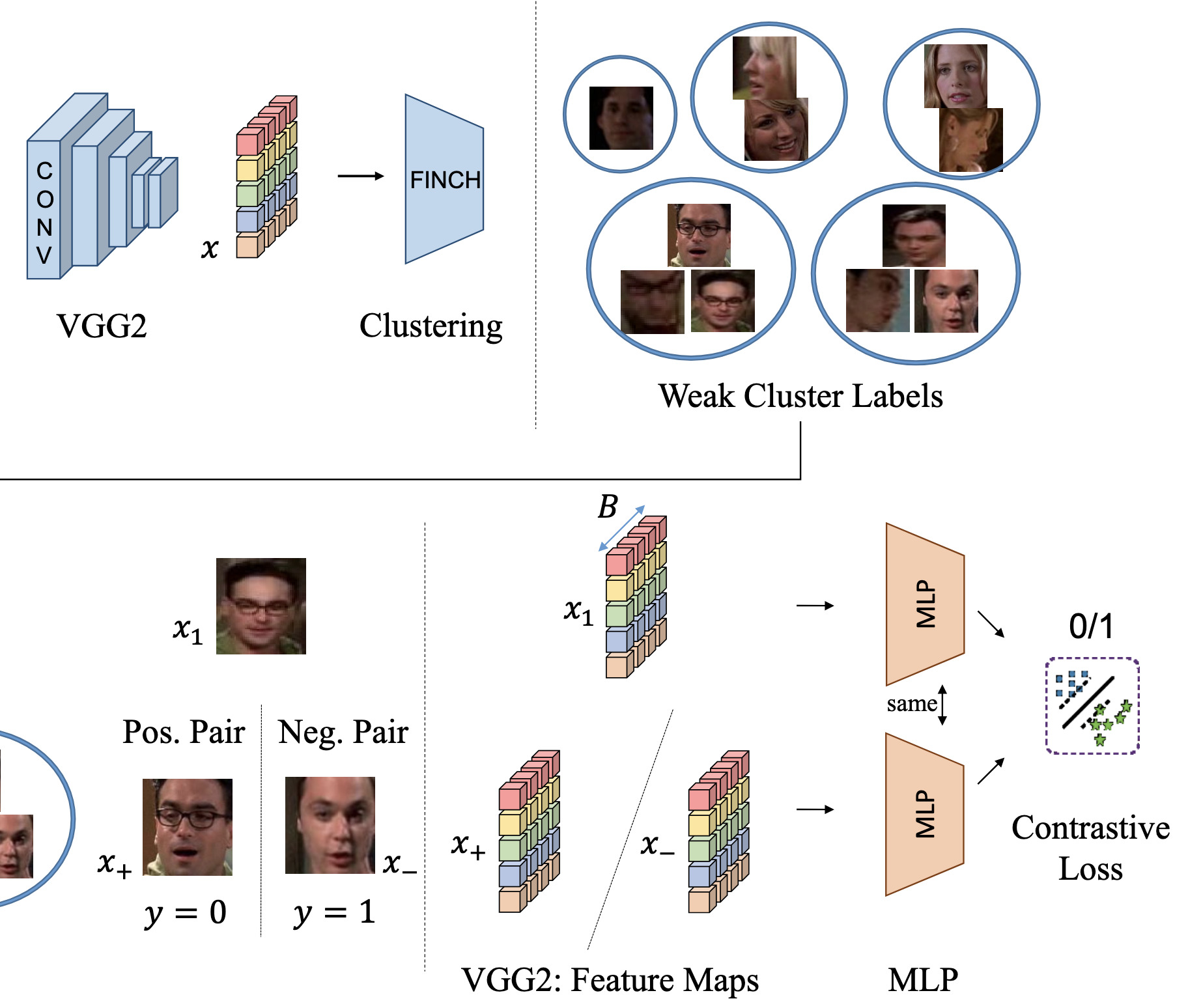

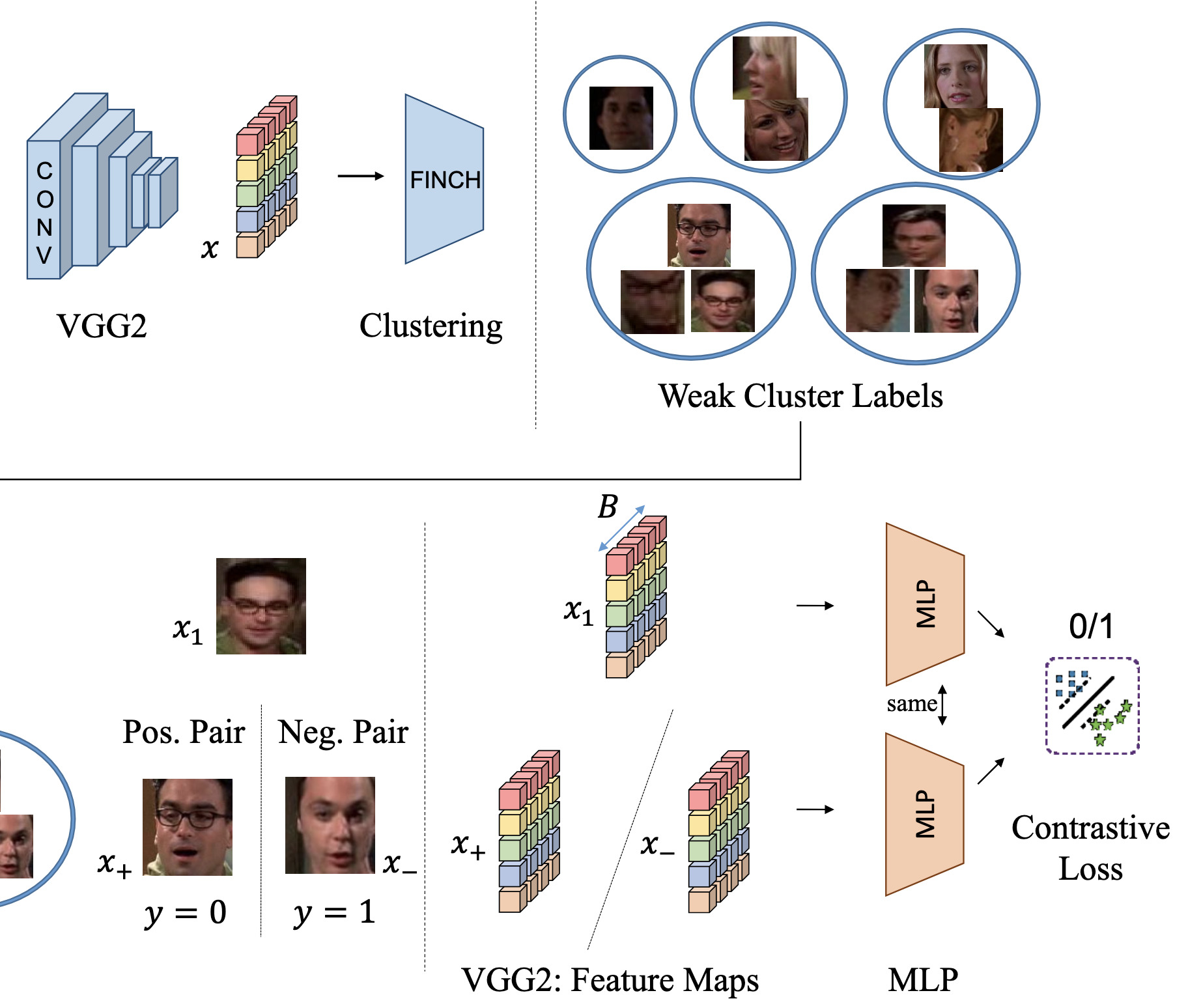

Clustering based Contrastive Learning for Improving Face RepresentationsIn IEEE International Conference on Automatic Face and Gesture Recognition (FG), May 2020

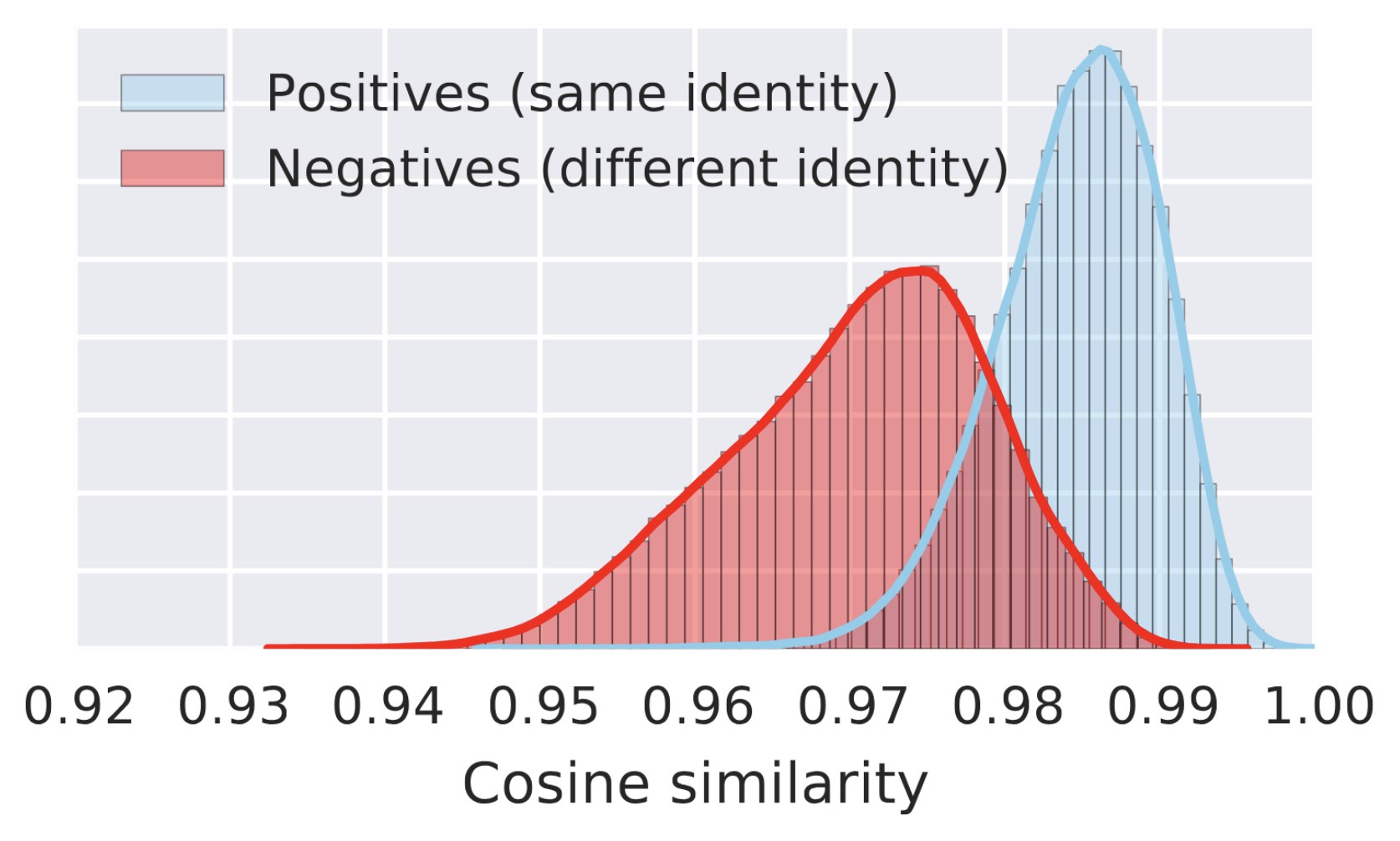

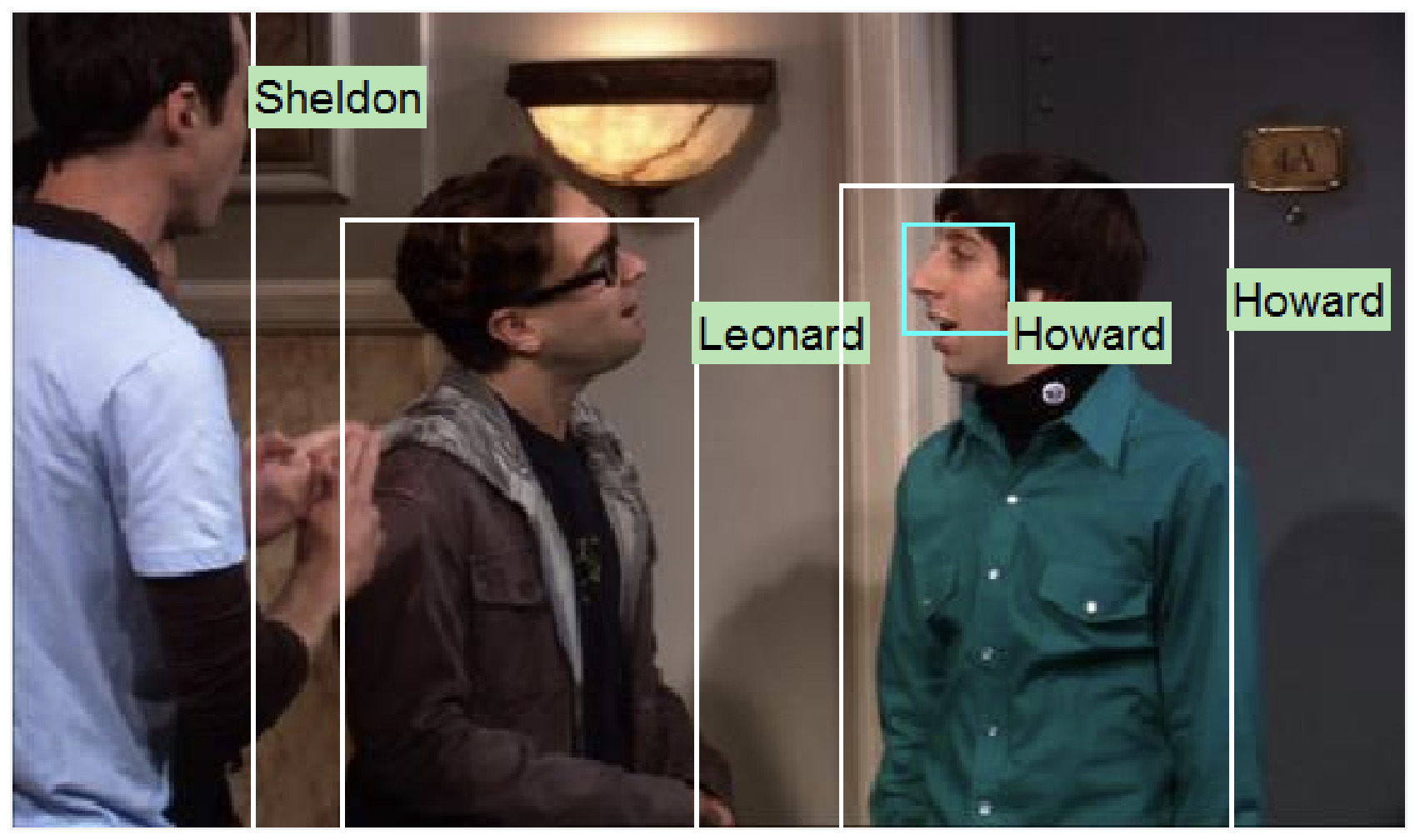

Clustering based Contrastive Learning for Improving Face RepresentationsIn IEEE International Conference on Automatic Face and Gesture Recognition (FG), May 2020A good clustering algorithm can discover natural groupings in data. These groupings, if used wisely, providea form of weak supervision for learning representations. In this work, we present Clustering-based Contrastive Learning (CCL), a new clustering-based representation learning approach that uses labels obtained from clustering along with video constraints to learn discriminative face features. We demonstrate our method on the challenging task of learning representations for video face clustering. Through several ablation studies, we analyze the impact of creating pair-wise positive and negative labels from different sources. Experiments on three challenging video face clustering datasets: BBT-0101, BF-0502, and ACCIO show that CCL achieves a new state-of-the-art on all datasets.

@inproceedings{Sharma2020_CCL, author = {Sharma, Vivek and Tapaswi, Makarand and Sarfraz, Saquib and Stiefelhagen, Rainer}, title = {{Clustering based Contrastive Learning for Improving Face Representations}}, year = {2020}, booktitle = {IEEE International Conference on Automatic Face and Gesture Recognition (FG)}, month = may, doi = {10.1109/FG47880.2020.00011} } - [J2]

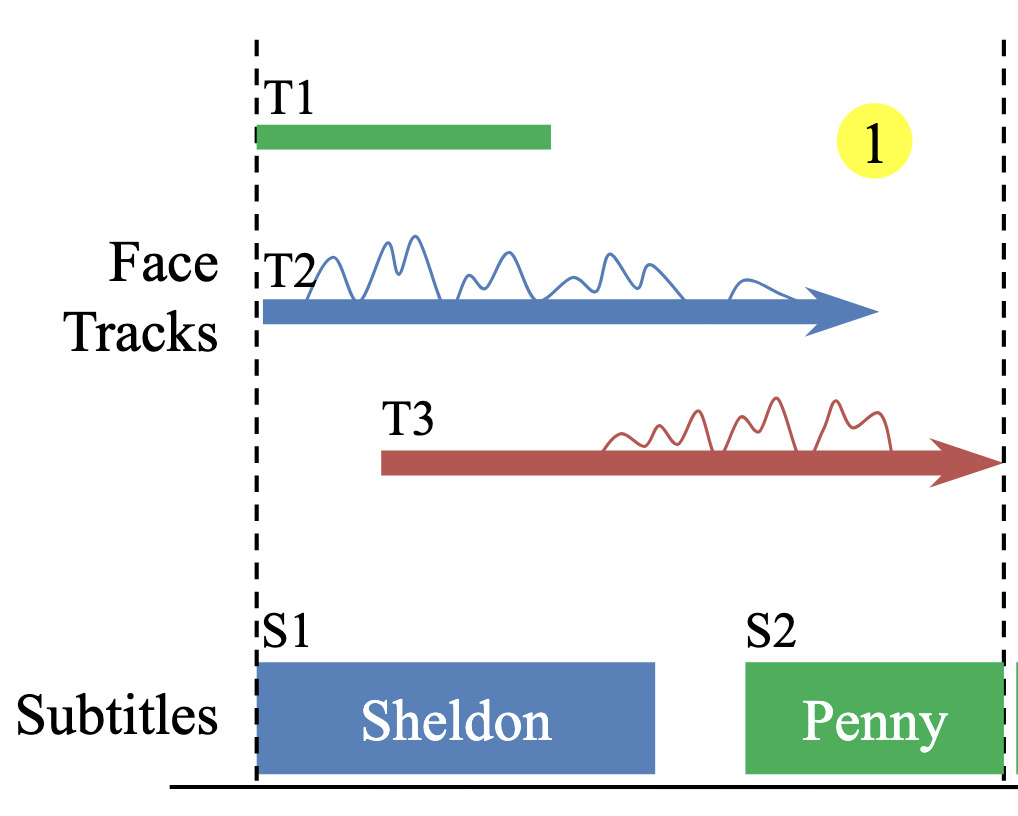

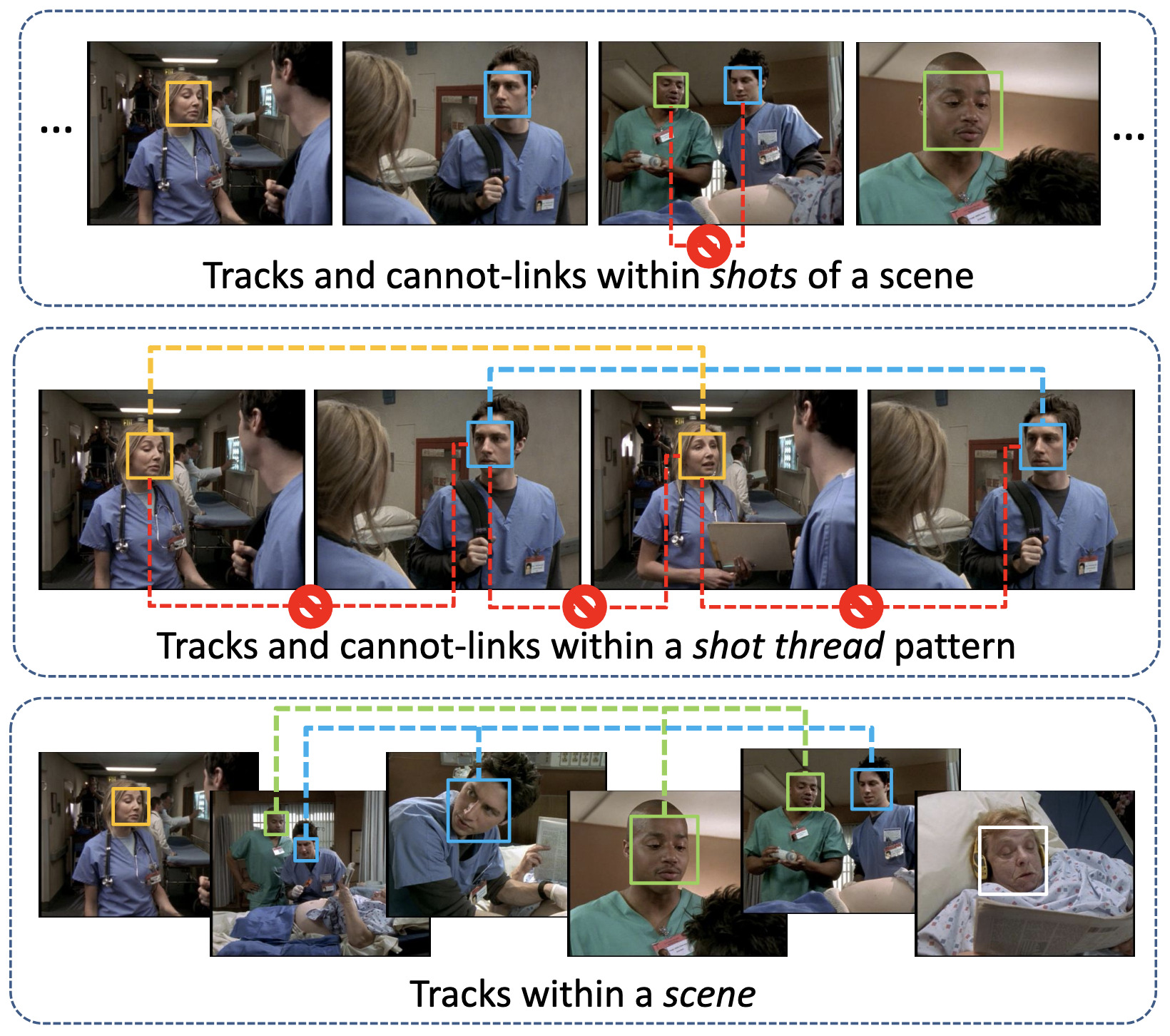

Video Face Clustering with Self-Supervised Representation LearningIEEE Transactions on Biometrics (T-BIOM), May 2020

Video Face Clustering with Self-Supervised Representation LearningIEEE Transactions on Biometrics (T-BIOM), May 2020Characters are a key component of understanding the story conveyed in TV series and movies. With the rise of advanced deep face models, identifying face images may seem like a solved problem. However, as face detectors get better, clustering and identification need to be revisited to address increasing diversity in facial appearance. In this paper, we propose unsupervised methods for feature refinement with application to video face clustering. Our emphasis is on distilling the essential information, identity, from the representations obtained using deep pre-trained face networks. We propose a self-supervised Siamese network that can be trained without the need for video/track based supervision, that can also be applied to image collections. We evaluate our methods on three video face clustering datasets. Thorough experiments including generalization studies show that our methods outperform current state-of-the-art methods on all datasets. The datasets and code are available at https://github.com/vivoutlaw/SSIAM.

@article{Sharma2019_TBIOM, author = {Sharma, Vivek and Tapaswi, Makarand and Sarfraz, M. Saquib and Stiefelhagen, Rainer}, title = {{Video Face Clustering with Self-Supervised Representation Learning}}, year = {2020}, journal = {IEEE Transactions on Biometrics (T-BIOM)}, volume = {4}, pages = {145-157}, doi = {10.1109/TBIOM.2019.2947264} }

2019

- [C23]

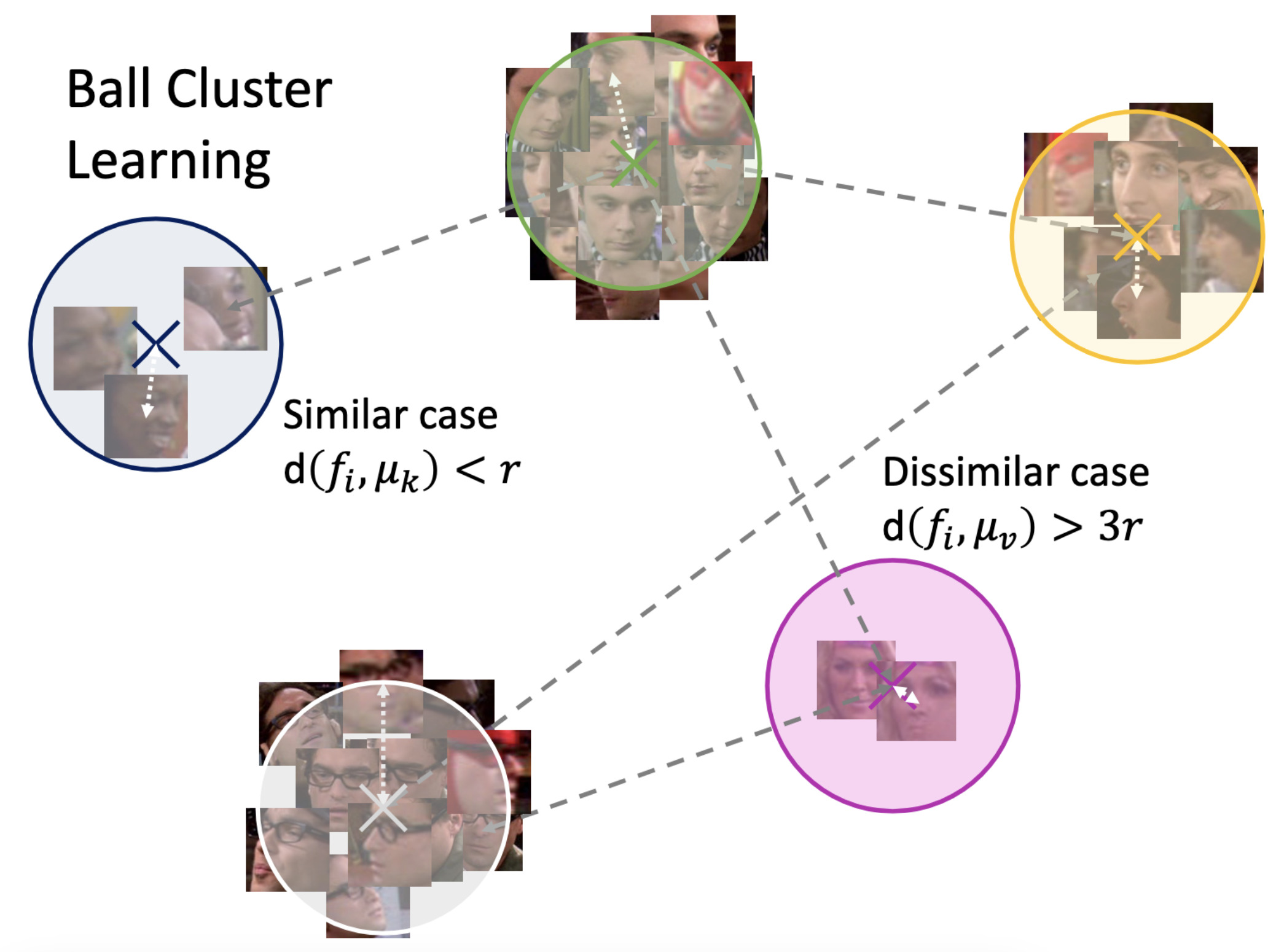

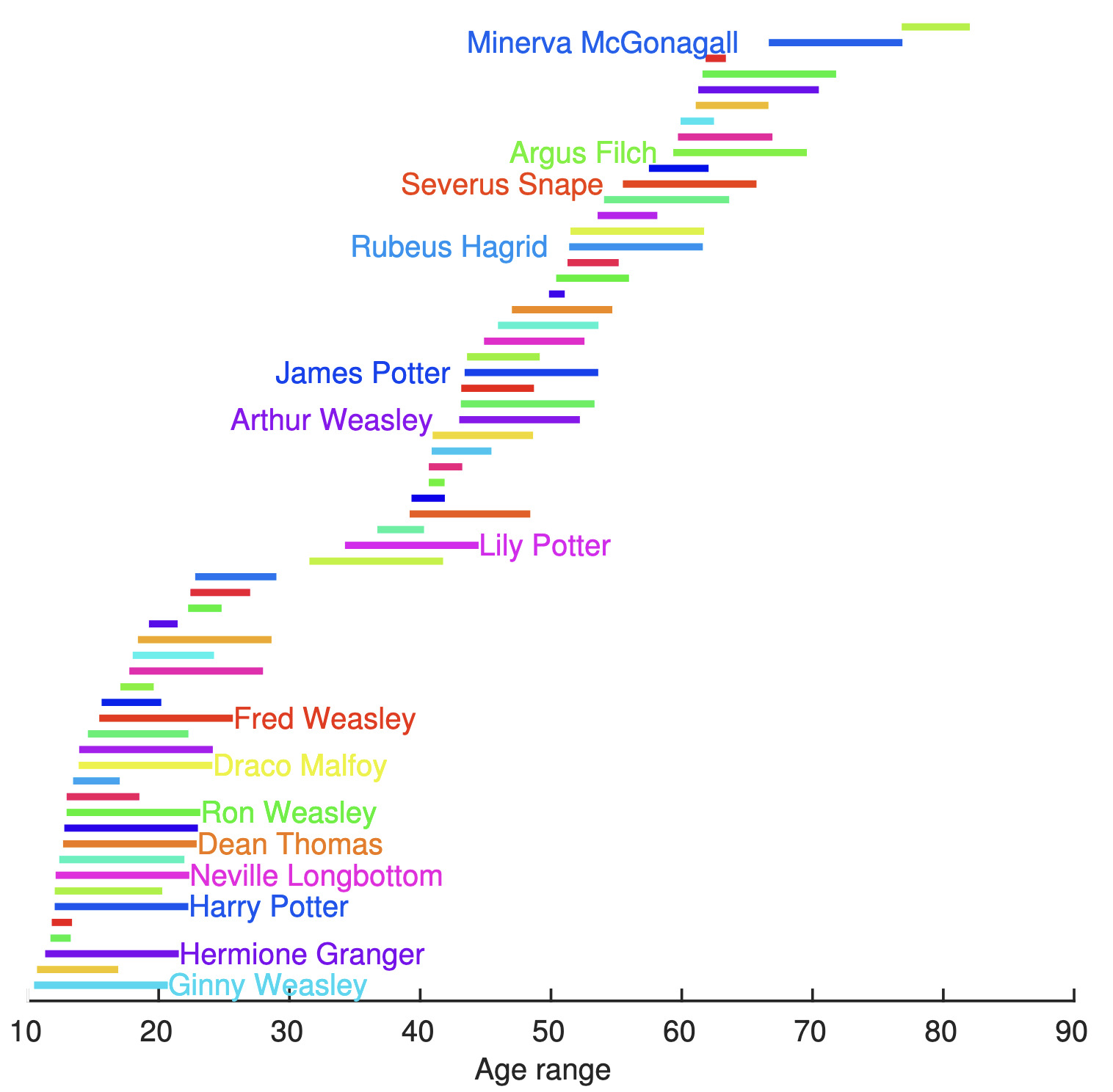

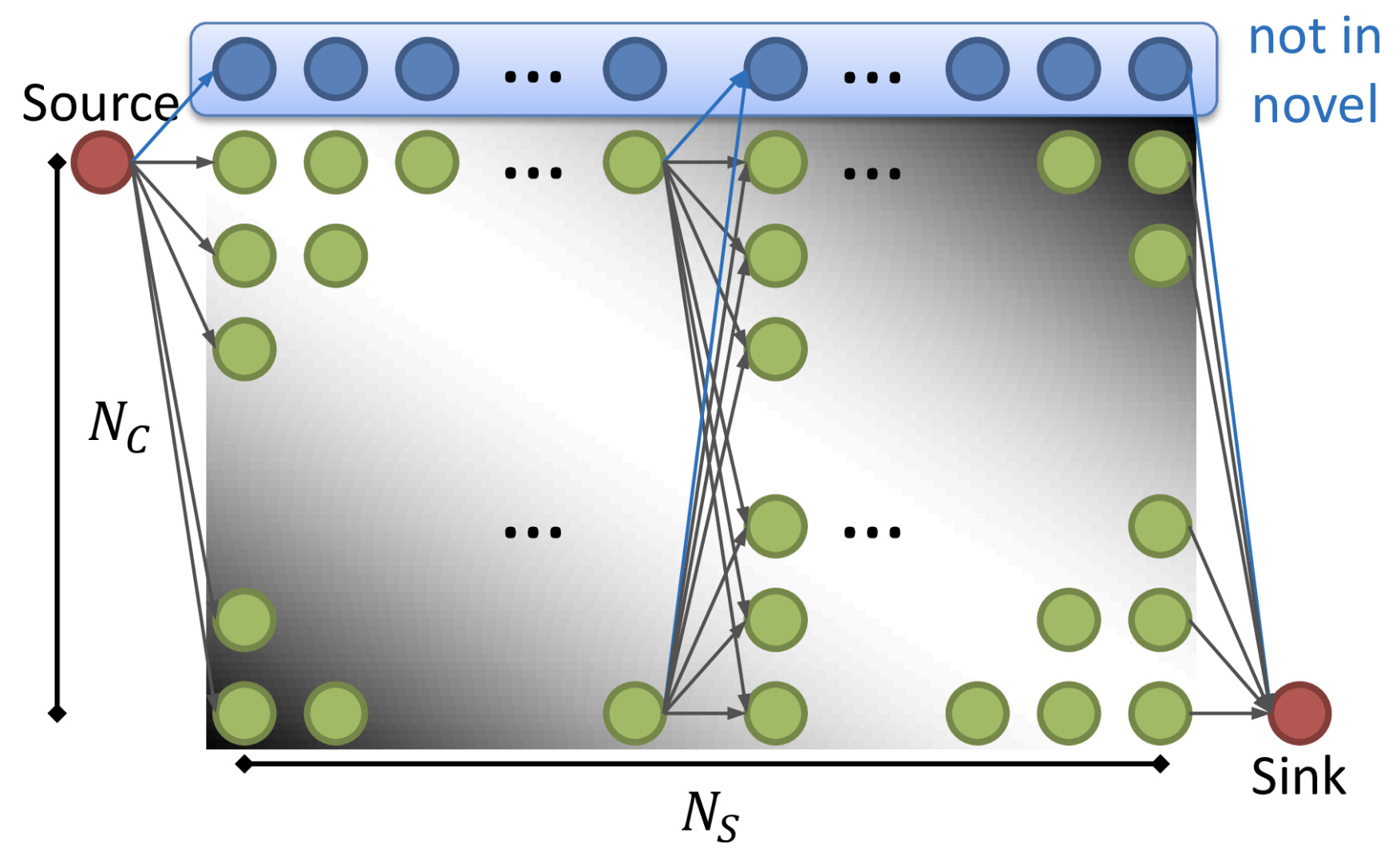

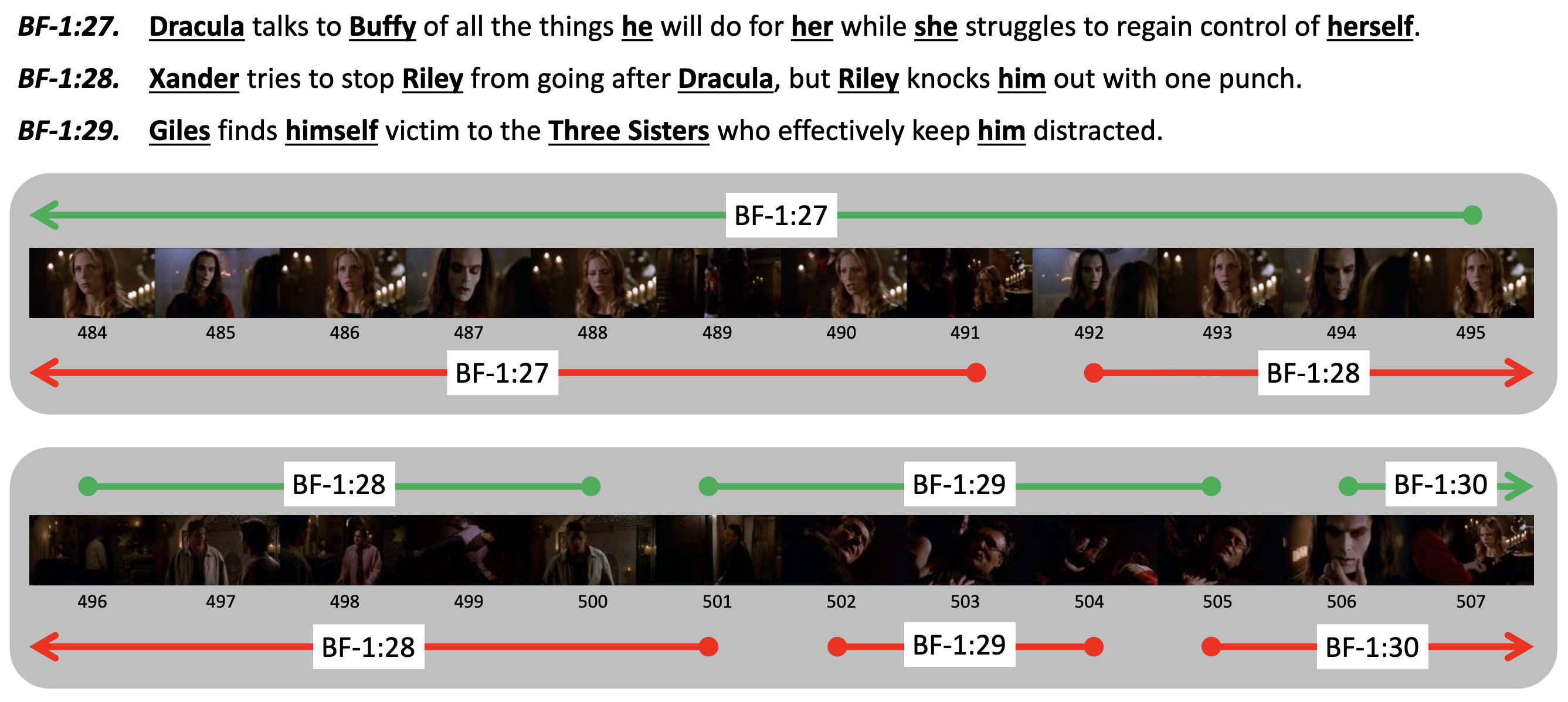

Video Face Clustering with Unknown Number of ClustersMakarand Tapaswi, Marc T. Law, and Sanja FidlerIn International Conference on Computer Vision (ICCV), Oct 2019

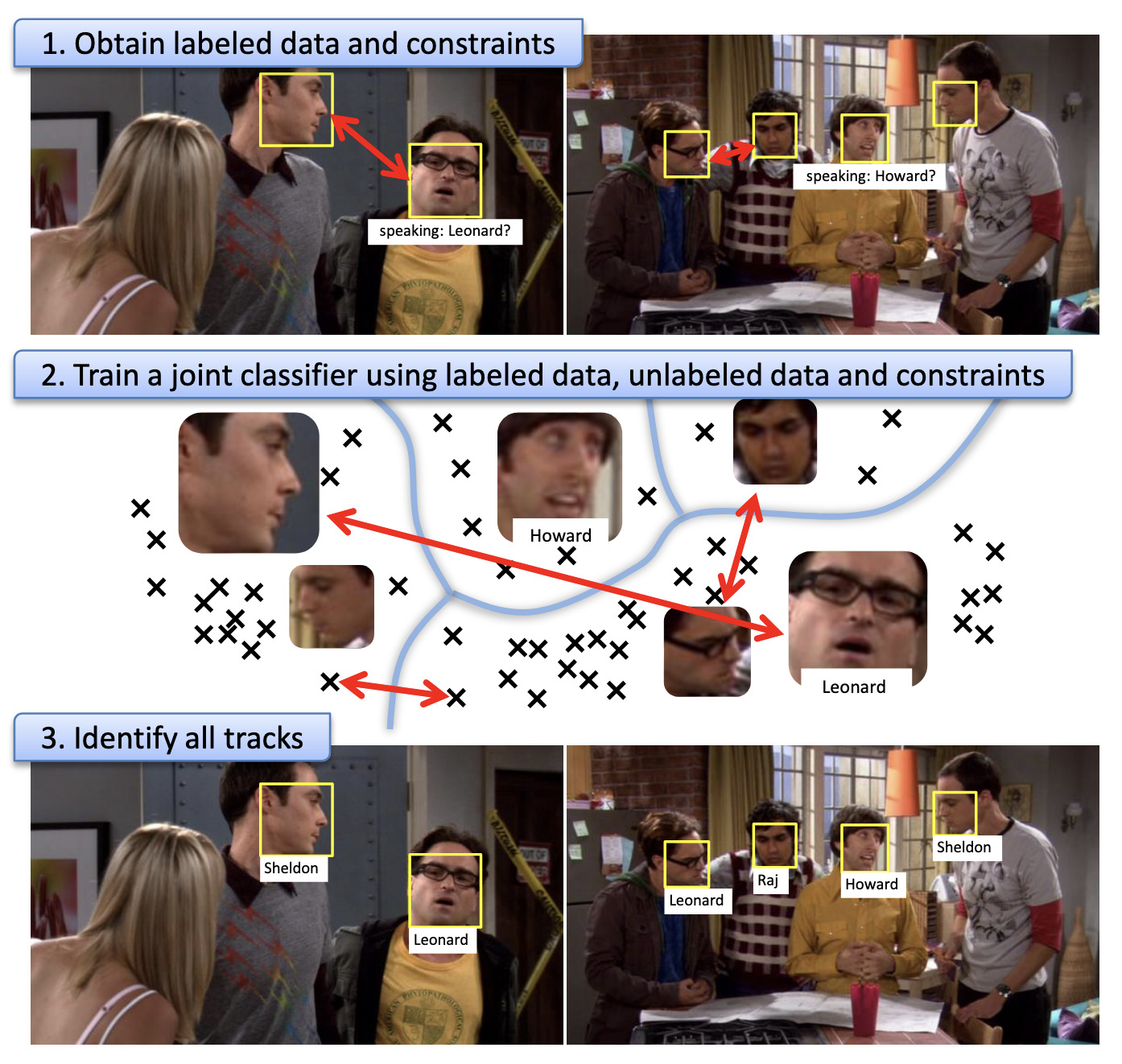

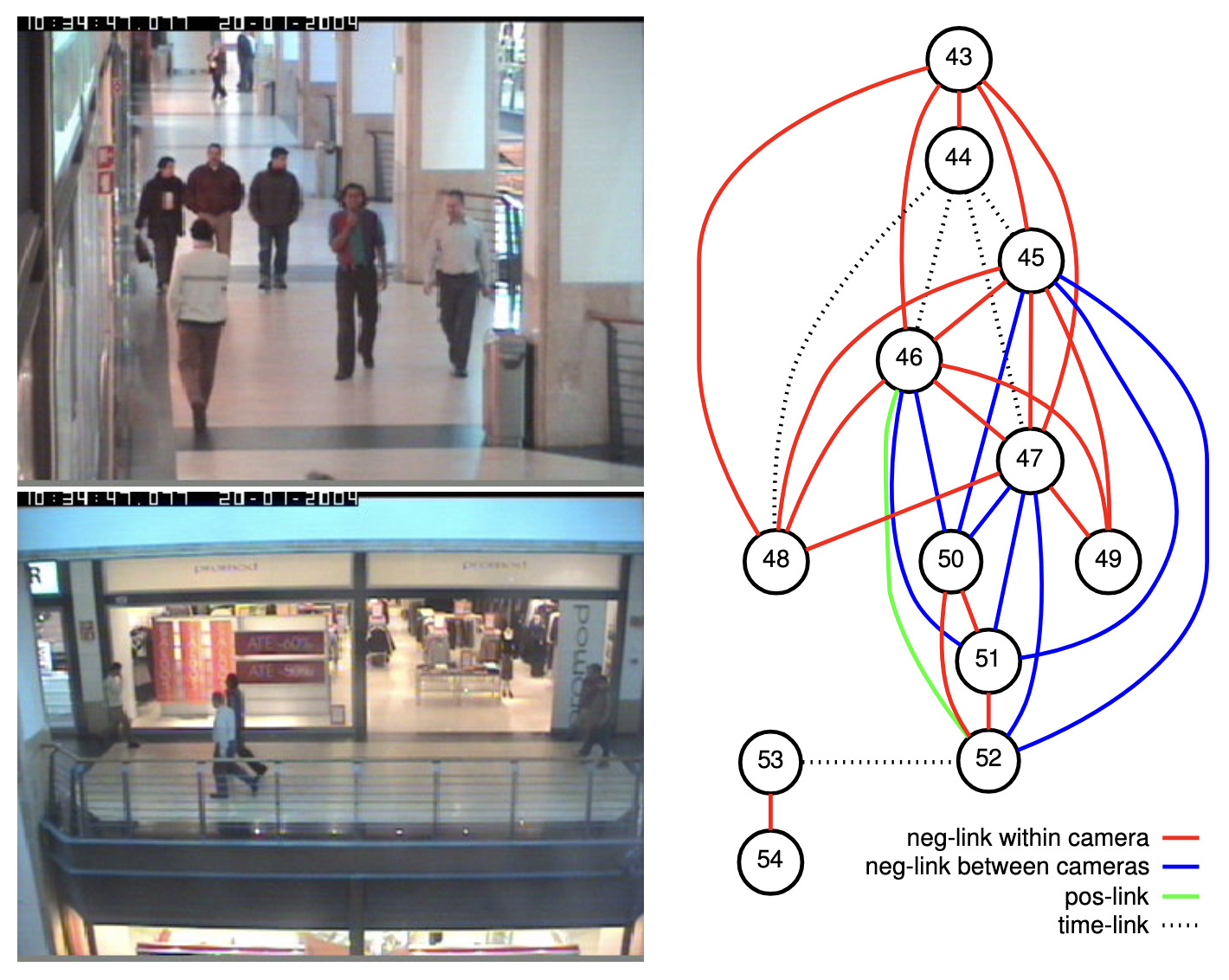

Video Face Clustering with Unknown Number of ClustersMakarand Tapaswi, Marc T. Law, and Sanja FidlerIn International Conference on Computer Vision (ICCV), Oct 2019Understanding videos such as TV series and movies requires analyzing who the characters are and what they are doing. We address the challenging problem of clustering face tracks based on their identity. Different from previous work in this area, we choose to operate in a realistic and difficult setting where: (i) the number of characters is not known a priori; and (ii) face tracks belonging to minor or background characters are not discarded. To this end, we propose Ball Cluster Learning (BCL), a supervised approach to carve the embedding space into balls of equal size, one for each cluster. The learned ball radius is easily translated to a stopping criterion for iterative merging algorithms. This gives BCL the ability to estimate the number of clusters as well as their assignment, achieving promising results on commonly used datasets. We also present a thorough discussion of how existing metric learning literature can be adapted for this task.

@inproceedings{Tapaswi2019_BallClustering, author = {Tapaswi, Makarand and Law, Marc T. and Fidler, Sanja}, title = {{Video Face Clustering with Unknown Number of Clusters}}, year = {2019}, booktitle = {International Conference on Computer Vision (ICCV)}, month = oct, doi = {10.1109/ICCV.2019.00513} } - [C22]

HowTo100M: Learning a Text-Video Embedding by Watching Hundred Million Narrated Video ClipsAntoine Miech, Dimitri Zhukov, Jean-Baptiste Alayrac, Makarand Tapaswi, Ivan Laptev, and Josef SivicIn International Conference on Computer Vision (ICCV), Oct 2019

HowTo100M: Learning a Text-Video Embedding by Watching Hundred Million Narrated Video ClipsAntoine Miech, Dimitri Zhukov, Jean-Baptiste Alayrac, Makarand Tapaswi, Ivan Laptev, and Josef SivicIn International Conference on Computer Vision (ICCV), Oct 2019Learning text-video embeddings usually requires a dataset of video clips with manually provided captions. However, such datasets are expensive and time consuming to create and therefore difficult to obtain on a large scale. In this work, we propose instead to learn such embeddings from video data with readily available natural language annotations in the form of automatically transcribed narrations. The contributions of this work are three-fold. First, we introduce HowTo100M: a large-scale dataset of 136 million video clips sourced from 1.22M narrated instructional web videos depicting humans performing and describing over 23k different visual tasks. Our data collection procedure is fast, scalable and does not require any additional manual annotation. Second, we demonstrate that a text-video embedding trained on this data leads to state-of-the-art results for text-to-video retrieval and action localization on instructional video datasets such as YouCook2 or CrossTask. Finally, we show that this embedding transfers well to other domains: fine-tuning on generic Youtube videos (MSR-VTT dataset) and movies (LSMDC dataset) outperforms models trained on these datasets alone. Our dataset, code and models are publicly available.

@inproceedings{Miech2019_HowTo100M, author = {Miech, Antoine and Zhukov, Dimitri and Alayrac, Jean-Baptiste and Tapaswi, Makarand and Laptev, Ivan and Sivic, Josef}, title = {{HowTo100M: Learning a Text-Video Embedding by Watching Hundred Million Narrated Video Clips}}, year = {2019}, booktitle = {International Conference on Computer Vision (ICCV)}, month = oct, doi = {10.1109/ICCV.2019.00272} } - [C21]

Self-Supervised Learning of Face Representations for Video Face ClusteringIn IEEE International Conference on Automatic Face and Gesture Recognition (FG), May 2019🏆 Best Paper Award

Self-Supervised Learning of Face Representations for Video Face ClusteringIn IEEE International Conference on Automatic Face and Gesture Recognition (FG), May 2019🏆 Best Paper AwardAnalyzing the story behind TV series and movies often requires understanding who the characters are and what they are doing. With improving deep face models, this may seem like a solved problem. However, as face detectors get better, clustering/identification needs to be revisited to address increasing diversity in facial appearance. In this paper, we address video face clustering using unsupervised methods. Our emphasis is on distilling the essential information, identity, from the representations obtained using deep pre-trained face networks. We propose a self-supervised Siamese network that can be trained without the need for video/track based supervision, and thus can also be applied to image collections. We evaluate our proposed method on three video face clustering datasets. The experiments show that our methods outperform current state-of-the-art methods on all datasets. Video face clustering is lacking a common benchmark as current works are often evaluated with different metrics and/or different sets of face tracks. Our datasets and code will be made available for enabling fair comparisons in the future.

@inproceedings{Sharma2019_FaceCluster, author = {Sharma, Vivek and Tapaswi, Makarand and Sarfraz, Saquib and Stiefelhagen, Rainer}, title = {{Self-Supervised Learning of Face Representations for Video Face Clustering}}, year = {2019}, booktitle = {IEEE International Conference on Automatic Face and Gesture Recognition (FG)}, month = may, doi = {10.1109/FG.2019.8756609} } - [C20]

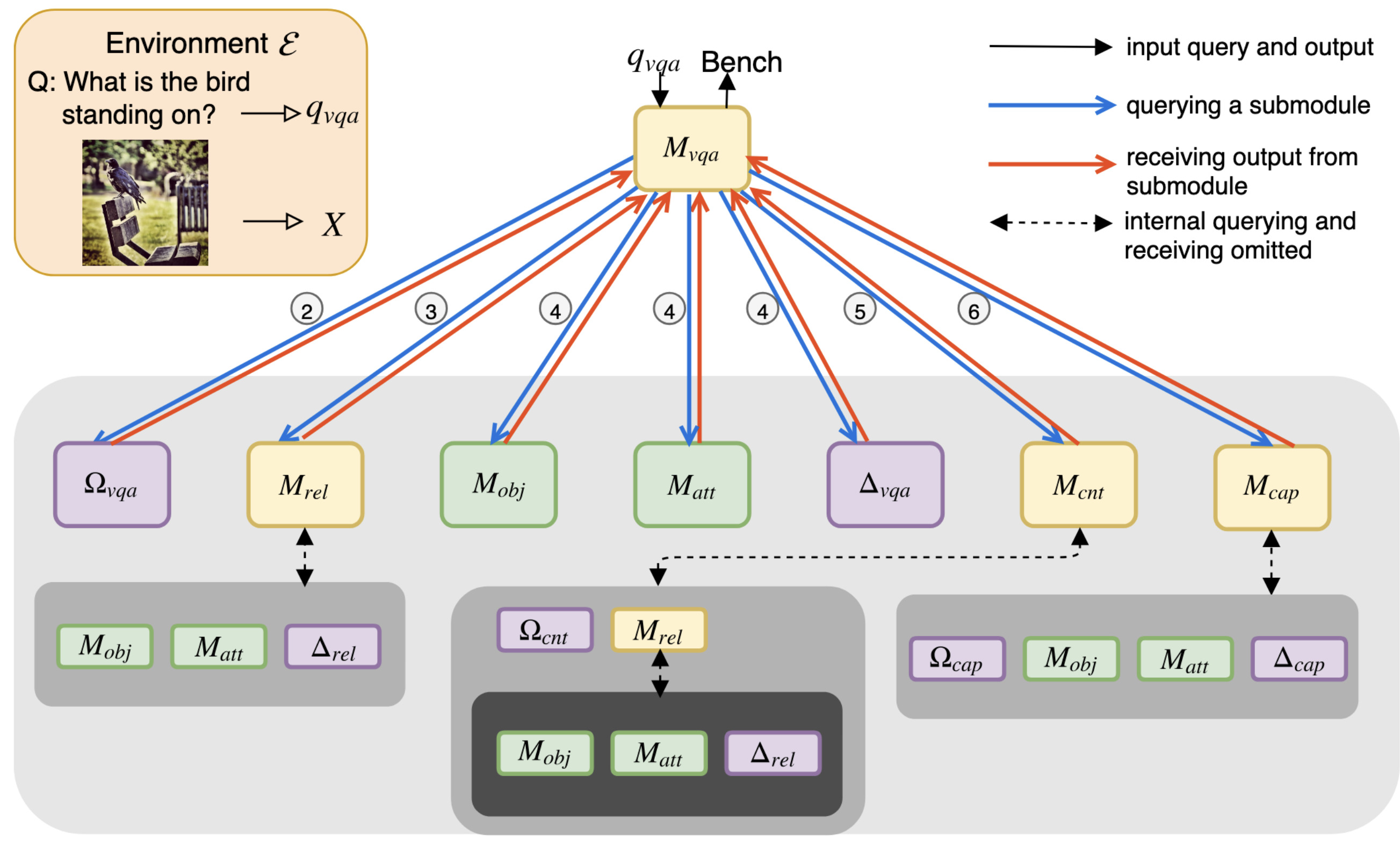

Visual Reasoning by Progressive Module NetworksSeung Wook Kim, Makarand Tapaswi, and Sanja FidlerIn International Conference on Learning Representations (ICLR), May 2019

Visual Reasoning by Progressive Module NetworksSeung Wook Kim, Makarand Tapaswi, and Sanja FidlerIn International Conference on Learning Representations (ICLR), May 2019Humans learn to solve tasks of increasing complexity by building on top of previously acquired knowledge. Typically, there exists a natural progression in the tasks that we learn – most do not require completely independent solutions, but can be broken down into simpler subtasks. We propose to represent a solver for each task as a neural module that calls existing modules (solvers for simpler tasks) in a functional program-like manner. Lower modules are a black box to the calling module, and communicate only via a query and an output. Thus, a module for a new task learns to query existing modules and composes their outputs in order to produce its own output. Our model effectively combines previous skill-sets, does not suffer from forgetting, and is fully differentiable. We test our model in learning a set of visual reasoning tasks, and demonstrate improved performances in all tasks by learning progressively. By evaluating the reasoning process using human judges, we show that our model is more interpretable than an attention-based baseline.

@inproceedings{Kim2019_PMN, author = {Kim, Seung Wook and Tapaswi, Makarand and Fidler, Sanja}, title = {{Visual Reasoning by Progressive Module Networks}}, year = {2019}, booktitle = {International Conference on Learning Representations (ICLR)}, month = may } - [P1]

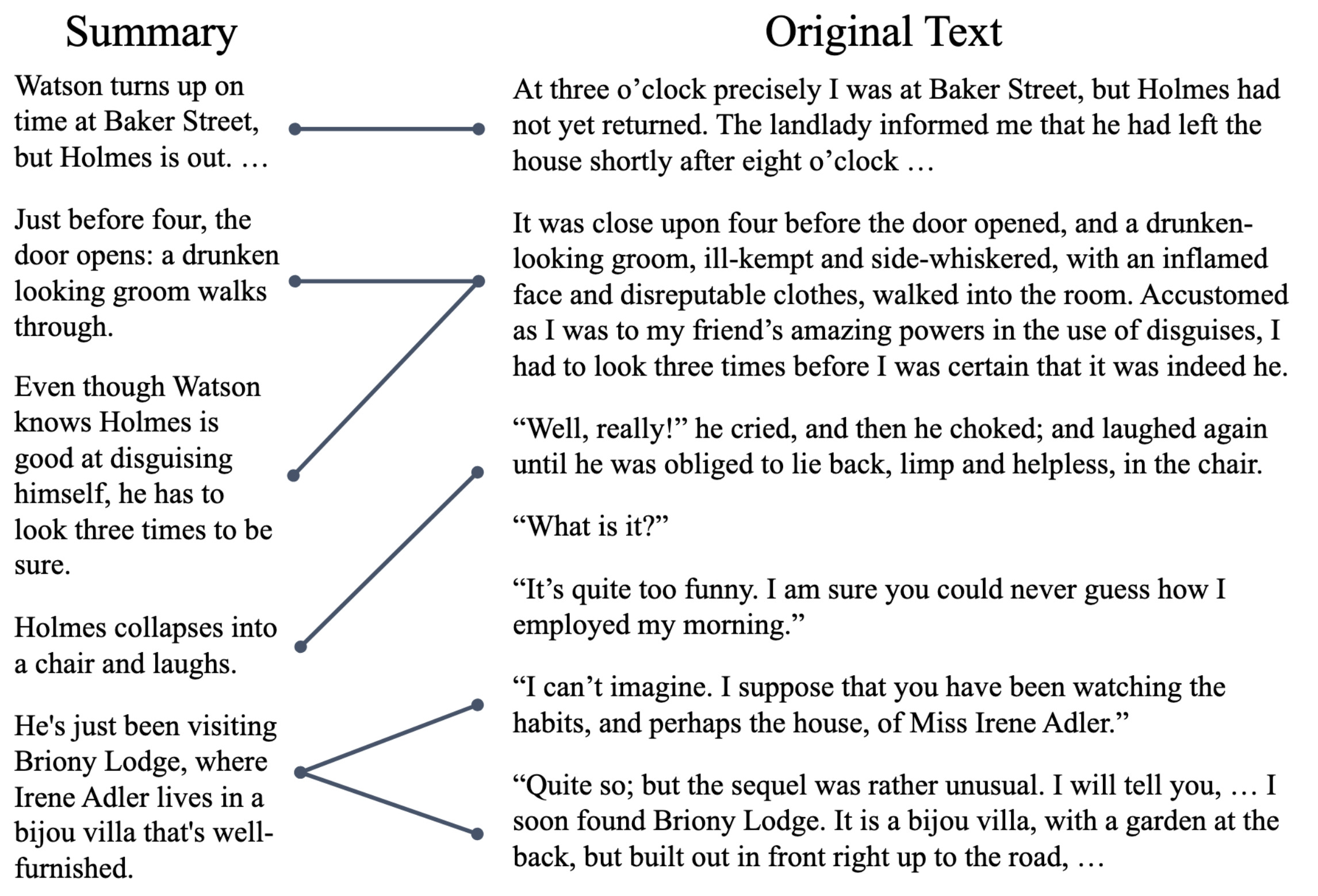

The Shmoop Corpus: A Dataset of Stories with Loosely Aligned SummariesAtef Chaudhury, Makarand Tapaswi, Seung Wook Kim, and Sanja FidlerarXiv:1912.13082, Dec 2019

The Shmoop Corpus: A Dataset of Stories with Loosely Aligned SummariesAtef Chaudhury, Makarand Tapaswi, Seung Wook Kim, and Sanja FidlerarXiv:1912.13082, Dec 2019Understanding stories is a challenging reading comprehension problem for machines as it requires reading a large volume of text and following long-range dependencies. In this paper, we introduce the Shmoop Corpus: a dataset of 231 stories that are paired with detailed multi-paragraph summaries for each individual chapter (7,234 chapters), where the summary is chronologically aligned with respect to the story chapter. From the corpus, we construct a set of common NLP tasks, including Cloze-form question answering and a simplified form of abstractive summarization, as benchmarks for reading comprehension on stories. We then show that the chronological alignment provides a strong supervisory signal that learning-based methods can exploit leading to significant improvements on these tasks. We believe that the unique structure of this corpus provides an important foothold towards making machine story comprehension more approachable.

@article{Chaudhury2019_Shmoop, author = {Chaudhury, Atef and Tapaswi, Makarand and Kim, Seung Wook and Fidler, Sanja}, title = {{The Shmoop Corpus: A Dataset of Stories with Loosely Aligned Summaries}}, year = {2019}, month = dec, journal = {arXiv:1912.13082} } - [W7]

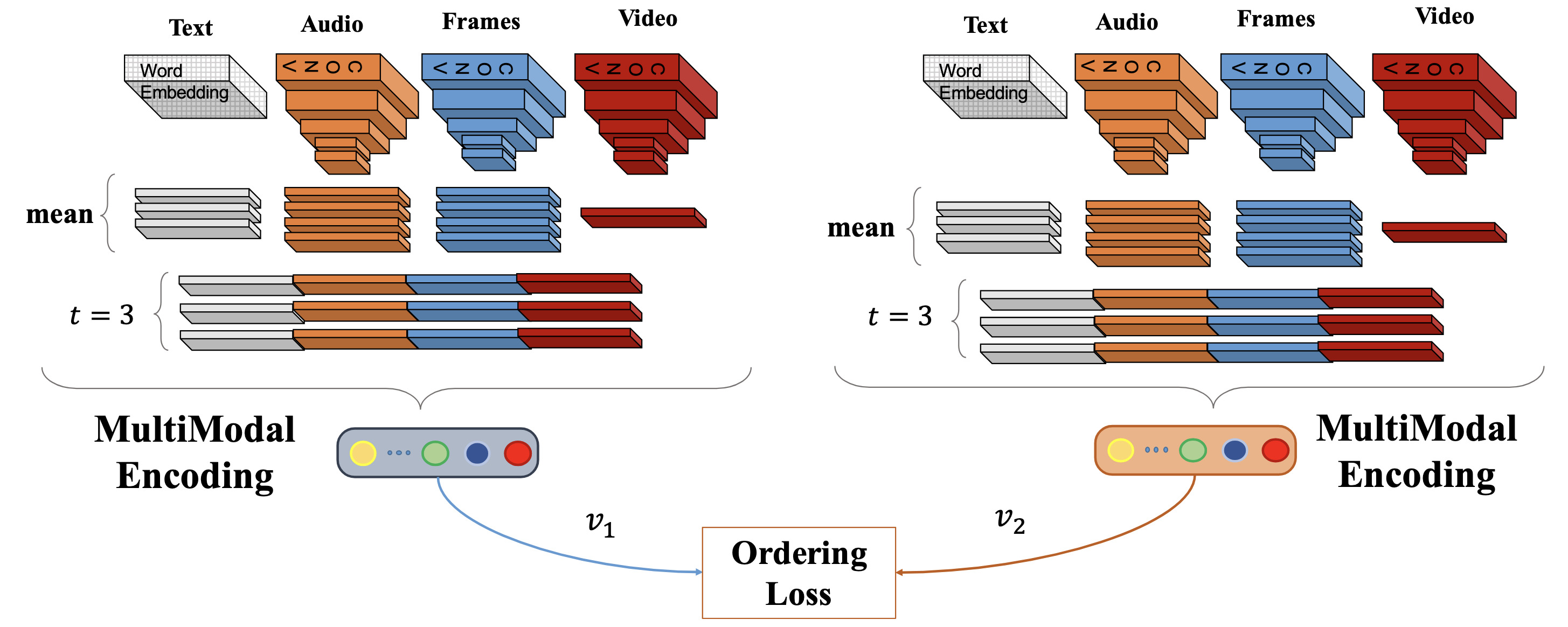

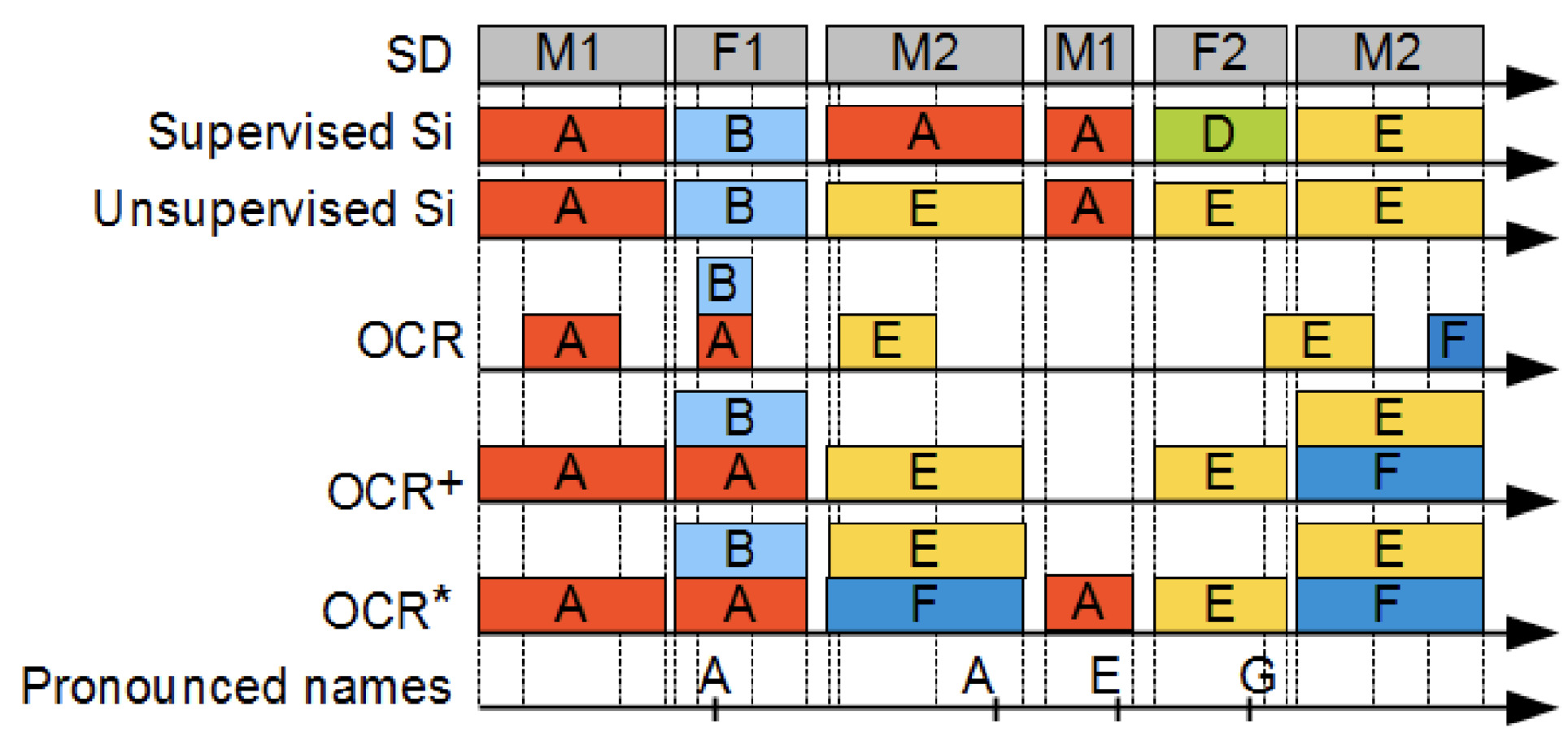

Deep Multimodal Feature Encoding for Video OrderingVivek Sharma, Makarand Tapaswi, and Rainer StiefelhagenIn ICCV Workshop on Large Scale Holistic Video Understanding, Dec 2019